Siqi Yi

Conversational Agents for Older Adults' Health: A Systematic Literature Review

Mar 29, 2025

Abstract: A vast and diverse literature studies Conversational Agents (CAs) for facilitating older adults' health. This body of work warrants a comprehensive review that summarizes the main findings and proposes directions for future research, yet few literature reviews have done so from a human-computer interaction (HCI) perspective. In this study, we present a survey of existing studies on CAs for older adults' health. Through a systematic review of 72 papers, this work reviews the characteristics of older adults examined in prior studies and analyzes participants' experiences and expectations of CAs for health. We found that (1) past research shows increasing interest in chatbots and voice assistants and has applied CAs in multiple roles in older adults' health; (2) older adults mainly showed low acceptance of CAs for health for various reasons, such as unstable effects, harm to independence, and privacy concerns; and (3) older adults expect CAs to support multiple functions, communicate using natural language, be personalized, and allow users full control. We also discuss the implications of these findings.

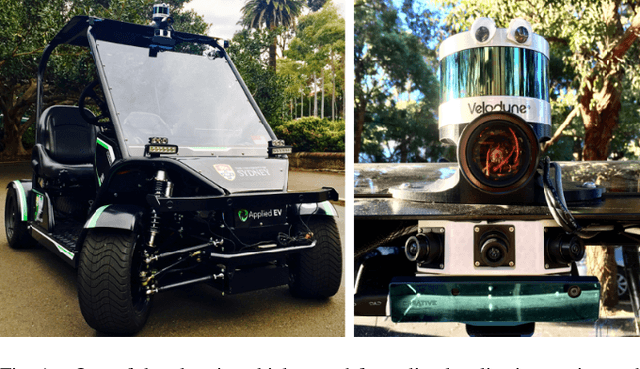

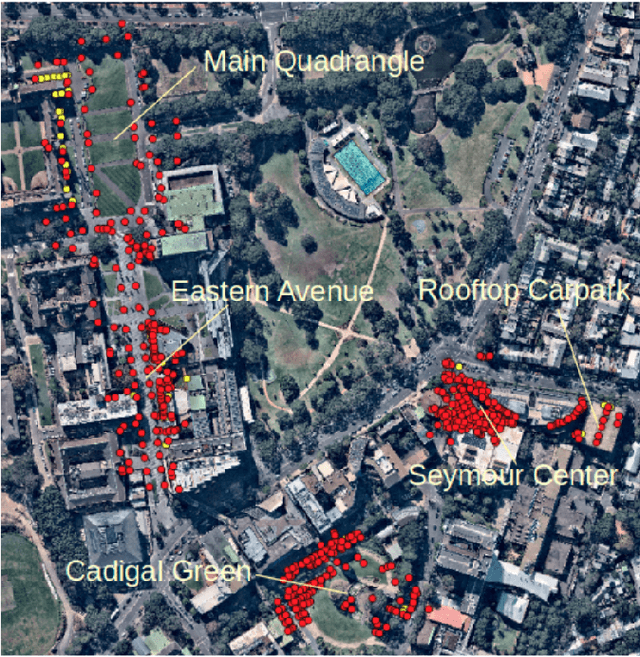

A Persistent and Context-aware Behavior Tree Framework for Multi Sensor Localization in Autonomous Driving

Mar 26, 2021

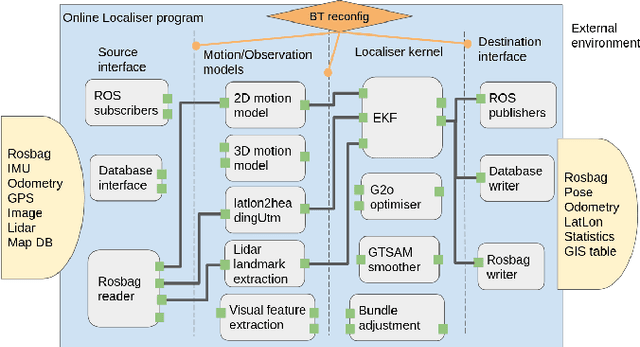

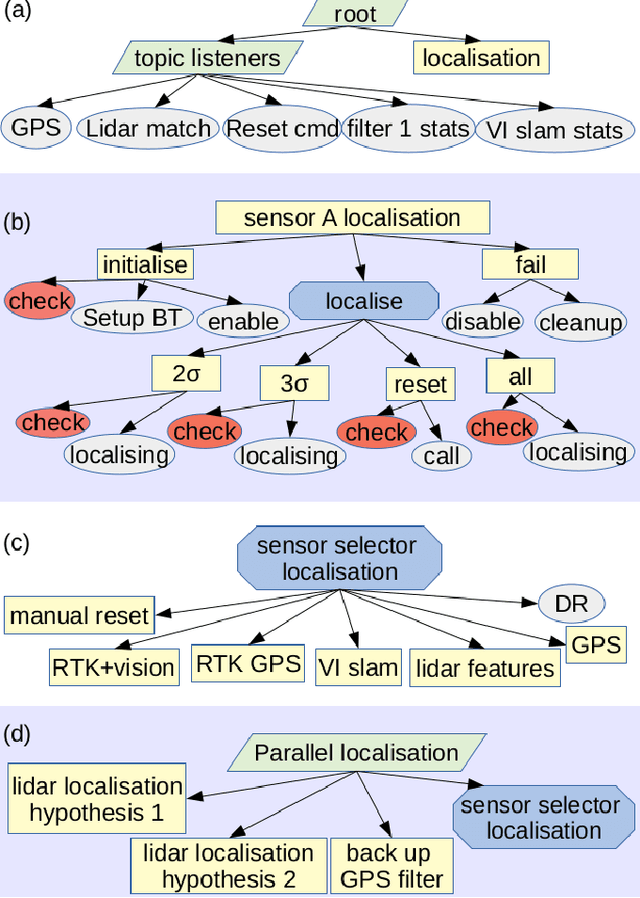

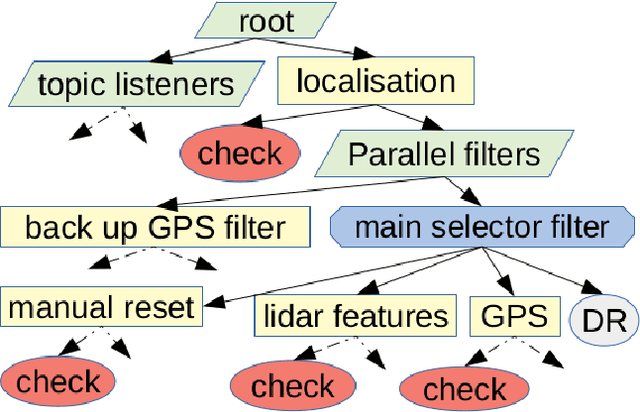

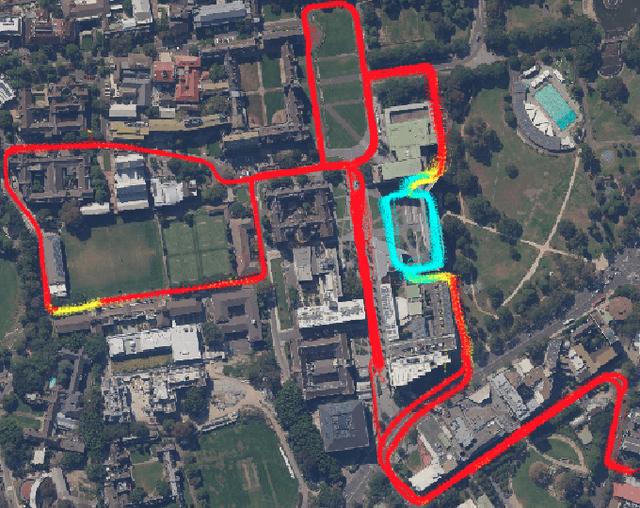

Abstract: Robust and persistent localisation is essential for ensuring the safe operation of autonomous vehicles. When operating in large and diverse urban driving environments, autonomous vehicles are frequently exposed to situations that violate the assumptions of algorithms, suffer from the failure of one or more sensors, or encounter other events that lead to a loss of localisation. This paper proposes the use of a behavior tree framework that can monitor localisation health metrics and trigger intelligent responses such as sensor switching and loss recovery. The algorithm presented selects the best available sensor data at a given time and location, and can perform a series of actions to react to adverse situations. The behavior tree encapsulates the system-level logic and issues the commands that make up the intelligent behaviors, so that the localisation "actuators" (data association, optimisation, filters, etc.) can perform decoupled actions without needing context. Experimental results validating the algorithms are presented using the University of Sydney Campus dataset, which was collected weekly over an 18-month period. A video showing the online localisation process can be found here: https://youtu.be/353uKqXLV5g

Geographical Map Registration and Fusion of Lidar-Aerial Orthoimagery in GIS

Apr 19, 2019

Abstract: Centimeter-level, globally accurate and consistent maps for autonomous vehicle navigation have long been achievable with on-board real-time kinematic (RTK) GPS in open areas. In urban environments, however, GPS suffers from multipath and blockage in urban canyons, under bridges, inside tunnels and in underground environments. In this paper we present strategies to efficiently register local maps in geographical coordinate systems through the tactical integration of GPS and information extracted from precisely geo-referenced, high-resolution aerial orthogonal imagery. Dense lidar point clouds obtained from a moving vehicle are projected down to the horizontal plane, accurately registered and overlaid on aerial orthoimagery. Sparse, robust and long-term pole-like landmarks are used as anchor points to link lidar and aerial image sensing, and to constrain the spatial uncertainties of the remaining lidar points that cannot be directly measured and identified. We achieved 15-75 cm absolute average global accuracy using precisely geo-referenced aerial imagery as ground truth. This is valuable in enabling the fusion of ground vehicle on-board sensor features with features extracted from aerial images, such as traffic and lane markings. It is also useful for cooperative sensing to have an unbiased and accurate global reference. Experimental results are presented demonstrating the accuracy and consistency of the maps when operating in large areas.

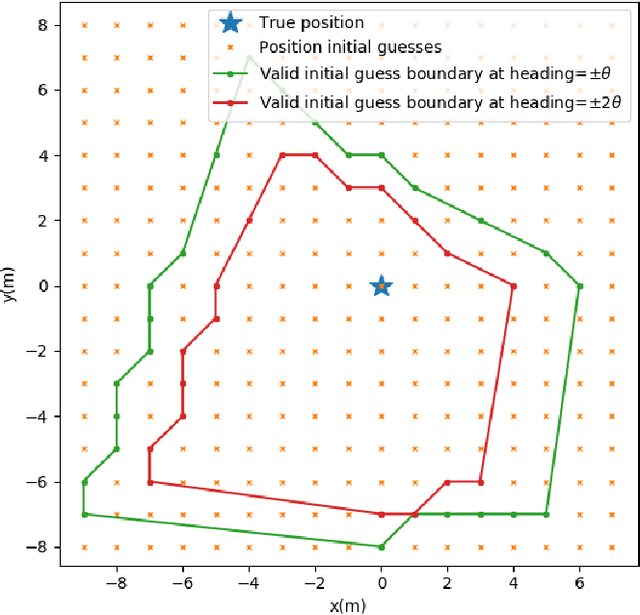

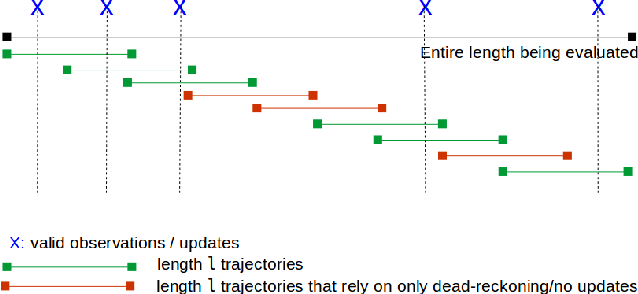

Metrics for the Evaluation of Localisation Robustness

Apr 18, 2019

Abstract: Robustness and safety are crucial properties for the real-world application of autonomous vehicles. One of the most critical components of any autonomous system is localisation. During the last 20 years there has been significant progress in this area with the introduction of very efficient algorithms for mapping, localisation and SLAM. Many of these algorithms present impressive demonstrations for a particular domain, but fail to operate reliably when the operating environment changes. Robustness has not received enough attention, and localisation systems for self-driving vehicle applications are seldom evaluated for it. In this paper we propose novel metrics to effectively quantify localisation robustness with or without an accurate ground truth. The experimental results present a comprehensive analysis of the application of these metrics to a number of well-known localisation strategies.
