Simon Janssen

Exploring the Capabilities and Limits of 3D Monocular Object Detection -- A Study on Simulation and Real World Data

May 15, 2020

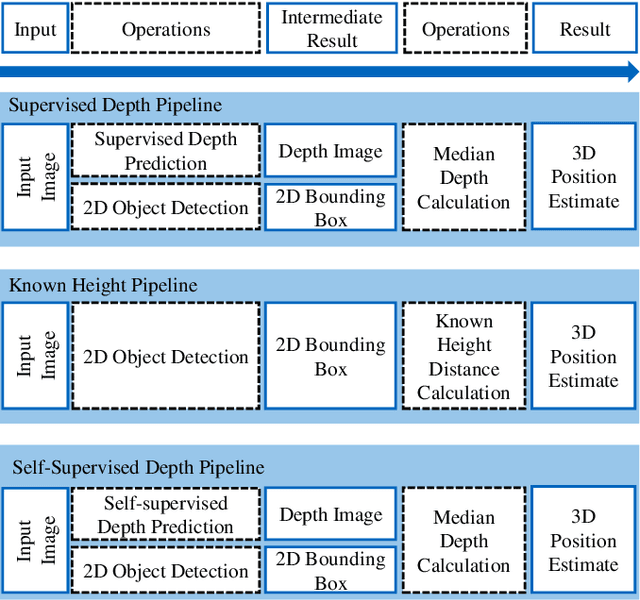

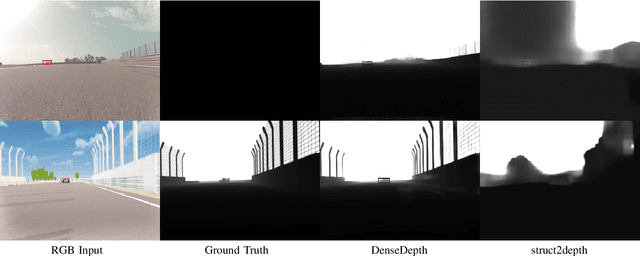

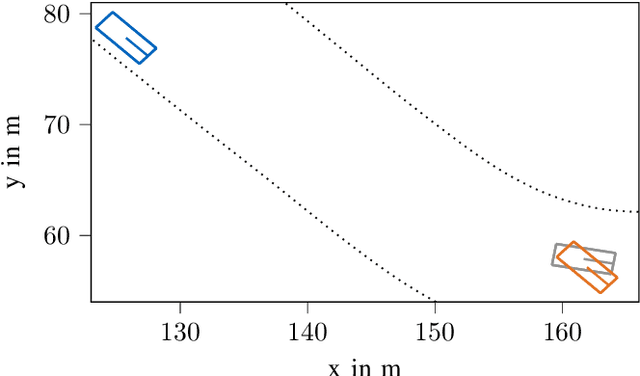

Abstract: 3D object detection based on monocular camera data is a key enabler for autonomous driving. The task, however, is ill-posed due to the lack of depth information in 2D images. Recent deep learning methods show promising results in recovering depth information from single images by learning priors about the environment. Several competing strategies tackle this problem. In addition to the network design, the major difference between these approaches lies in the choice of a supervised or self-supervised optimization loss, each of which requires different data and ground truth information. In this paper, we evaluate the performance of a 3D object detection pipeline that can be parameterized with different depth estimation configurations. We implement three depth estimation approaches: a simple distance calculation based on camera intrinsics and 2D bounding box size, a self-supervised learning approach, and a supervised learning approach. Ground truth depth information cannot be recorded reliably in real-world scenarios, which shifts our training focus to simulation data. In simulation, labeling and ground truth generation can be automated. We evaluate the detection pipeline on simulator data and on a real-world sequence from an autonomous vehicle on a race track. We investigate the benefit of simulation training for real-world application and discuss the advantages and drawbacks of the different depth estimation strategies.
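To illustrate the simplest of the three strategies, the following is a minimal sketch of a distance calculation from camera intrinsics and 2D bounding box size under a pinhole camera model. It is not the paper's implementation. The names `Intrinsics`, `distance_from_bbox`, and `backproject_center` are hypothetical, and the sketch assumes that the real-world height of the detected object is known in advance, an assumption the abstract does not state.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical container)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


BBox = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def distance_from_bbox(intr: Intrinsics, bbox: BBox, real_height_m: float) -> float:
    """Estimate depth Z from similar triangles: Z = fy * H / h.

    H is the assumed real object height in meters and h is the
    bounding box height in pixels.
    """
    _, y_min, _, y_max = bbox
    pixel_height = y_max - y_min
    if pixel_height <= 0:
        raise ValueError("bounding box must have positive height")
    return intr.fy * real_height_m / pixel_height


def backproject_center(intr: Intrinsics, bbox: BBox, depth_m: float) -> Tuple[float, float, float]:
    """Back-project the bounding box center to a 3D point in the camera frame."""
    x_min, y_min, x_max, y_max = bbox
    u = 0.5 * (x_min + x_max)
    v = 0.5 * (y_min + y_max)
    x = (u - intr.cx) * depth_m / intr.fx
    y = (v - intr.cy) * depth_m / intr.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Illustrative values only; they are not taken from the paper.
    camera = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
    box = (700.0, 335.0, 780.0, 385.0)
    depth = distance_from_bbox(camera, box, real_height_m=1.5)
    print(depth, backproject_center(camera, box, depth))
```

An estimate of this kind depends directly on the accuracy of the 2D box and on the assumed object height, so it works best when the object class has a fixed, known size.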
