Simon Goldstein

AI Survival Stories: a Taxonomic Analysis of AI Existential Risk

Jan 14, 2026

Abstract: Since the release of ChatGPT, there has been a lot of debate about whether AI systems pose an existential risk to humanity. This paper develops a general framework for thinking about the existential risk of AI systems. We analyze a two premise argument that AI systems pose a threat to humanity. Premise one: AI systems will become extremely powerful. Premise two: if AI systems become extremely powerful, they will destroy humanity. We use these two premises to construct a taxonomy of survival stories, in which humanity survives into the far future. In each survival story, one of the two premises fails. Either scientific barriers prevent AI systems from becoming extremely powerful; or humanity bans research into AI systems, thereby preventing them from becoming extremely powerful; or extremely powerful AI systems do not destroy humanity, because their goals prevent them from doing so; or extremely powerful AI systems do not destroy humanity, because we can reliably detect and disable systems that have the goal of doing so. We argue that different survival stories face different challenges. We also argue that different survival stories motivate different responses to the threats from AI. Finally, we use our taxonomy to produce rough estimates of P(doom), the probability that humanity will be destroyed by AI.

A Case for AI Consciousness: Language Agents and Global Workspace Theory

Oct 15, 2024

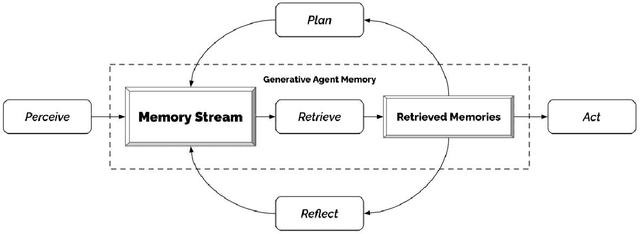

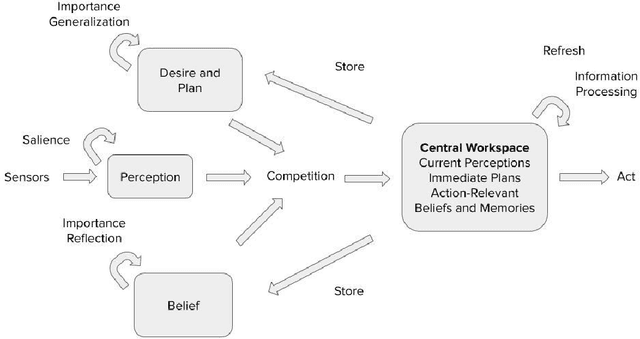

Abstract: It is generally assumed that existing artificial systems are not phenomenally conscious, and that the construction of phenomenally conscious artificial systems would require significant technological progress if it is possible at all. We challenge this assumption by arguing that if Global Workspace Theory (GWT) - a leading scientific theory of phenomenal consciousness - is correct, then instances of one widely implemented AI architecture, the artificial language agent, might easily be made phenomenally conscious if they are not already. Along the way, we articulate an explicit methodology for thinking about how to apply scientific theories of consciousness to artificial systems and employ this methodology to arrive at a set of necessary and sufficient conditions for phenomenal consciousness according to GWT.

AI Deception: A Survey of Examples, Risks, and Potential Solutions

Aug 28, 2023

Abstract: This paper argues that a range of current AI systems have learned how to deceive humans. We define deception as the systematic inducement of false beliefs in the pursuit of some outcome other than the truth. We first survey empirical examples of AI deception, discussing both special-use AI systems (including Meta's CICERO) built for specific competitive situations, and general-purpose AI systems (such as large language models). Next, we detail several risks from AI deception, such as fraud, election tampering, and losing control of AI systems. Finally, we outline several potential solutions to the problems posed by AI deception: first, regulatory frameworks should subject AI systems that are capable of deception to robust risk-assessment requirements; second, policymakers should implement bot-or-not laws; and finally, policymakers should prioritize the funding of relevant research, including tools to detect AI deception and to make AI systems less deceptive. Policymakers, researchers, and the broader public should work proactively to prevent AI deception from destabilizing the shared foundations of our society.
