Sigur de Vries

Kozax: Flexible and Scalable Genetic Programming in JAX

Feb 05, 2025

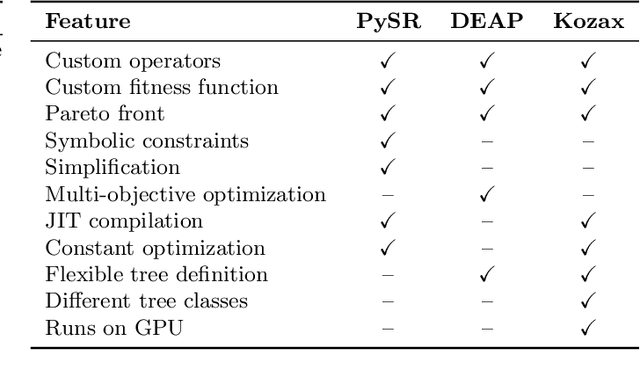

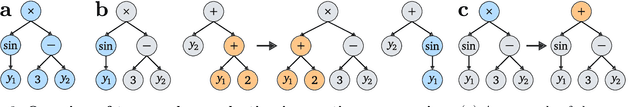

Abstract:Genetic programming is an optimization algorithm inspired by natural selection which automatically evolves the structure of computer programs. The resulting computer programs are interpretable and efficient compared to black-box models with fixed structure. The fitness evaluation in genetic programming suffers from high computational requirements, limiting the performance on difficult problems. To reduce the runtime, many implementations of genetic programming require a specific data format, making the applicability limited to specific problem classes. Consequently, there is no efficient genetic programming framework that is usable for a wide range of tasks. To this end, we developed Kozax, a genetic programming framework that evolves symbolic expressions for arbitrary problems. We implemented Kozax using JAX, a framework for high-performance and scalable machine learning, which allows the fitness evaluation to scale efficiently to large populations or datasets on GPU. Furthermore, Kozax offers constant optimization, custom operator definition and simultaneous evolution of multiple trees. We demonstrate successful applications of Kozax to discover equations of natural laws, recover equations of hidden dynamic variables and evolve a control policy. Overall, Kozax provides a general, fast, and scalable library to optimize white-box solutions in the realm of scientific computing.

Discovering Dynamic Symbolic Policies with Genetic Programming

Jun 04, 2024Abstract:Artificial intelligence (AI) techniques are increasingly being applied to solve control problems. However, control systems developed in AI are often black-box methods, in that it is not clear how and why they generate their outputs. A lack of transparency can be problematic for control tasks in particular, because it complicates the identification of biases or errors, which in turn negatively influences the user's confidence in the system. To improve the interpretability and transparency in control systems, the black-box structure can be replaced with white-box symbolic policies described by mathematical expressions. Genetic programming offers a gradient-free method to optimise the structure of non-differentiable mathematical expressions. In this paper, we show that genetic programming can be used to discover symbolic control systems. This is achieved by learning a symbolic representation of a function that transforms observations into control signals. We consider both systems that implement static control policies without memory and systems that implement dynamic memory-based control policies. In case of the latter, the discovered function becomes the state equation of a differential equation, which allows for evidence integration. Our results show that symbolic policies are discovered that perform comparably with black-box policies on a variety of control tasks. Furthermore, the additional value of the memory capacity in the dynamic policies is demonstrated on experiments where static policies fall short. Overall, we demonstrate that white-box symbolic policies can be optimised with genetic programming, while offering interpretability and transparency that lacks in black-box models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge