Shyam A

Immersive Virtual Reality Platform for Robot-Assisted Antenatal Ultrasound Scanning

Sep 07, 2023

Abstract: Maternal health remains a pervasive challenge in developing and underdeveloped countries. Inadequate access to basic antenatal Ultrasound (US) examinations, limited resources such as primary health services and infrastructure, and a lack of skilled healthcare professionals are the major concerns. To improve the quality of maternal care, robot-assisted antenatal US systems with teleoperable and autonomous capabilities were introduced. However, the existing teleoperation systems rely on standard video stream-based approaches that are constrained by limited immersion and scene awareness. Also, there is no prior work on autonomous antenatal robotic US systems that automate standardized scanning protocols. To that end, this paper introduces a novel Virtual Reality (VR) platform for robotic antenatal ultrasound, which enables sonologists to control a robotic arm over a wired network. The effectiveness of the system is enhanced by providing a reconstructed 3D view of the environment and immersing the user in a VR space. Also, the system facilitates a better understanding of the anatomical surfaces to perform pragmatic scans using 3D models. Further, the proposed robotic system also has autonomous capabilities; under the supervision of the sonologist, it can perform the standard six-step approach for obstetric US scanning recommended by the ISUOG. Using a 23-week fetal phantom, the proposed system was demonstrated to technology and academia experts at MEDICA 2022 as a part of the KUKA Innovation Award. Their positive feedback supports the feasibility of the system. It also gave insight into the improvements needed to make it a clinically viable system.

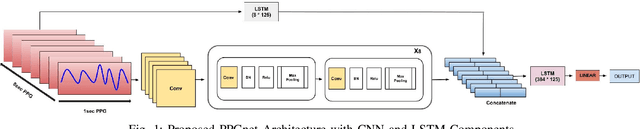

PPGnet: Deep Network for Device Independent Heart Rate Estimation from Photoplethysmogram

Mar 21, 2019

Abstract: Photoplethysmogram (PPG) is increasingly used to provide monitoring of the cardiovascular system under ambulatory conditions. Wearable devices like smartwatches use PPG to allow long-term unobtrusive monitoring of heart rate in free-living conditions. PPG-based heart rate measurement is unfortunately highly susceptible to motion artifacts, particularly when measured from the wrist. Traditional machine learning and deep learning approaches rely on tri-axial accelerometer data along with PPG to perform heart rate estimation. The conventional learning-based approaches have not addressed the need for device-specific modeling due to differences in hardware design among PPG devices. In this paper, we propose a novel end-to-end deep learning model to perform heart rate estimation using an 8-second input PPG signal. We evaluate the proposed model on the IEEE SPC 2015 dataset, achieving a mean absolute error of 3.36 ± 4.1 BPM for HR estimation on 12 subjects without requiring patient-specific training. We also studied the feasibility of applying transfer learning along with sparse retraining from a comprehensive in-house PPG dataset for heart rate estimation across PPG devices with different hardware design.
