Manojkumar Lakshmanan

GUI-based Pedicle Screw Planning on Fluoroscopic Images Utilizing Vertebral Segmentation

Jul 11, 2024

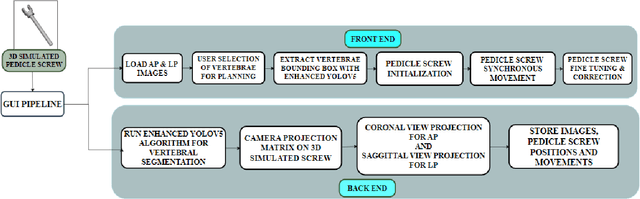

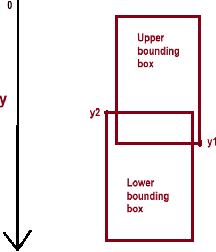

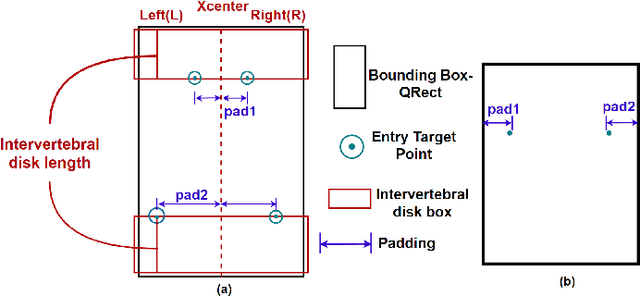

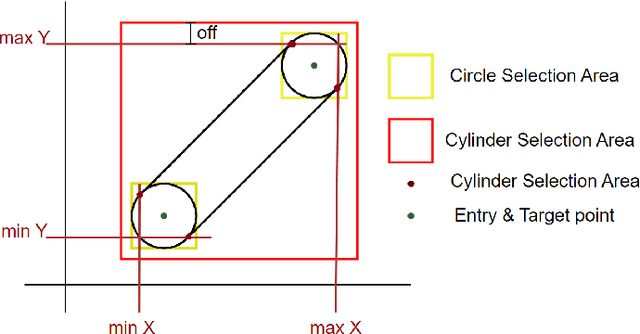

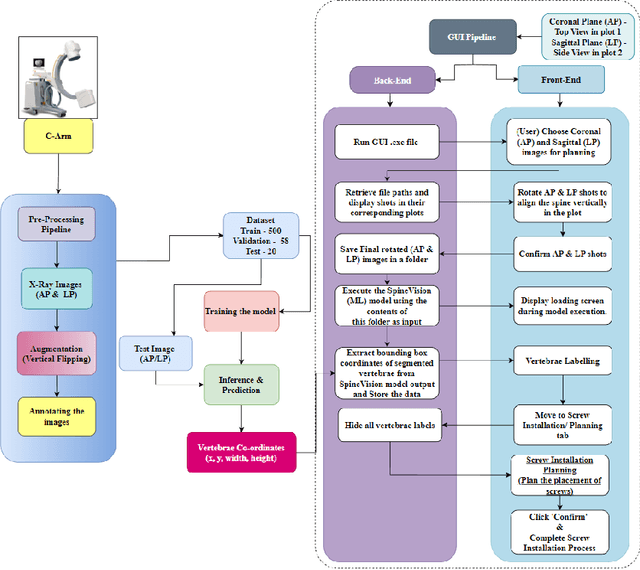

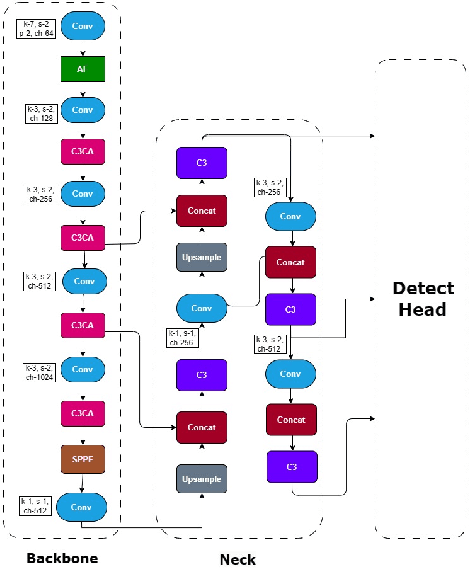

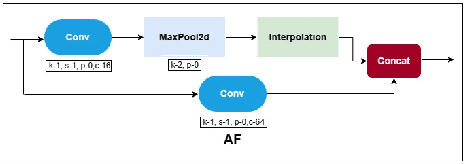

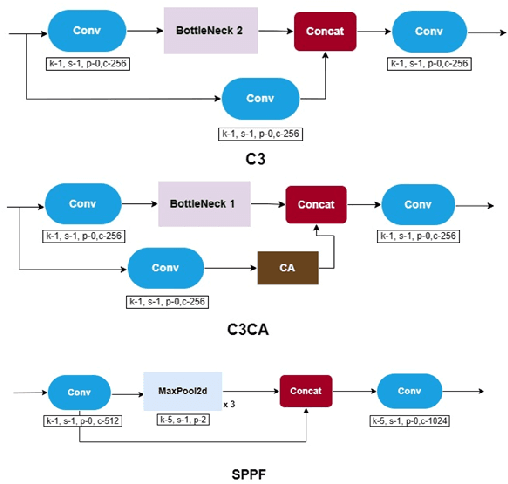

Abstract: The proposed work establishes a novel Graphical User Interface (GUI) framework designed primarily for intraoperative pedicle screw planning. The current planning workflow in image-guided surgery relies mainly on pre-operative CT planning. Intraoperative CT planning can be time-consuming and expensive and is therefore not common practice. Where efficiency and cost-effectiveness are paramount, planning on the fluoroscopic images acquired for image registration emerges as the optimal choice. The methodology proposed in this study employs a simulated 3D pedicle screw and calculates its coronal and sagittal projections for planning on anterior-posterior (AP) and lateral (LP) images. The initialization and placement of the pedicle screw are computed from the bounding box of the vertebral segmentation, which is obtained with an enhanced YOLOv5. The GUI front end allows surgeons or medical practitioners to efficiently choose, set up, and dynamically maneuver the pedicle screw on the AP and LP images. This is based on a novel feature called synchronous planning, which correlates the pedicle screw across the coronal and sagittal planes. The correlation uses projective correspondence to ensure that any movement of the pedicle screw in either the AP or the LP image is reflected in the other image. The proposed GUI framework is a time-efficient and cost-effective tool for synchronizing and planning the movement of pedicle screws during intraoperative surgical procedures.
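The synchronous planning idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes ideal orthographic projections, a patient frame with x = left-right, y = anterior-posterior and z = inferior-superior, and a screw modelled only by its entry and tip points. The names `Screw3D`, `project_ap`, and `project_lateral` are hypothetical.

```python
import numpy as np

# Assumed axes (not from the paper): x = left-right, y = anterior-posterior,
# z = inferior-superior. The AP (coronal) view drops y; the lateral
# (sagittal) view drops x. Both views therefore share the z coordinate.

def project_ap(point):
    """Orthographic projection of a 3D point onto the AP image plane (x, z)."""
    return np.array([point[0], point[2]])

def project_lateral(point):
    """Orthographic projection of a 3D point onto the lateral image plane (y, z)."""
    return np.array([point[1], point[2]])

class Screw3D:
    """Hypothetical simulated screw, stored as 3D entry and tip points."""

    def __init__(self, entry, tip):
        self.entry = np.asarray(entry, dtype=float)
        self.tip = np.asarray(tip, dtype=float)

    def move_in_ap(self, dx, dz):
        """Drag in the AP image: changes x and z of the 3D screw, y is kept."""
        delta = np.array([dx, 0.0, dz])
        self.entry = self.entry + delta
        self.tip = self.tip + delta

    def move_in_lateral(self, dy, dz):
        """Drag in the lateral image: changes y and z of the 3D screw, x is kept."""
        delta = np.array([0.0, dy, dz])
        self.entry = self.entry + delta
        self.tip = self.tip + delta

    def projections(self):
        """Recompute both 2D overlays from the single 3D screw state."""
        return {
            "ap": (project_ap(self.entry), project_ap(self.tip)),
            "lateral": (project_lateral(self.entry), project_lateral(self.tip)),
        }

# Usage: a drag in the AP image also shifts the lateral overlay along z.
screw = Screw3D(entry=[10.0, 0.0, 50.0], tip=[5.0, 40.0, 48.0])
screw.move_in_ap(dx=2.0, dz=3.0)
views = screw.projections()
print(views["ap"], views["lateral"])
```

Keeping one 3D screw as the single source of truth and re-projecting after every edit is what keeps the two views consistent in this sketch. A real C-arm would need calibrated perspective projections rather than the simplified orthographic ones assumed here.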

Spine Vision X-Ray Image based GUI Planning of Pedicle Screws Using Enhanced YOLOv5 for Vertebrae Segmentation

Jul 11, 2024

Abstract: In this paper, we propose an innovative Graphical User Interface (GUI) aimed at improving preoperative planning and intra-operative guidance for precise spinal screw placement through vertebrae segmentation. The methodology encompasses both front-end and back-end computations. The front end comprises a GUI that allows surgeons to precisely adjust the placement of screws on X-ray images, thereby improving the simulation of surgical screw insertion in the patient's spine. The back-end processing involves several steps, including acquiring spinal X-ray images, applying pre-processing techniques to reduce noise, and training a neural network model to achieve real-time segmentation of the vertebrae. The integration of vertebral segmentation in the GUI ensures precise screw placement, reducing complications such as nerve injury and ultimately improving surgical outcomes. Spine-Vision provides a comprehensive solution with innovative features such as synchronous AP-LP planning, accurate screw positioning via vertebrae segmentation, effective screw visualization, and dynamic position adjustments. This X-ray image-based GUI workflow emerges as a valuable tool, enhancing precision and safety in spinal screw placement and planning procedures.

A Hybrid-Layered System for Image-Guided Navigation and Robot Assisted Spine Surgery

Jun 07, 2024

Abstract: In response to the growing demand for precise and affordable solutions for Image-Guided Spine Surgery (IGSS), this paper presents a comprehensive development of a Robot-Assisted and Navigation-Guided IGSS System. The endeavor involves integrating cutting-edge technologies to attain the required surgical precision and limit user radiation exposure, thereby addressing the limitations of manual surgical methods. We propose an IGSS workflow and system architecture employing a hybrid-layered approach, combining modular and integrated system architectures in distinctive layers to develop an affordable system for seamless integration, scalability, and reconfigurability. We developed and integrated the system and extensively tested it on phantoms and cadavers. On phantoms, the proposed system's accuracy is 1.020 mm using navigation guidance and 1.11 mm using robot assistance. Cadaveric validation showed similar performance: as per the Gertzbein-Robbins scale, 84% of screw placements were grade A and 10% were grade B using navigation guidance, and 90% were grade A and 10% were grade B using robot assistance, proving its efficacy for an IGSS. The evaluated performance is adequate for an IGSS and on par with the existing systems in the literature and those commercially available. The user radiation is lower than in the literature, given that the system requires only an average of 3 C-Arm images per pedicle screw placement and verification.

* 6 Pages, 4 Figures, Published in IEEE SII Conference

Immersive Virtual Reality Platform for Robot-Assisted Antenatal Ultrasound Scanning

Sep 07, 2023

Abstract: Maternal health remains a pervasive challenge in developing and underdeveloped countries. Inadequate access to basic antenatal Ultrasound (US) examinations, limited resources such as primary health services and infrastructure, and a lack of skilled healthcare professionals are the major concerns. To improve the quality of maternal care, robot-assisted antenatal US systems with teleoperable and autonomous capabilities were introduced. However, the existing teleoperation systems rely on standard video stream-based approaches that are constrained by limited immersion and scene awareness. Also, there is no prior work on autonomous antenatal robotic US systems that automate standardized scanning protocols. To that end, this paper introduces a novel Virtual Reality (VR) platform for robotic antenatal ultrasound, which enables sonologists to control a robotic arm over a wired network. The effectiveness of the system is enhanced by providing a reconstructed 3D view of the environment and immersing the user in a VR space. The system also uses 3D models to facilitate a better understanding of the anatomical surfaces when performing pragmatic scans. Further, the proposed robotic system has autonomous capabilities; under the supervision of the sonologist, it can perform the standard six-step approach for obstetric US scanning recommended by the ISUOG. Using a 23-week fetal phantom, the proposed system was demonstrated to technology and academia experts at MEDICA 2022 as part of the KUKA Innovation Award. Their positive feedback supports the feasibility of the system and gave insight into the improvements needed to make it clinically viable.
