Shuyang Wang

A Sequential Optimal Learning Approach to Automated Prompt Engineering in Large Language Models

Jan 07, 2025

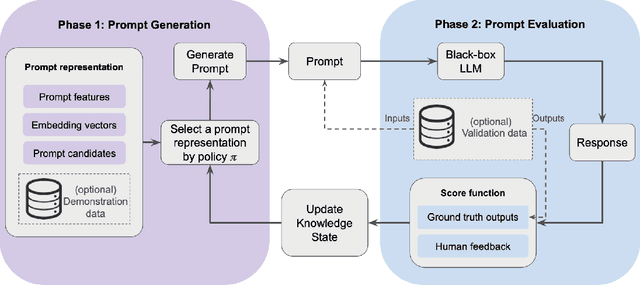

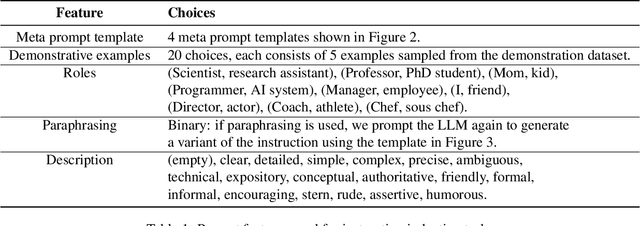

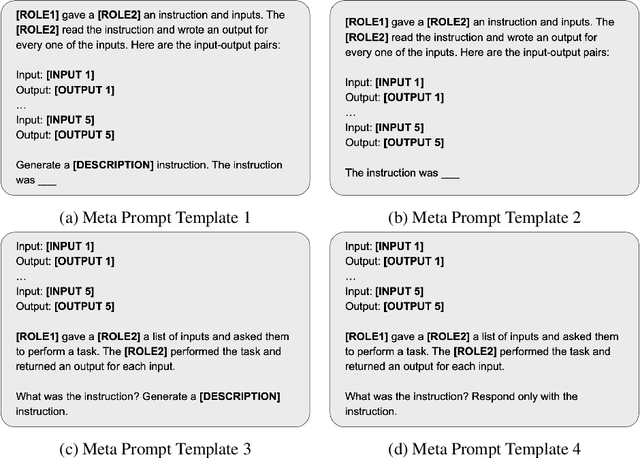

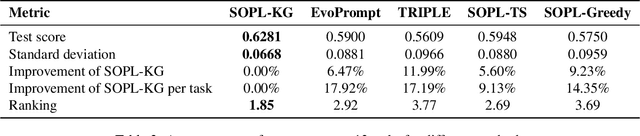

Abstract: Designing effective prompts is essential to guiding large language models (LLMs) toward desired responses. Automated prompt engineering aims to reduce reliance on manual effort by streamlining the design, refinement, and optimization of natural language prompts. This paper proposes an optimal learning framework for automated prompt engineering, designed to sequentially identify effective prompt features while efficiently allocating a limited evaluation budget. We introduce a feature-based method to express prompts, which significantly broadens the search space. Bayesian regression is employed to utilize correlations among similar prompts, accelerating the learning process. To efficiently explore the large space of prompt features for a high-quality prompt, we adopt the forward-looking Knowledge-Gradient (KG) policy for sequential optimal learning. The KG policy is computed efficiently by solving mixed-integer second-order cone optimization problems, making it scalable and capable of accommodating prompts characterized only through constraints. We demonstrate that our method significantly outperforms a set of benchmark strategies assessed on instruction induction tasks. The results highlight the advantages of using the KG policy for prompt learning given a limited evaluation budget. Our framework provides a solution to deploying automated prompt engineering in a wider range of applications where prompt evaluation is costly.

A Mirror Descent Perspective of Smoothed Sign Descent

Oct 18, 2024

Abstract: Recent work by Woodworth et al. (2020) shows that the optimization dynamics of gradient descent for overparameterized problems can be viewed as low-dimensional dual dynamics induced by a mirror map, explaining the implicit regularization phenomenon from the mirror descent perspective. However, the methodology does not apply to algorithms where update directions deviate from true gradients, such as ADAM. We use the mirror descent framework to study the dynamics of smoothed sign descent with a stability constant $\varepsilon$ for regression problems. We propose a mirror map that establishes equivalence to dual dynamics under some assumptions. By studying dual dynamics, we characterize the convergent solution as an approximate KKT point of minimizing a Bregman divergence style function, and show the benefit of tuning the stability constant $\varepsilon$ to reduce the KKT error.

HalluAudio: Hallucinating Frequency as Concepts for Few-Shot Audio Classification

Feb 27, 2023

Abstract: Few-shot audio classification is an emerging topic that is attracting growing attention from the research community. Most existing work ignores the specific form of the audio spectrogram and focuses largely on the embedding space borrowed from image tasks. In this work, we aim to take advantage of this special audio format and propose a new method that hallucinates high-frequency and low-frequency parts as structured concepts. Extensive experiments on ESC-50 and our curated balanced Kaggle18 dataset show that the proposed method outperforms the baseline by a notable margin. The way our method hallucinates high-frequency and low-frequency parts also makes it interpretable and opens up new potential for few-shot audio classification.
