Shubham Deshmukh

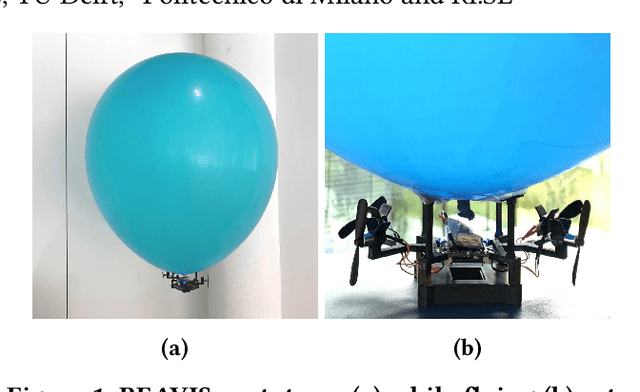

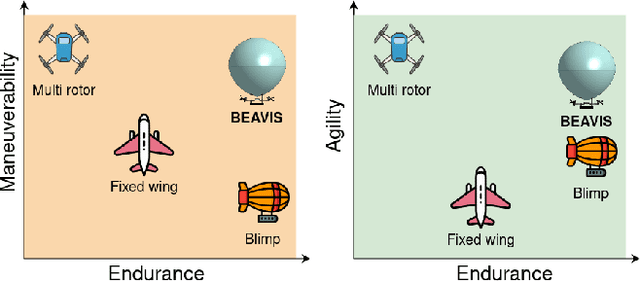

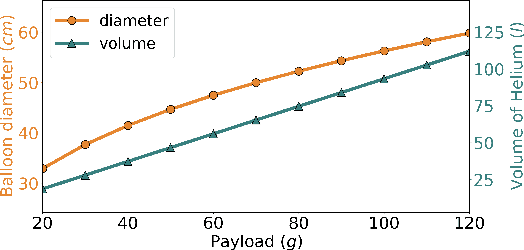

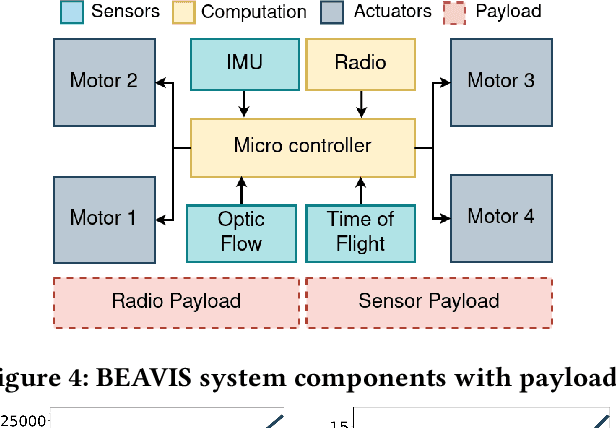

BEAVIS: Balloon Enabled Aerial Vehicle for IoT and Sensing

Aug 02, 2023

Abstract: UAVs are becoming versatile and valuable platforms for various applications. However, the main limitation is their flying time. We present BEAVIS, a novel aerial robotic platform striking an unparalleled trade-off between the manoeuvrability of drones and the long-lasting capacity of blimps. BEAVIS scores highly in applications where drones enjoy unconstrained mobility yet suffer from limited lifetime. A nonlinear flight controller exploiting novel, unexplored aerodynamic phenomena to regulate the ambient pressure and enable all translational and yaw degrees of freedom is proposed without direct actuation in the vertical direction. BEAVIS has built-in rotor fault detection and tolerance. We explain the design and the necessary background in detail. We verify the dynamics of BEAVIS and demonstrate its distinct advantages, such as agility, over existing platforms, including degrees of freedom akin to a drone with 11.36x increased lifetime. We exemplify the potential of BEAVIS to become an invaluable platform for many applications.

Sign Language Detection

Sep 08, 2022

Abstract: With the advancements in computer vision techniques, classifying images based on their features has become a major task and a necessity. In this project we propose two models: the first performs feature extraction and classification using ORB and SVM, and the second uses a CNN architecture. The goal of the project is to understand the concepts behind feature extraction and image classification. The trained CNN model will also be converted to tflite format for Android development.

Suspicious and Anomaly Detection

Sep 08, 2022

Abstract: In this project we propose a CNN architecture to detect anomalous and suspicious activities. The activities chosen for the project are running, jumping, and kicking in public places, and carrying a gun, bat, or knife in public places. We compare the trained model with pre-existing models such as YOLO, VGG16, and VGG19. The trained model is then deployed for real-time detection, and the .tflite version of the trained .h5 model is used to build an Android classification app.

SANIP: Shopping Assistant and Navigation for the visually impaired

Sep 08, 2022

Abstract: The proposed shopping assistant model, SANIP, is intended to help blind persons detect hand-held objects and get a video feedback of the information retrieved from the detected and recognized objects. The proposed model consists of three Python models: custom object detection, text detection, and barcode detection. For detection of hand-held objects, we created our own custom dataset comprising daily goods such as Parle-G, Tide, and Lays. We also collected images of cart and exit signs, since it is essential for any person to be able to use a cart and to notice the exit sign in case of emergency. For the other two models, the retrieved text and barcode information is converted from text to speech and relayed to the blind person. The model was used to detect the objects it was trained on and succeeded in detecting and recognizing them with good accuracy and precision.
