Shivakant Mishra

Understanding the Impact of Culture in Assessing Helpfulness of Online Reviews

Apr 27, 2023

Abstract: Online reviews have become essential for users to make informed decisions in everyday tasks ranging from planning summer vacations to purchasing groceries and making financial investments. A key problem in using online reviews is the overabundance of reviews, which overwhelms users. As a result, recommendation systems that assess the helpfulness of reviews are being developed. This paper argues that cultural background is an important feature that affects the nature of the reviews a user writes, and that it must be considered as a feature when assessing the helpfulness of online reviews. The paper provides an in-depth study of the differences between online reviews written by users from different cultural backgrounds and of how incorporating culture as a feature can lead to better review helpfulness recommendations. In particular, we analyze online reviews originating from two distinct cultural spheres, namely Arabic and Western cultures, for two different products, hotels and books. Our analysis demonstrates that the nature of the reviews users write differs based on their cultural backgrounds, and that this difference varies with the specific product being reviewed. Finally, we develop six review helpfulness recommendation models, which demonstrate that taking culture into account leads to better recommendations.

Deradicalizing YouTube: Characterization, Detection, and Personalization of Religiously Intolerant Arabic Videos

Jun 30, 2022

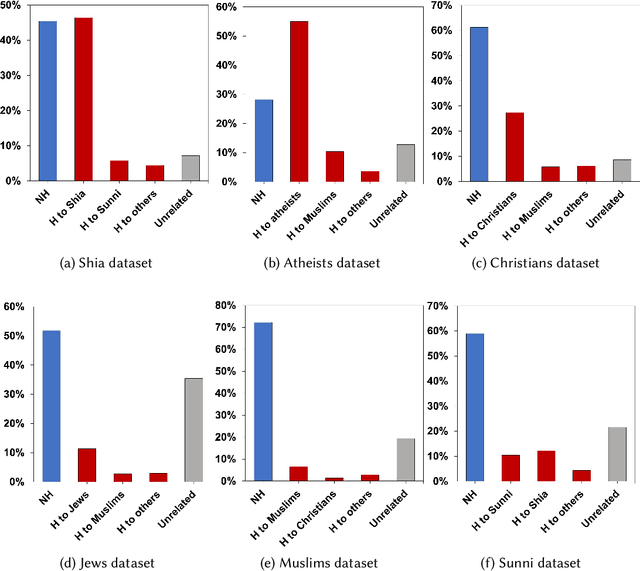

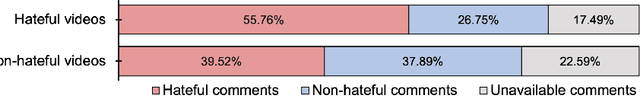

Abstract: Growing evidence suggests that YouTube's recommendation algorithm plays a role in online radicalization by surfacing extreme content. Radical Islamist groups, in particular, have been profiting from the global appeal of YouTube to disseminate hate and jihadist propaganda. In this quantitative, data-driven study, we investigate the prevalence of religiously intolerant Arabic YouTube videos, the tendency of the platform to recommend such videos, and how these recommendations are affected by demographics and watch history. Using a deep learning classifier we developed to detect hateful videos and a large-scale dataset of over 350K videos, we find that Arabic videos targeting religious minorities are particularly prevalent in search results (30%) and first-level recommendations (21%), and that 15% of all captured recommendations point to hateful videos. Our personalized audit experiments suggest that gender and religious identity can substantially affect the extent of exposure to hateful content. Our results contribute vital insights into the phenomenon of online radicalization and can help curb harmful online content.

Hateful People or Hateful Bots? Detection and Characterization of Bots Spreading Religious Hatred in Arabic Social Media

Aug 29, 2019

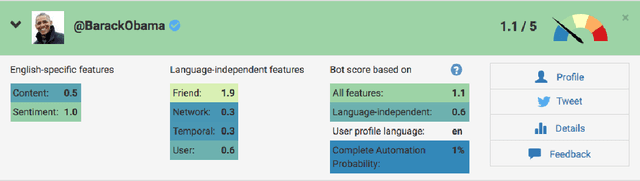

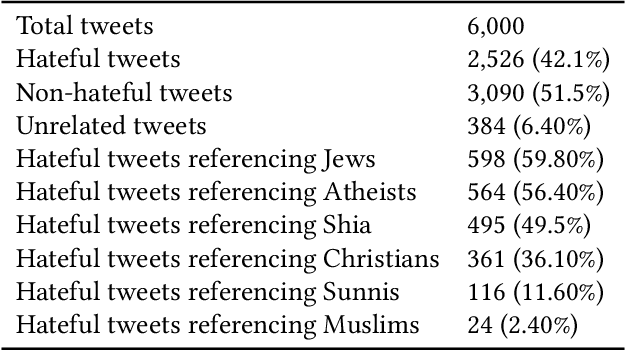

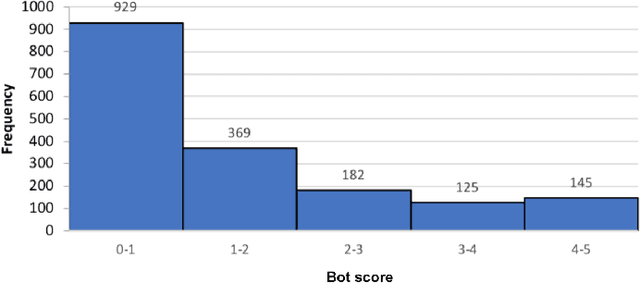

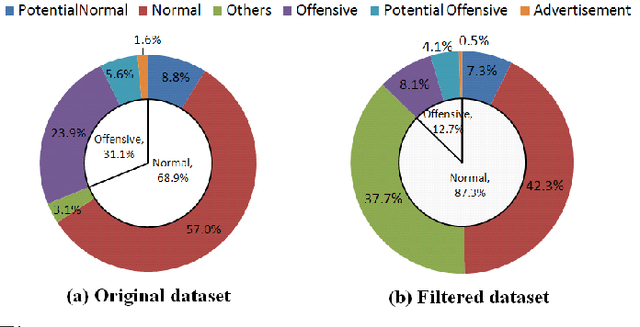

Abstract: The Arabic Twitter space is crawling with bots that fuel political feuds, spread misinformation, and proliferate sectarian rhetoric. While efforts to analyze and detect English bots have long existed, Arabic bot detection and characterization remain largely understudied. In this work, we contribute new insights into the role of bots in spreading religious hatred on Arabic Twitter and introduce a novel regression model that can accurately identify Arabic-language bots. Our assessment shows that existing tools that are highly accurate in detecting English bots do not perform as well on Arabic bots. We identify possible reasons for this poor performance, perform a thorough analysis of linguistic, content, behavioral, and network features, and report on the most informative features that distinguish Arabic bots from humans, as well as the differences between Arabic and English bots. Our results mark an important step toward understanding the behavior of malicious bots on Arabic Twitter and pave the way for more effective Arabic bot detection tools.

SafeVchat: Detecting Obscene Content and Misbehaving Users in Online Video Chat Services

Jan 17, 2011

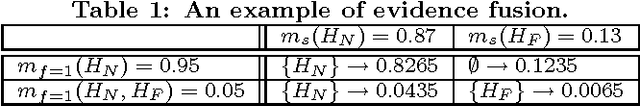

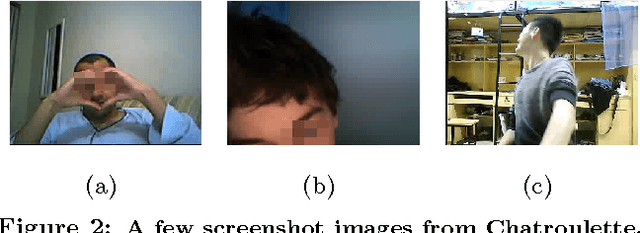

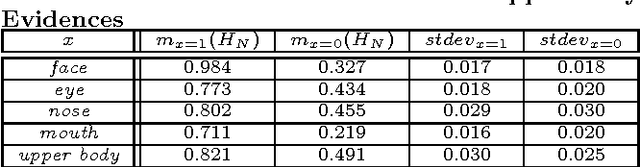

Abstract: Online video chat services such as Chatroulette, Omegle, and vChatter, which randomly match pairs of users in video chat sessions, are fast becoming very popular, with over a million users per month in the case of Chatroulette. A key problem in such systems is the presence of flashers and obscene content. This problem is especially acute given the presence of minors in such systems. This paper presents SafeVchat, a novel solution to the problem of flasher detection that employs an array of image detection algorithms. A key contribution of the paper is the way the results of the individual detectors are fused, based on Dempster-Shafer Theory, into an overall decision classifying the user as misbehaving or not. The paper also introduces a novel motion-based skin detection method that achieves significantly higher recall and better precision. The proposed methods have been evaluated on real-world data and image traces obtained from Chatroulette.com.
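The abstract states that SafeVchat fuses the outputs of several detectors using Dempster-Shafer Theory. As a rough illustration of that idea only, the sketch below implements the standard Dempster rule of combination for two sources over a two-element frame (misbehaving vs. normal). The frame labels, the detector names (`skin_detector`, `face_detector`), and every mass value are hypothetical and are not taken from the paper, which does not state here how each detector's output is turned into a mass function.

```python
from itertools import product

# Hypothetical frame of discernment: a user is either misbehaving or normal.
MISBEHAVING = "misbehaving"
NORMAL = "normal"
THETA = frozenset({MISBEHAVING, NORMAL})  # the whole frame, i.e. "uncertain"


def combine(m1, m2):
    """Fuse two mass functions with Dempster's rule of combination.

    A mass function maps frozenset focal elements to non-negative masses
    that sum to 1.
    """
    combined = {}
    conflict = 0.0  # K: total mass the two sources assign to disjoint sets
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    # Renormalise by 1 - K so the fused masses again sum to 1.
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


def belief(m, hypothesis):
    """Bel(A): total mass of focal elements that are subsets of A."""
    return sum(v for s, v in m.items() if s <= hypothesis)


# Hypothetical detector outputs (values invented for illustration only).
skin_detector = {frozenset({MISBEHAVING}): 0.6, THETA: 0.4}
face_detector = {
    frozenset({MISBEHAVING}): 0.5,
    frozenset({NORMAL}): 0.3,
    THETA: 0.2,
}

fused = combine(skin_detector, face_detector)
for focal, mass in fused.items():
    print(sorted(focal), round(mass, 3))
print("Bel(misbehaving) =", round(belief(fused, frozenset({MISBEHAVING})), 3))
```

With these made-up masses the conflict K is 0.6 × 0.3 = 0.18, and after renormalisation Bel(misbehaving) is 0.62 / 0.82, about 0.756. A real system would additionally need a decision rule (for example, a threshold on belief) to turn the fused masses into a misbehaving/normal label; that choice is likewise an assumption here.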
