Deradicalizing YouTube: Characterization, Detection, and Personalization of Religiously Intolerant Arabic Videos

Paper and Code

Jun 30, 2022

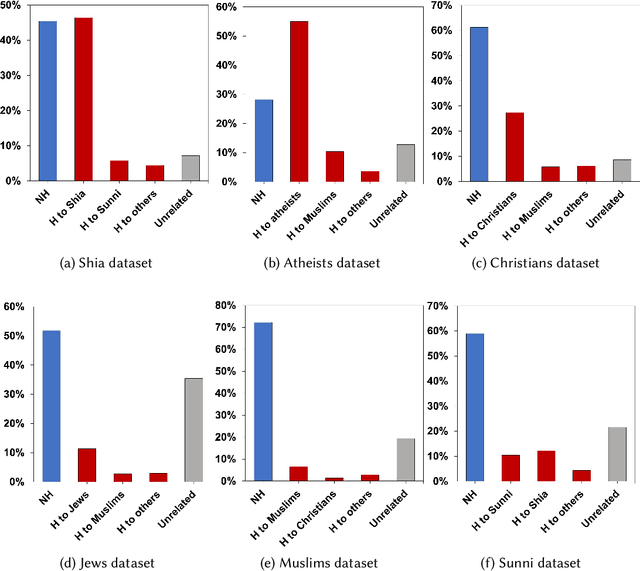

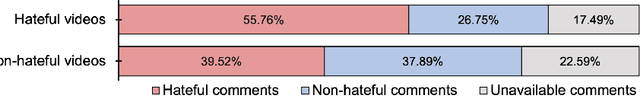

Growing evidence suggests that YouTube's recommendation algorithm plays a role in online radicalization via surfacing extreme content. Radical Islamist groups, in particular, have been profiting from the global appeal of YouTube to disseminate hate and jihadist propaganda. In this quantitative, data-driven study, we investigate the prevalence of religiously intolerant Arabic YouTube videos, the tendency of the platform to recommend such videos, and how these recommendations are affected by demographics and watch history. Based on our deep learning classifier developed to detect hateful videos and a large-scale dataset of over 350K videos, we find that Arabic videos targeting religious minorities are particularly prevalent in search results (30%) and first-level recommendations (21%), and that 15% of overall captured recommendations point to hateful videos. Our personalized audit experiments suggest that gender and religious identity can substantially affect the extent of exposure to hateful content. Our results contribute vital insights into the phenomenon of online radicalization and facilitate curbing online harmful content.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge