Sherif Sakr

AutoMLBench: A Comprehensive Experimental Evaluation of Automated Machine Learning Frameworks

Apr 18, 2022

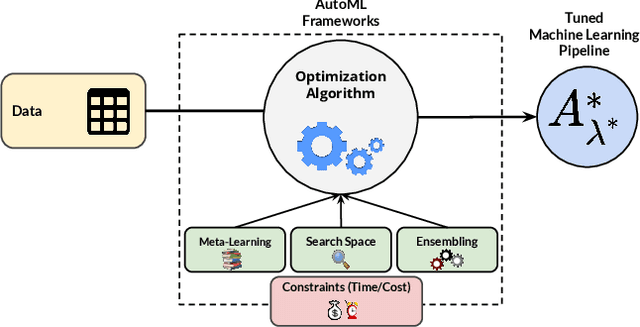

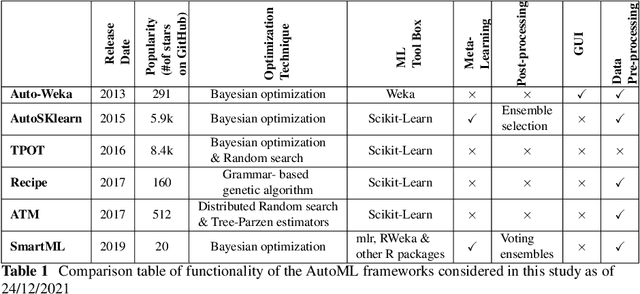

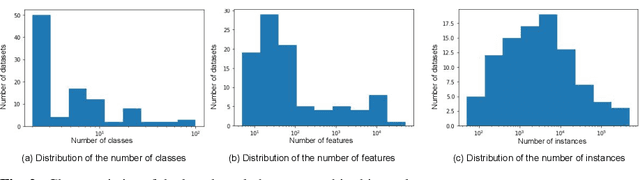

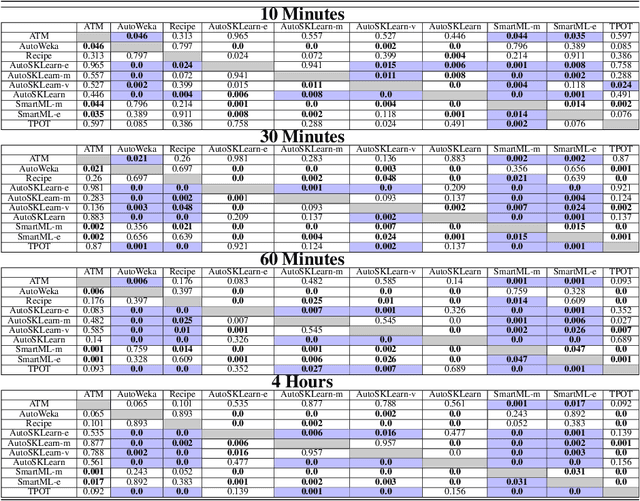

Abstract: Nowadays, machine learning plays a crucial role in harnessing the massive amounts of data that we produce every day in our digital world. With the booming demand for machine learning applications, it has been recognized that the number of knowledgeable data scientists cannot scale with the growing data volumes and application needs. In response to this demand, several automated machine learning (AutoML) techniques and frameworks have been developed to fill the gap in human expertise by automating the process of building machine learning pipelines. In this study, we present a comprehensive evaluation and comparison of the performance characteristics of six popular AutoML frameworks, namely Auto-Weka, AutoSKlearn, TPOT, Recipe, ATM, and SmartML, across 100 data sets from established AutoML benchmark suites. Our experimental evaluation considers several aspects, including the performance impact of design decisions such as time budget, size of search space, meta-learning, and ensemble construction. The results of our study reveal various interesting insights that can significantly guide and impact the design of AutoML frameworks.

To tune or not to tune? An Approach for Recommending Important Hyperparameters

Aug 30, 2021

Abstract: Novel technologies in automated machine learning ease the complexity of algorithm selection and hyperparameter optimization. Hyperparameters are important because they significantly influence the performance of machine learning models. Many optimization techniques have achieved notable success in hyperparameter tuning and surpassed the performance of human experts. However, relying on such techniques as black-box algorithms can leave machine learning practitioners without insight into the relative importance of different hyperparameters. In this paper, we model the relationship between the performance of machine learning models and their hyperparameters to discover trends and gain insights, with empirical results based on six classifiers and 200 datasets. Our results enable users to decide whether it is worth conducting a possibly time-consuming tuning strategy, to focus on the most important hyperparameters, and to choose adequate hyperparameter spaces for tuning. The results of our experiments show that gradient boosting and AdaBoost outperform other classifiers across 200 problems. However, they need tuning to boost their performance. Overall, the results obtained from this study provide a quantitative basis to focus efforts toward guided automated hyperparameter optimization and contribute toward the development of better automated machine learning frameworks.

Automated Machine Learning: State-of-The-Art and Open Challenges

Jun 11, 2019

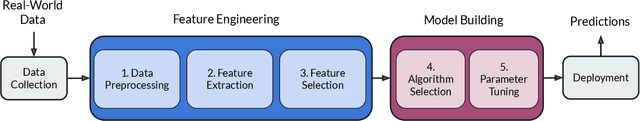

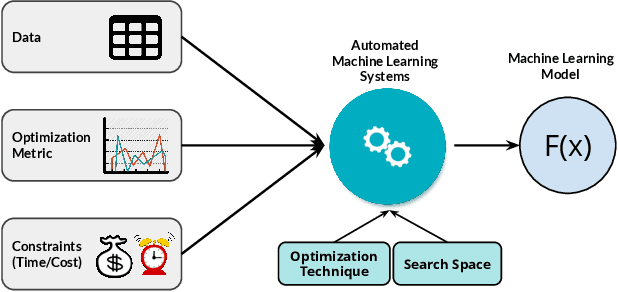

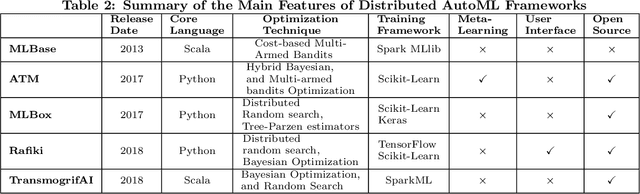

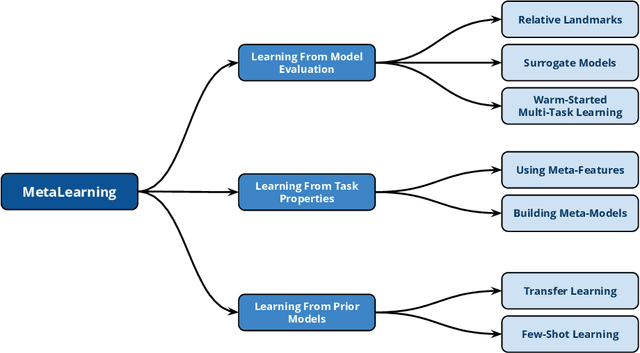

Abstract: With the continuous and vast increase in the amount of data in our digital world, it has been acknowledged that the number of knowledgeable data scientists cannot scale to address these challenges. Thus, there is a crucial need for automating the process of building good machine learning models. In the last few years, several techniques and frameworks have been introduced to tackle the challenge of automating the process of Combined Algorithm Selection and Hyper-parameter tuning (CASH) in the machine learning domain. The main aim of these techniques is to reduce the role of the human in the loop and fill the gap for non-expert machine learning users by playing the role of the domain expert. In this paper, we present a comprehensive survey of the state-of-the-art efforts in tackling the CASH problem. In addition, we highlight the research work on automating the other steps of the full complex machine learning pipeline (AutoML), from data understanding to model deployment. Furthermore, we provide comprehensive coverage of the various tools and frameworks that have been introduced in this domain. Finally, we discuss some of the research directions and open challenges that need to be addressed in order to achieve the vision and goals of the AutoML process.
