Shengshi Yao

Variational Speech Waveform Compression to Catalyze Semantic Communications

Dec 13, 2022

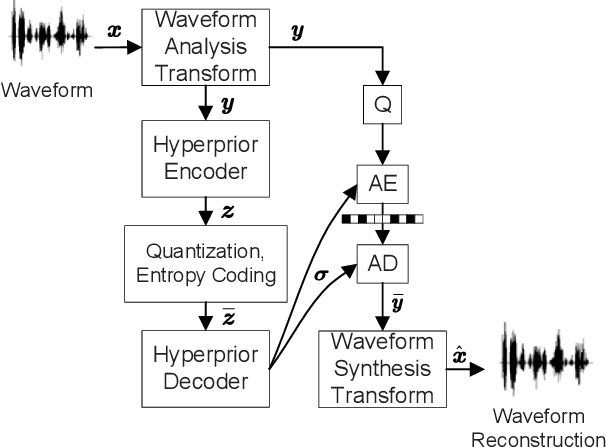

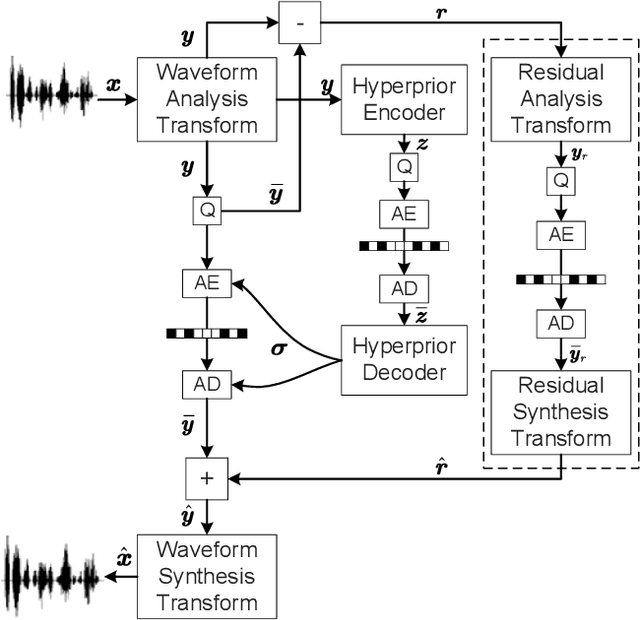

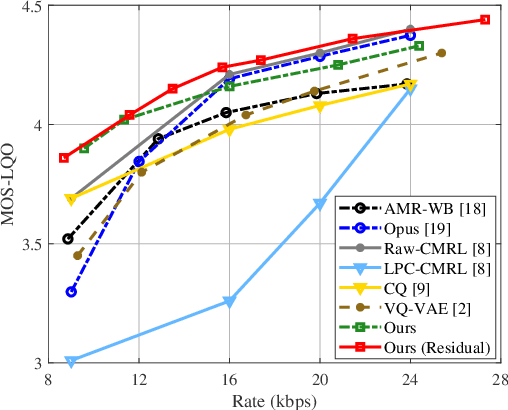

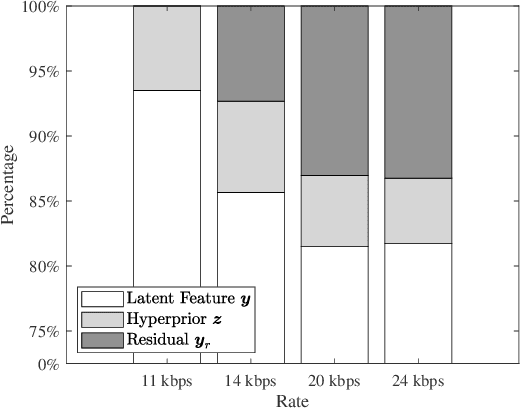

Abstract: We propose a novel neural waveform compression method to catalyze emerging speech semantic communications. By introducing a nonlinear transform and variational modeling, we effectively capture the dependencies within speech frames and estimate the probability distribution of the speech features more accurately, which leads to better compression performance. In particular, the speech signals are analyzed and synthesized by a pair of nonlinear transforms, yielding latent features. An entropy model with a hyperprior is built to capture the probability distribution of the latent features, followed by quantization and entropy coding. The proposed waveform codec can be optimized flexibly toward an arbitrary rate; another appealing feature is that it can easily be optimized for any differentiable loss function, including the perceptual losses used in semantic communications. To further improve fidelity, we incorporate residual coding to mitigate the degradation caused by quantization distortion in the latent space. Results indicate that, at the same performance, the proposed method saves up to 27% of the coding rate compared with the widely used adaptive multi-rate wideband (AMR-WB) codec and with emerging neural waveform coding methods.
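
As a rough illustration of the rate-distortion objective described above, the following Python sketch estimates the bit cost of quantized latents under a zero-mean Gaussian entropy model whose per-element scales would, in a hyperprior model, be predicted from side information. The function names, the Gaussian choice, and the MSE distortion are assumptions for illustration, not the authors' implementation; a full hyperprior codec would also count the bits spent on the hyper-latent itself.

```python
import math

# Illustrative sketch only: names and modeling choices are assumptions,
# not the paper's implementation.


def gaussian_cdf(x: float, scale: float) -> float:
    """CDF of a zero-mean Gaussian with standard deviation `scale`."""
    return 0.5 * (1.0 + math.erf(x / (scale * math.sqrt(2.0))))


def bits_for_symbol(q: int, scale: float) -> float:
    """Estimated bits for integer symbol q under a zero-mean Gaussian
    discretized to unit-width bins: -log2 P(q - 0.5 < y <= q + 0.5)."""
    p = gaussian_cdf(q + 0.5, scale) - gaussian_cdf(q - 0.5, scale)
    p = max(p, 1e-9)  # floor the probability so far-tail symbols stay finite
    return -math.log2(p)


def rate_distortion_loss(latents, scales, x, x_hat, lam):
    """Return (R + lam * D, R, D) with R in bits and D as MSE.

    `scales` stands in for the per-element scales that a hyperprior
    decoder would predict (assumption). Rounding is used here; training
    typically replaces it with a differentiable proxy such as additive
    uniform noise.
    """
    q = [round(y) for y in latents]  # hard scalar quantization
    rate = sum(bits_for_symbol(v, s) for v, s in zip(q, scales))
    mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    return rate + lam * mse, rate, mse


loss, rate, mse = rate_distortion_loss(
    latents=[0.2, -0.4], scales=[1.0, 1.0],
    x=[1.0, 2.0], x_hat=[1.0, 1.0], lam=10.0,
)
print(f"loss={loss:.3f} rate={rate:.3f} bits mse={mse:.3f}")
```

In this sketch, sweeping `lam` trades rate against distortion, which mirrors the claim that the codec can be optimized toward an arbitrary rate; replacing the MSE term with another differentiable loss is where a perceptual objective would enter.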

Wireless Deep Speech Semantic Transmission

Nov 04, 2022

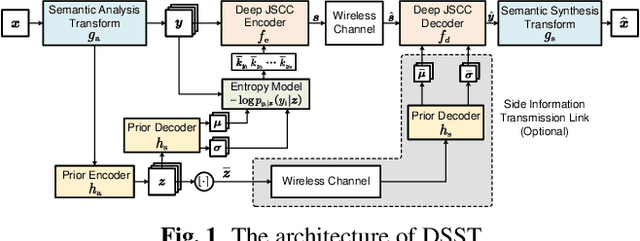

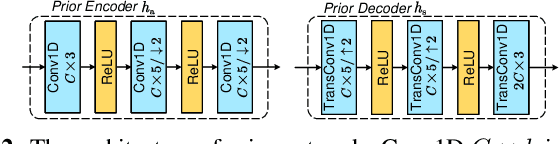

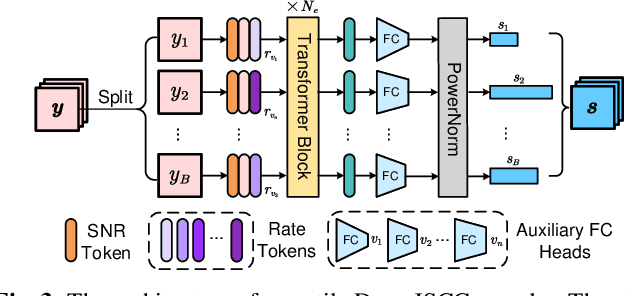

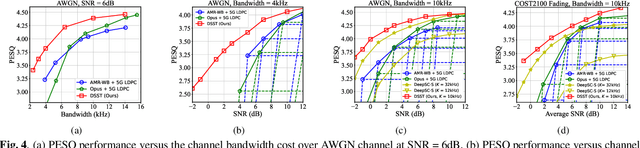

Abstract: In this paper, we propose a new class of high-efficiency semantic coded transmission methods for end-to-end speech transmission over wireless channels. We name the whole system deep speech semantic transmission (DSST). Specifically, we introduce a nonlinear transform to map the speech source to a semantic latent space and feed the semantic features into a source-channel encoder to generate the channel-input sequence. Guided by the idea of variational modeling, we build an entropy model on the latent space to estimate how importance varies across the semantic feature embeddings. Accordingly, semantic features of different importance can be allocated different coding rates, which maximizes the system coding gain. Furthermore, we introduce a channel signal-to-noise ratio (SNR) adaptation mechanism so that a single model can be applied across various channel states. End-to-end optimization of the model yields a flexible rate-distortion (RD) trade-off, supporting versatile wireless speech semantic transmission. Experimental results verify that our DSST system clearly outperforms current engineered speech transmission systems on both objective and subjective metrics. Compared with existing neural speech semantic transmission methods, our model saves up to 75% of channel bandwidth cost while achieving the same quality. An intuitive comparison of audio demos can be found at https://ximoo123.github.io/DSST.
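
The entropy-guided rate allocation described above can be sketched as splitting a fixed channel-symbol budget across feature embeddings in proportion to their estimated entropy. Proportional allocation with largest-remainder rounding is an illustrative assumption; the abstract does not specify how DSST maps entropy to coding rate, and `allocate_symbols` is a hypothetical name.

```python
def allocate_symbols(entropies, total_symbols):
    """Split `total_symbols` channel symbols across feature embeddings in
    proportion to their estimated entropies (hypothetical helper).

    Uses largest-remainder rounding so the allocation sums exactly to
    the budget.
    """
    if any(h < 0 for h in entropies):
        raise ValueError("entropies must be non-negative")
    total_h = sum(entropies)
    if total_h <= 0:
        raise ValueError("entropies must sum to a positive value")
    shares = [h / total_h * total_symbols for h in entropies]
    alloc = [int(s) for s in shares]  # floor of each non-negative share
    leftover = total_symbols - sum(alloc)
    # Hand the remaining symbols to the embeddings with the largest
    # fractional remainders.
    order = sorted(range(len(shares)),
                   key=lambda i: shares[i] - alloc[i], reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc


# Embeddings with higher estimated entropy receive more channel symbols.
print(allocate_symbols([0.5, 1.7, 2.3], 16))
```

A practical system would likely also snap each count to the discrete set of lengths its encoder supports and let the SNR adaptation mechanism modulate the mapping; both are omitted from this sketch.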

Versatile Semantic Coded Transmission over MIMO Fading Channels

Oct 30, 2022

Abstract: Semantic communications have shown great potential to boost end-to-end transmission performance. To further improve system efficiency, in this paper we propose a class of novel semantic coded transmission (SCT) schemes over multiple-input multiple-output (MIMO) fading channels. In particular, we propose a high-efficiency SCT system that supports concurrent transmission of multiple streams, which can maximize the multiplexing gain of the end-to-end semantic communication system. By jointly considering the entropy distribution of the source semantic features and the wireless MIMO channel states, we design a spatial multiplexing mechanism that realizes adaptive coding rate allocation and stream mapping. As a result, source content and channel environment are seamlessly coupled, which maximizes the coding gain of the SCT system. Moreover, our SCT system is versatile: a single model can support various transmission rates. The whole model is optimized under the constraint of a transmission rate-distortion (RD) trade-off. Experimental results verify that our scheme substantially increases the throughput of the semantic communication system. It also outperforms traditional MIMO communication systems under realistic fading channels.
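
To illustrate why coupling the entropy distribution with the channel state matters, the sketch below pairs feature groups with spatial streams by rank (highest entropy to highest SNR) and counts how many channel uses the concurrent transmission needs, using each stream's Shannon capacity log2(1 + SNR) as an idealized per-use rate. The per-stream SNRs (which in practice might be derived from the channel's singular values), the capacity idealization, the example values, and the helper names are all assumptions for illustration, not the SCT scheme itself.

```python
import math


def map_features_to_streams(entropies, stream_snrs):
    """Pair feature groups with spatial streams by rank: the group with the
    largest entropy goes to the stream with the largest SNR.
    Returns {feature_index: stream_index} (hypothetical helper)."""
    if len(entropies) != len(stream_snrs):
        raise ValueError("need exactly one feature group per stream")
    feats = sorted(range(len(entropies)), key=lambda i: entropies[i], reverse=True)
    streams = sorted(range(len(stream_snrs)), key=lambda j: stream_snrs[j], reverse=True)
    return dict(zip(feats, streams))


def uses_needed(bits, snr_linear):
    """Channel uses to carry `bits` at the idealized rate log2(1 + SNR)."""
    if snr_linear <= 0:
        raise ValueError("SNR must be positive")
    return math.ceil(bits / math.log2(1.0 + snr_linear))


def concurrent_channel_uses(entropies, stream_snrs, mapping):
    """Streams run in parallel, so the slowest stream sets the total."""
    return max(uses_needed(entropies[f], stream_snrs[s]) for f, s in mapping.items())


entropies = [100.0, 300.0]  # bits per feature group (illustrative values)
snrs = [3.0, 15.0]          # linear per-stream SNRs (illustrative values)
matched = map_features_to_streams(entropies, snrs)
swapped = {0: 1, 1: 0}      # the opposite pairing, for comparison
print(concurrent_channel_uses(entropies, snrs, matched))  # rank-matched
print(concurrent_channel_uses(entropies, snrs, swapped))  # mismatched
```

Under this idealized max-load objective, rank matching is optimal: for any two groups, swapping a mismatched pairing never increases the larger of the two load ratios. The sketch ignores inter-stream interference and finite-blocklength effects.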
