Shengbang Fang

Open Set Synthetic Image Source Attribution

Aug 22, 2023

Abstract: AI-generated images have become increasingly realistic and have garnered significant public attention. While synthetic images are intriguing due to their realism, they also pose an important misinformation threat. To address this new threat, researchers have developed multiple algorithms to detect synthetic images and identify their source generators. However, most existing source attribution techniques are designed to operate in a closed-set scenario, i.e. they can only be used to discriminate between known image generators. By contrast, new image-generation techniques are rapidly emerging. To contend with this, there is a great need for open-set source attribution techniques that can identify when synthetic images have originated from new, unseen generators. To address this problem, we propose a new metric learning-based approach. Our technique works by learning transferable embeddings capable of discriminating between generators, even when they are not seen during training. An image is first assigned to a candidate generator, then is accepted or rejected based on its distance in the embedding space from known generators' learned reference points. Importantly, we identify that initializing our source attribution embedding network by pretraining it on image camera identification can improve our embeddings' transferability. Through a series of experiments, we demonstrate our approach's ability to attribute the source of synthetic images in open-set scenarios.
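The accept-or-reject step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the distance measure, how reference points are learned, or how the rejection threshold is set. The sketch assumes Euclidean distance, reference points computed as the mean of example embeddings, and a single global threshold. The names `centroid`, `attribute`, `gan_a`, and `diffusion_b` are hypothetical.

```python
import math

def centroid(vectors):
    """Mean of a list of equal-length embedding vectors (assumed reference point)."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def attribute(embedding, reference_points, threshold):
    """Assign an embedding to the nearest known generator, or reject it.

    Returns (name, distance); name is None when the nearest reference
    point is farther away than `threshold` (treated as an unseen generator).
    """
    best_name, best_dist = None, math.inf
    for name, ref in reference_points.items():
        d = math.dist(embedding, ref)  # Euclidean distance (assumption)
        if d < best_dist:
            best_name, best_dist = name, d
    if best_dist > threshold:
        return None, best_dist
    return best_name, best_dist

# Toy 2-D embeddings standing in for the output of an embedding network.
refs = {
    "gan_a": centroid([[0.0, 0.0], [0.2, 0.0]]),
    "diffusion_b": centroid([[5.0, 5.0], [5.0, 5.2]]),
}

known_name, _ = attribute([0.1, 0.1], refs, threshold=1.0)
unseen_name, _ = attribute([10.0, -10.0], refs, threshold=1.0)
```

In this toy setup the first query lies close to the `gan_a` reference point and is accepted, while the second is far from every reference point and is rejected as coming from an unknown source.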

Comprehensive Dataset of Synthetic and Manipulated Overhead Imagery for Development and Evaluation of Forensic Tools

May 09, 2023

Abstract: We present a first-of-its-kind dataset of overhead imagery for development and evaluation of forensic tools. Our dataset consists of real, fully synthetic and partially manipulated overhead imagery generated from a custom diffusion model trained on two sets of different zoom levels and on two sources of pristine data. We developed our model to support controllable generation of multiple manipulation categories including fully synthetic imagery conditioned on real and generated base maps, and location. We also support partial in-painted imagery with the same conditioning options and with several types of manipulated content. The data consist of raw images and ground truth annotations describing the manipulation parameters. We also report benchmark performance on several tasks supported by our dataset including detection of fully and partially manipulated imagery, manipulation localization and classification.

VideoFACT: Detecting Video Forgeries Using Attention, Scene Context, and Forensic Traces

Nov 28, 2022

Abstract: Fake videos represent an important misinformation threat. While existing forensic networks have demonstrated strong performance on image forgeries, recent results reported on the Adobe VideoSham dataset show that these networks fail to identify fake content in videos. In this paper, we propose a new network that is able to detect and localize a wide variety of video forgeries and manipulations. To overcome challenges that existing networks face when analyzing videos, our network utilizes forensic embeddings to capture traces left by manipulation, context embeddings to exploit forensic traces' conditional dependencies upon local scene content, and spatial attention provided by a deep, transformer-based attention mechanism. We create several new video forgery datasets and use these, along with publicly available data, to experimentally evaluate our network's performance. These results show that our proposed network is able to identify a diverse set of video forgeries, including those not encountered during training. Furthermore, our results reinforce recent findings that image forensic networks largely fail to identify fake content in videos.

Attacking Image Splicing Detection and Localization Algorithms Using Synthetic Traces

Nov 22, 2022

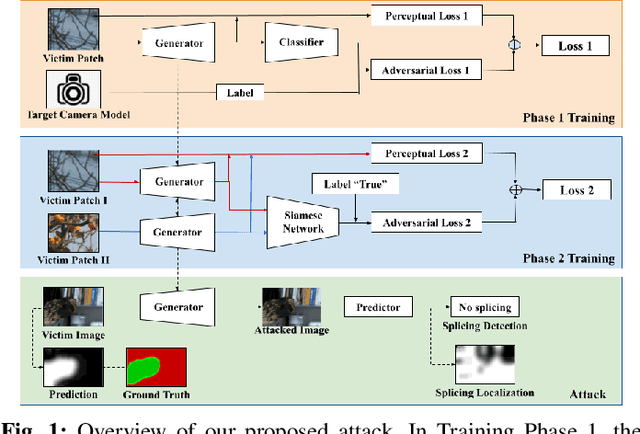

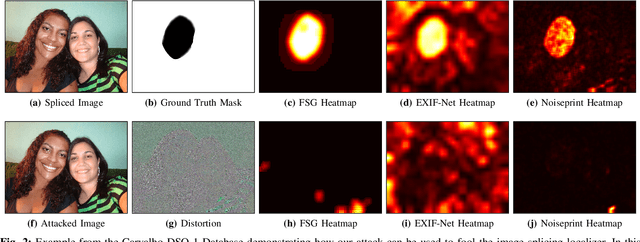

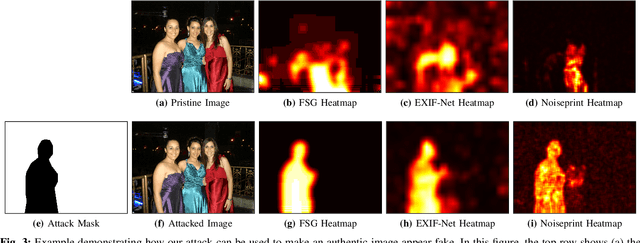

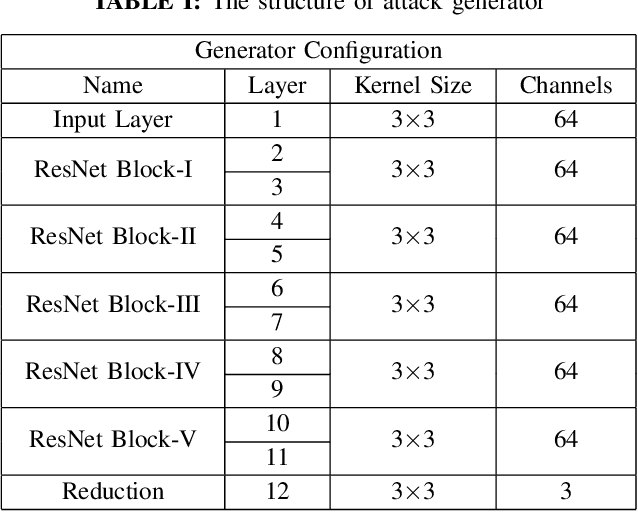

Abstract: Recent advances in deep learning have enabled forensics researchers to develop a new class of image splicing detection and localization algorithms. These algorithms identify spliced content by detecting localized inconsistencies in forensic traces using Siamese neural networks, either explicitly during analysis or implicitly during training. At the same time, deep learning has enabled new forms of anti-forensic attacks, such as adversarial examples and generative adversarial network (GAN) based attacks. Thus far, however, no anti-forensic attack has been demonstrated against image splicing detection and localization algorithms. In this paper, we propose a new GAN-based anti-forensic attack that is able to fool state-of-the-art splicing detection and localization algorithms such as EXIF-Net, Noiseprint, and Forensic Similarity Graphs. This attack operates by adversarially training an anti-forensic generator against a set of Siamese neural networks so that it is able to create synthetic forensic traces. Under analysis, these synthetic traces appear authentic and are self-consistent throughout an image. Through a series of experiments, we demonstrate that our attack is capable of fooling forensic splicing detection and localization algorithms without introducing visually detectable artifacts into an attacked image. Additionally, we demonstrate that our attack outperforms existing alternative attack approaches.
