Shayan Shams

Dental CLAIRES: Contrastive LAnguage Image REtrieval Search for Dental Research

Jun 27, 2023

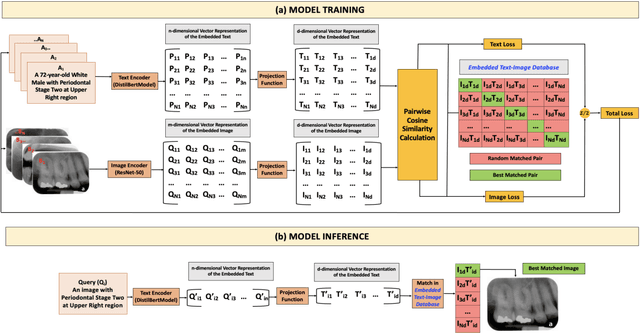

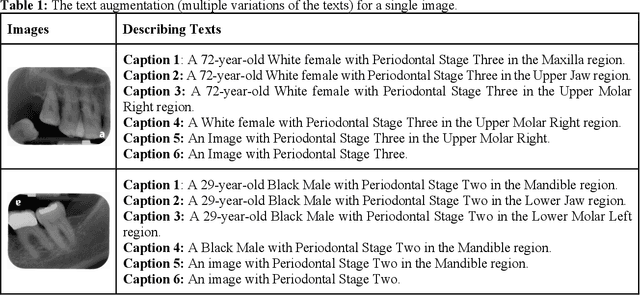

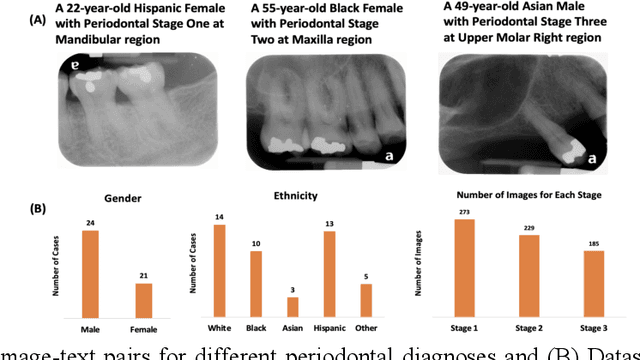

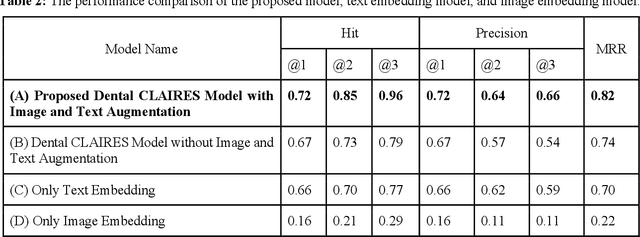

Abstract: Learning about diagnostic features and related clinical information from dental radiographs is important for dental research. However, the lack of expert-annotated data and convenient search tools poses challenges. Our primary objective is to design a search tool that retrieves images matching a user's text query for oral-related research. The proposed framework, Contrastive LAnguage Image REtrieval Search for dental research (Dental CLAIRES), uses periapical radiographs and associated clinical details, such as periodontal diagnosis and demographic information, to retrieve the best-matched images for a text query. We applied a contrastive representation learning method to find images described by the user's text by maximizing the similarity score of positive pairs (true pairs) and minimizing the score of negative pairs (random pairs). Our model achieved a hit@3 ratio of 96% and a Mean Reciprocal Rank (MRR) of 0.82. We also designed a graphical user interface that allows researchers to verify the model's performance interactively.
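The contrastive objective and the two retrieval metrics named in this abstract can be illustrated with a minimal sketch. It assumes cosine similarity, a softmax-style (InfoNCE-like) loss over a batch, and a temperature of 0.1; none of these details are stated in the abstract, and all function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def contrastive_loss(text_embs, image_embs, temperature=0.1):
    """Assumed InfoNCE-style loss: text i and image i form the positive pair,
    every other image in the batch acts as a negative."""
    total = 0.0
    for i, text in enumerate(text_embs):
        logits = [cosine(text, img) / temperature for img in image_embs]
        peak = max(logits)  # subtract the max for numerical stability
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - logits[i]
    return total / len(text_embs)

def rank_of_match(text_emb, image_embs, true_index):
    """1-based rank of the true image when images are sorted by similarity."""
    scores = [cosine(text_emb, img) for img in image_embs]
    order = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return order.index(true_index) + 1

def hit_at_k(ranks, k):
    """Fraction of queries whose true match appears in the top k results."""
    return sum(r <= k for r in ranks) / len(ranks)

def mean_reciprocal_rank(ranks):
    """Average of 1/rank over all queries."""
    return sum(1.0 / r for r in ranks) / len(ranks)

# Toy embeddings (made up for illustration only).
texts = [[1.0, 0.0], [0.0, 1.0]]
images = [[0.9, 0.1], [0.2, 0.8]]
ranks = [rank_of_match(t, images, i) for i, t in enumerate(texts)]
```

Under this formulation, lowering the loss pushes the similarity of each true text-image pair above the similarities of the mismatched pairs in the same batch, which is what raises hit@k and MRR at retrieval time.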

* 10 pages, 7 figures, 4 tables

Predicting multiple sclerosis disease severity with multimodal deep neural networks

Apr 08, 2023

Abstract: Multiple Sclerosis (MS) is a chronic disease that develops in the human brain and spinal cord and can cause permanent damage or deterioration of the nerves. The severity of MS is monitored by the Expanded Disability Status Scale (EDSS), which is composed of several functional sub-scores. Early and accurate classification of MS disease severity is critical for slowing down or preventing disease progression through early therapeutic intervention. Recent advances in deep learning and the wide use of Electronic Health Records (EHR) create opportunities to apply data-driven, predictive modeling tools to this goal. Previous studies that used single-modal machine learning and deep learning algorithms were limited in prediction accuracy because of insufficient data or overly simple models. In this paper, we propose using patients' multimodal, longitudinal EHR data to predict multiple sclerosis disease severity at the hospital visit. This work makes two important contributions. First, we describe a pilot effort to leverage structured EHR data, neuroimaging data, and clinical notes to build a multimodal deep learning framework that predicts a patient's MS disease severity. The proposed pipeline demonstrates up to a 25% increase in the Area Under the Receiver Operating Characteristic curve (AUROC) compared to models using single-modal data. Second, the study provides insights into the amount of useful signal embedded in each data modality with respect to MS disease prediction, which may improve data collection processes.
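For readers unfamiliar with the AUROC metric used for this comparison, the following is a minimal sketch of how it can be computed from binary labels and model scores. It uses the pairwise (Mann-Whitney) formulation; the function name and the toy data are hypothetical and are not taken from the paper.

```python
def auroc(labels, scores):
    """Probability that a randomly chosen positive case receives a higher
    score than a randomly chosen negative case (ties count as one half)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Toy example: 1 = severe, 0 = not severe (illustrative values only).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auroc = auroc(toy_labels, toy_scores)  # 3 of 4 positive/negative pairs are ordered correctly
```

The pairwise form is quadratic in the number of cases, so it suits small illustrations; a rank-based implementation is the usual choice for larger cohorts.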

An End-to-end Entangled Segmentation and Classification Convolutional Neural Network for Periodontitis Stage Grading from Periapical Radiographic Images

Sep 27, 2021

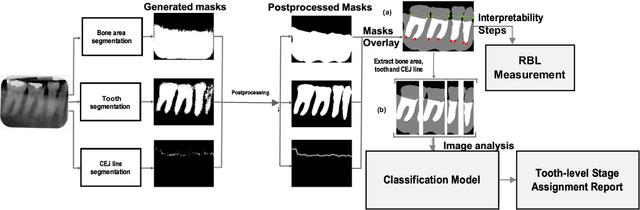

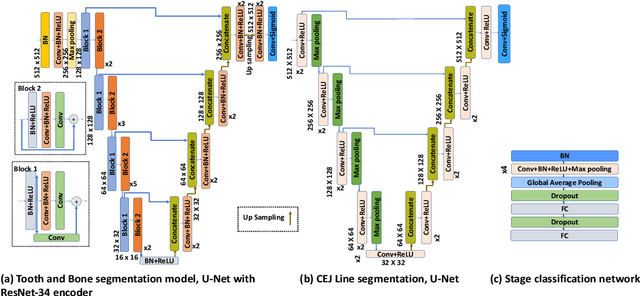

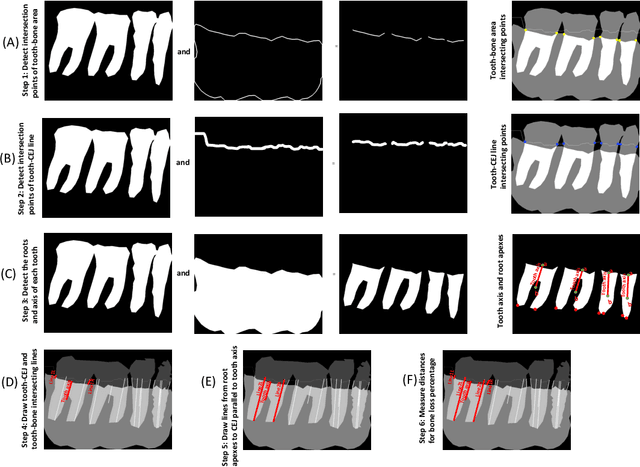

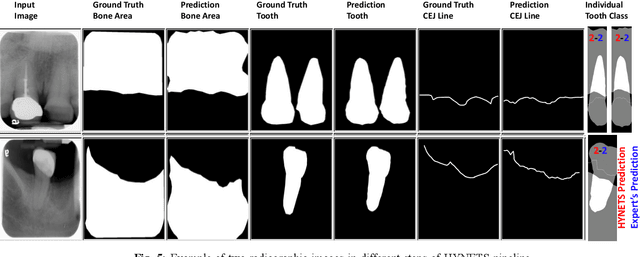

Abstract: Periodontitis is a biofilm-related chronic inflammatory disease characterized by gingivitis and bone loss around the teeth. In the United States, approximately 61 million adults over 30 (42.2%) suffer from periodontitis, and 7.8% have severe periodontitis. The measurement of radiographic bone loss (RBL) is necessary to make a correct periodontal diagnosis, especially if comprehensive and longitudinal periodontal mapping is unavailable. However, doctors can interpret X-rays differently depending on their experience and knowledge. Computerized diagnosis support can help doctors make diagnoses with high accuracy and consistency and draw up an appropriate treatment plan for preventing or controlling periodontitis. We developed an end-to-end deep learning network, HYNETS (Hybrid NETwork for pEriodoNTiTiS STagES from radiograpH), that integrates segmentation and classification tasks for grading periodontitis from periapical radiographic images. HYNETS leverages a multi-task learning strategy, combining a set of segmentation networks and a classification network, to provide an end-to-end interpretable solution with highly accurate and consistent results. HYNETS achieved average Dice coefficients of 0.96 and 0.94 for bone area and tooth segmentation, respectively, and an average AUC of 0.97 for periodontitis stage assignment. Additionally, conventional image processing techniques provide RBL measurements and build transparency and trust in the model's prediction. HYNETS could transform clinical diagnosis from a manual, time-consuming, and error-prone task into an efficient, automated periodontitis stage assignment based on periapical radiographic images.
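The Dice coefficient reported for the segmentation results measures the overlap between a predicted mask and a reference mask. A minimal sketch follows, assuming binary masks stored as nested lists; the function name and the convention of returning 1.0 for two empty masks are assumptions, not details from the paper.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two binary masks of equal shape."""
    flat_pred = [bool(p) for row in pred_mask for p in row]
    flat_true = [bool(t) for row in true_mask for t in row]
    if len(flat_pred) != len(flat_true):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p and t for p, t in zip(flat_pred, flat_true))
    total = sum(flat_pred) + sum(flat_true)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total

# Toy 2x2 masks (illustrative only): one shared pixel, three foreground pixels in total.
predicted = [[1, 1], [0, 0]]
reference = [[1, 0], [0, 0]]
toy_dice = dice_coefficient(predicted, reference)
```

A value of 1.0 means the two masks coincide exactly, and 0.0 means they share no foreground pixels.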

Use of the Deep Learning Approach to Measure Alveolar Bone Level

Sep 24, 2021

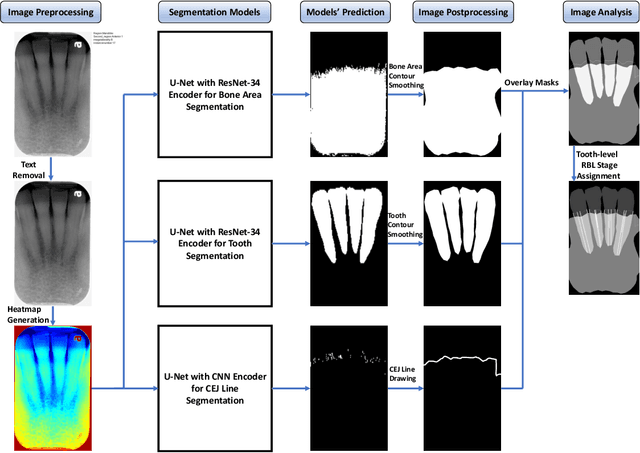

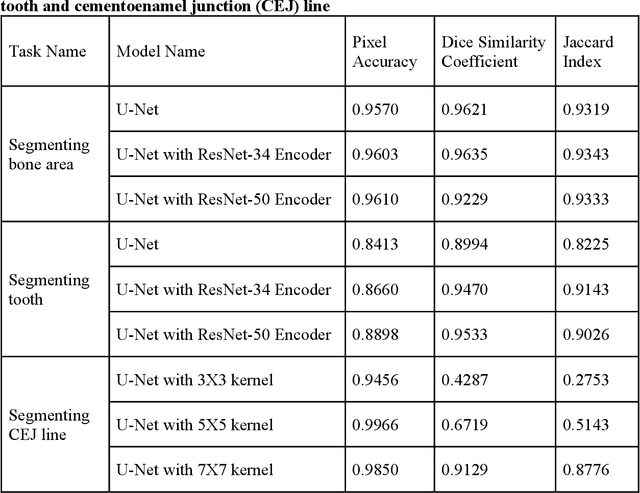

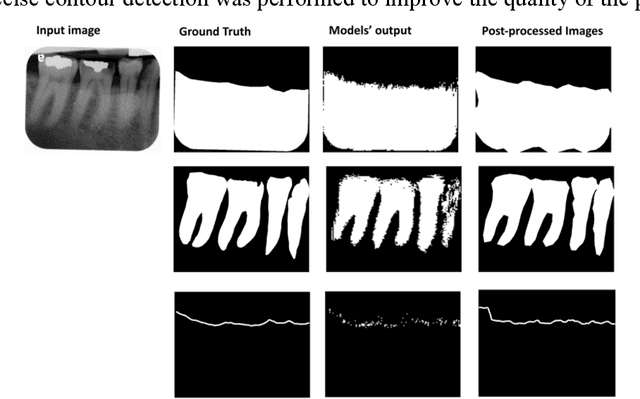

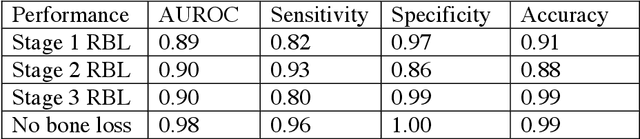

Abstract: Aim: The goal was to use a Deep Convolutional Neural Network to measure the radiographic alveolar bone level to aid periodontal diagnosis. Material and methods: A Deep Learning (DL) model was developed by integrating three segmentation networks (bone area, tooth, cementoenamel junction) and image analysis to measure the radiographic bone level and assign radiographic bone loss (RBL) stages. The percentage of RBL was calculated to determine the stage of RBL for each tooth. A provisional periodontal diagnosis was assigned using the 2018 periodontitis classification. RBL percentage, staging, and presumptive diagnosis were compared to the measurements and diagnoses made by independent examiners. Results: The average Dice Similarity Coefficient (DSC) for segmentation was over 0.91. There was no significant difference between the RBL percentage measurements determined by DL and by the examiners (p=0.65). The Area Under the Receiver Operating Characteristic Curve of RBL stage assignment for stages I, II, and III was 0.89, 0.90, and 0.90, respectively. The accuracy of the case diagnosis was 0.85. Conclusion: The proposed DL model provides reliable RBL measurements and image-based periodontal diagnosis using periapical radiographic images. However, this model has to be further optimized and validated on a larger number of images to facilitate its application.
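The step from landmarks to an RBL percentage and stage can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes RBL is the distance from the cementoenamel junction (CEJ) to the bone level divided by the distance from the CEJ to the root apex, and it assumes the commonly cited 2018 classification cutoffs (below 15% for stage I, 15% to 33% for stage II, beyond that for stage III). The function names and coordinates are hypothetical.

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) landmark coordinates."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def rbl_percentage(cej, bone_level, root_apex):
    """Assumed definition: CEJ-to-bone distance as a percentage of CEJ-to-apex distance."""
    root_length = distance(cej, root_apex)
    if root_length == 0:
        raise ValueError("CEJ and root apex must be distinct points")
    return 100.0 * distance(cej, bone_level) / root_length

def rbl_stage(percent):
    """Assumed cutoffs: <15% is stage I, 15-33% is stage II, above 33% is stage III."""
    if percent < 15:
        return "I"
    if percent <= 33:
        return "II"
    return "III"

# Toy landmarks in pixel coordinates (illustrative only).
toy_percent = rbl_percentage(cej=(0, 0), bone_level=(0, 2), root_apex=(0, 10))
toy_stage = rbl_stage(toy_percent)
```

In a full pipeline the landmark coordinates would come from the segmentation outputs; here they are hard-coded so the arithmetic is easy to follow.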
