Shayan Sepahvand

Deep Visual Servoing of an Aerial Robot Using Keypoint Feature Extraction

Mar 29, 2025

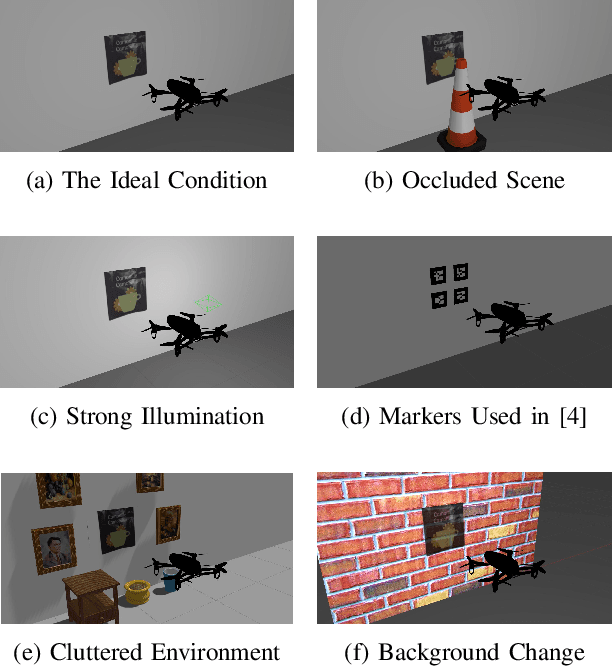

Abstract: This article addresses image-based visual servoing (IBVS) of an aerial robot using deep-learning-based keypoint detection. A monocular RGB camera mounted on the platform collects the visual data. A convolutional neural network (CNN) then extracts the features that serve as the visual input to the servoing task. This paper contributes to the field not only by circumventing the need for man-made marker detection in conventional visual servoing techniques, but also by enhancing robustness against undesirable factors including occlusion, varying illumination, clutter, and background changes, thereby broadening the applicability of perception-guided motion control tasks in aerial robots. Additionally, extensive physics-based ROS Gazebo simulations are conducted to assess the effectiveness of this method, in contrast to many existing studies that rely solely on physics-less simulations. A demonstration video is available at https://youtu.be/Dd2Her8Ly-E.
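For readers unfamiliar with IBVS, the following is a minimal sketch of the classical point-feature control law from the visual servoing literature, in which the camera velocity is computed as v = -λ L⁺ (s - s*). It is not taken from the paper and may differ from the controller the authors use. The function names, the gain value, the use of normalized image coordinates, and the assumption that each keypoint's depth is known are all illustrative assumptions.

```python
import numpy as np

def interaction_matrix(points, depths):
    """Stack the standard 2x6 point-feature interaction matrices.

    points: iterable of (x, y) in normalized image coordinates (assumed).
    depths: iterable of depths Z, one per point (assumed to be known).
    """
    rows = []
    for (x, y), Z in zip(points, depths):
        rows.append([-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y])
        rows.append([0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x])
    return np.array(rows)

def ibvs_velocity(points, desired, depths, gain=0.5):
    """Return a 6-vector camera twist (vx, vy, vz, wx, wy, wz).

    Classical law: v = -gain * pinv(L) @ (s - s_desired).
    """
    error = (np.asarray(points, dtype=float)
             - np.asarray(desired, dtype=float)).reshape(-1)
    L = interaction_matrix(points, depths)
    return -gain * np.linalg.pinv(L) @ error

# Hypothetical usage: four detected keypoints shifted from their targets.
desired = [(0.1, 0.1), (-0.1, 0.1), (-0.1, -0.1), (0.1, -0.1)]
current = [(x + 0.05, y) for x, y in desired]
twist = ibvs_velocity(current, desired, depths=[1.0] * 4)
```

In a keypoint-based pipeline, `current` would be supplied by the detector at each frame, and the resulting twist would still have to be mapped to the vehicle's own control inputs, which this sketch does not attempt.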

Image-to-Joint Inverse Kinematic of a Supportive Continuum Arm Using Deep Learning

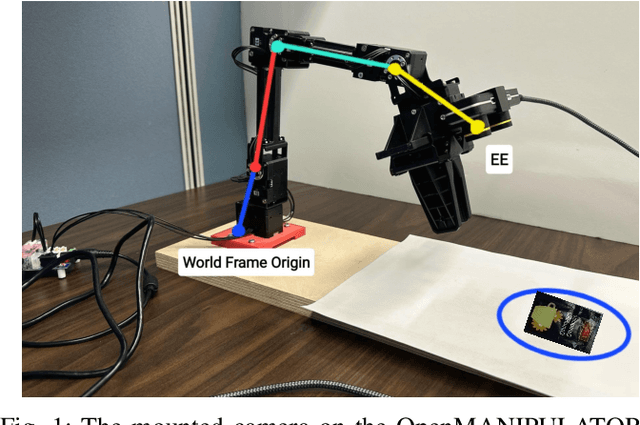

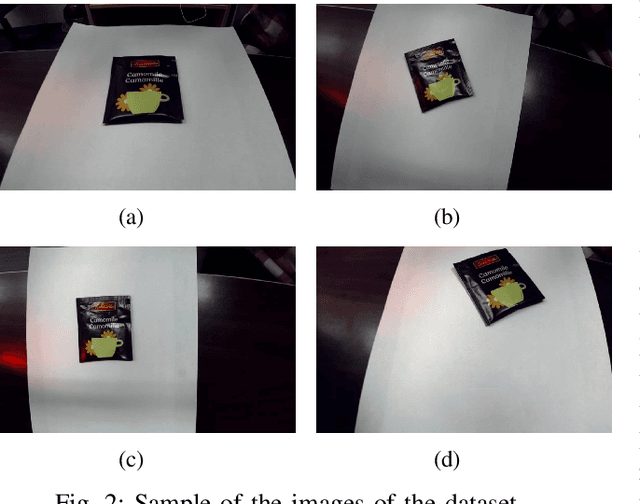

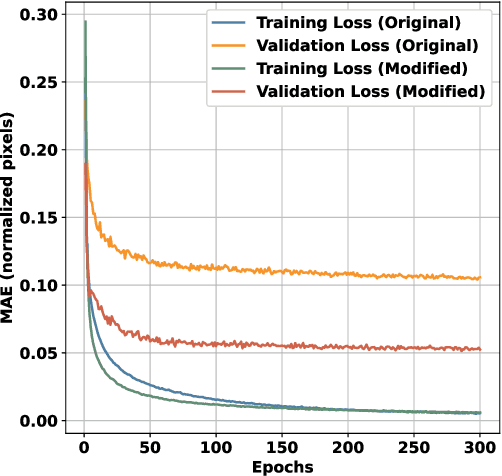

May 30, 2024

Abstract: In this work, a deep learning-based technique is used to study the image-to-joint inverse kinematics of a tendon-driven supportive continuum arm. An eye-off-hand configuration is considered by mounting a camera at a fixed pose with respect to the inertial frame attached at the arm base. This camera captures an image for each distinct joint variable at each sampling time to construct the training dataset. This dataset is then employed to adapt a feed-forward deep convolutional neural network, namely the modified VGG-16 model, to estimate the joint variable. One thousand images are recorded to train the deep network, and transfer learning and fine-tuning techniques are applied to the modified VGG-16 to further improve the training. Finally, training is also completed with a larger dataset of images that are affected by various types of noise, changes in illumination, and partial occlusion. The main contribution of this research is the development of an image-to-joint network that can estimate the joint variable given an image of the arm, even if the image is not captured under ideal conditions. The key benefits of this research are twofold: 1) image-to-joint mapping can offer a real-time alternative to computationally complex inverse kinematic mapping through analytical models; and 2) the proposed technique can provide robustness against noise, occlusion, and changes in illumination. The dataset is publicly available on Kaggle.
