Shanshan Xiao

A deformation-based framework for learning solution mappings of PDEs defined on varying domains

Dec 02, 2024

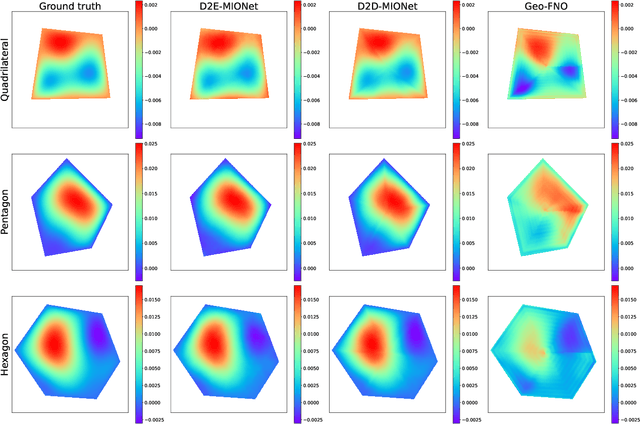

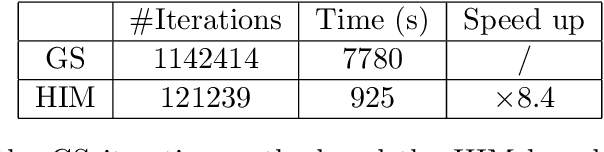

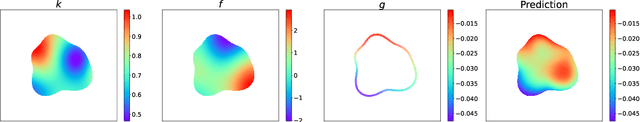

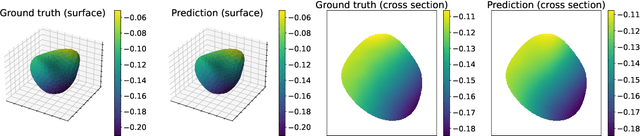

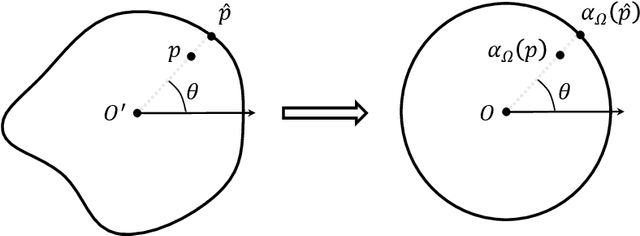

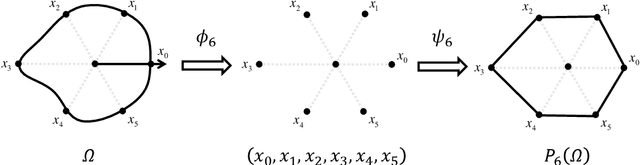

Abstract: In this work, we establish a deformation-based framework for learning solution mappings of PDEs defined on varying domains. The union of functions defined on varying domains can be identified as a metric space via the deformation. The solution mapping is then regarded as a continuous metric-to-metric mapping, which can in turn be represented by a continuous metric-to-Banach mapping using two different strategies, referred to as the D2D framework and the D2E framework, respectively. We point out that such a metric-to-Banach mapping can be learned by neural networks, and hence so can the solution mapping. With this framework, we build a rigorous convergence analysis for the problem of learning solution mappings of PDEs on varying domains. Because the theoretical framework rests on several pivotal assumptions that must be verified for each specific problem, we study star domains as a typical example; other situations can be verified similarly. The framework has three important features: (1) The domains under consideration are not required to be diffeomorphic, so a wide range of regions can be covered by one model provided they are homeomorphic. (2) The deformation mapping need not be continuous, so it can be flexibly constructed by combining a primary identity mapping with a local deformation mapping. This capability facilitates solving large systems in which only local parts of the geometry change. (3) If a linearity-preserving neural operator such as MIONet is adopted, the framework still preserves the linearity of the surrogate solution mapping with respect to the source term for linear PDEs, so it can be applied to the hybrid iterative method. Finally, we present several numerical experiments to validate our theoretical results.
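To make the idea of a deformation between star domains concrete, here is a minimal sketch. It assumes a 2-d domain that is star-shaped about the origin, with its boundary given in polar form by a positive function r(θ), and it uses plain radial scaling to map the domain onto the unit disk. The abstract does not specify the deformation used in the paper, so the function names (`star_to_disk`, `disk_to_star`, `example_radius`) and the choice of radial scaling are illustrative assumptions, not the authors' construction.

```python
import numpy as np


def star_to_disk(points, radius_fn):
    """Map points of a star domain (star-shaped about the origin) into the unit disk.

    Assumption: the boundary is r = radius_fn(theta) with radius_fn > 0.
    Each point is divided by the boundary radius along its own ray.
    """
    theta = np.arctan2(points[:, 1], points[:, 0])
    return points / radius_fn(theta)[:, None]


def disk_to_star(points, radius_fn):
    """Inverse of star_to_disk: stretch each ray of the unit disk back out."""
    theta = np.arctan2(points[:, 1], points[:, 0])
    return points * radius_fn(theta)[:, None]


def example_radius(theta):
    """Hypothetical boundary used only for this illustration."""
    return 1.0 + 0.3 * np.cos(3.0 * theta)


# Sample a few boundary points of the example star domain.
angles = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
boundary = np.stack(
    [example_radius(angles) * np.cos(angles),
     example_radius(angles) * np.sin(angles)],
    axis=1,
)

# Boundary points land on the unit circle; interior points land inside it.
mapped_boundary = star_to_disk(boundary, example_radius)
interior = 0.5 * boundary
mapped_interior = star_to_disk(interior, example_radius)
recovered = disk_to_star(mapped_interior, example_radius)
```

With such a map, functions on different star domains can be pulled back to one reference domain (here the unit disk), which is one way to compare them in a common space.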

Learning solution operators of PDEs defined on varying domains via MIONet

Feb 23, 2024

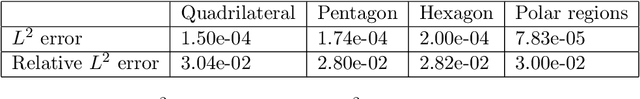

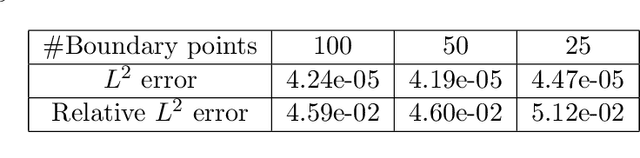

Abstract: In this work, we propose a method to learn the solution operators of PDEs defined on varying domains via MIONet, and theoretically justify this method. We first extend the approximation theory of MIONet to metric spaces, establishing that MIONet can approximate mappings with multiple inputs in metric spaces. Subsequently, we construct a set consisting of appropriate regions and equip it with a metric, making it a metric space that satisfies the approximation condition of MIONet. Building upon this theoretical foundation, we are able to learn the solution mapping of a PDE with all of its parameters varying, including the parameters of the differential operator, the right-hand side term, the boundary condition, and the domain. As a representative example, we perform experiments on 2-d Poisson equations in which the domains and the right-hand side terms vary. The results provide insights into the performance of this method across convex polygons, polar regions with smooth boundary, and predictions for different levels of discretization on one task. We also point out that this is a meshless method, and hence it can be flexibly used as a general solver for a type of PDE.

Generalized Lagrangian Neural Networks

Jan 09, 2024

Abstract: Incorporating neural networks into the solution of Ordinary Differential Equations (ODEs) is a pivotal research direction within computational mathematics. Building the intrinsic structure of ODEs into neural network architectures offers advantages such as enhanced predictive capabilities and reduced data requirements. Among these structured ODE forms, the Lagrangian representation stands out due to its significant physical underpinnings. Building upon this framework, Bhattoo introduced the concept of Lagrangian Neural Networks (LNNs). In this article, we introduce an extension of LNNs, Generalized Lagrangian Neural Networks (GLNNs), tailored to non-conservative systems. By leveraging the foundational importance of the Lagrangian within Lagrange's equations, we formulate the model based on the generalized Lagrange's equation. This modification not only enhances prediction accuracy but also guarantees a Lagrangian representation in non-conservative systems. Furthermore, we perform various experiments, encompassing 1-dimensional and 2-dimensional examples, along with an examination of the impact of network parameters, which demonstrate the superiority of GLNNs.
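For reference, the generalized Lagrange's equation in its standard textbook form is shown below. The symbols are assumptions introduced here for illustration: $L(q, \dot q)$ is the Lagrangian, $q_i$ are the generalized coordinates, and $Q_i$ are the non-conservative generalized forces. The abstract does not state the exact parameterization used in the paper, so this is only the classical equation that such a model would build on.

```latex
\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}_i}\right)
  - \frac{\partial L}{\partial q_i} = Q_i ,
\qquad i = 1, \dots, n .
```

Setting $Q_i = 0$ recovers the Euler-Lagrange equations of a conservative system.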
