Shangming Zhou

Towards a Shapley Value Graph Framework for Medical peer-influence

Dec 29, 2021

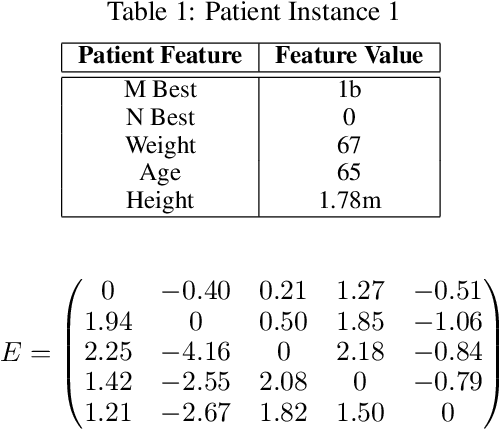

Abstract: eXplainable Artificial Intelligence (XAI) is a sub-field of Artificial Intelligence (AI) that is at the forefront of AI research. In XAI, feature attribution methods produce explanations in the form of feature importance. A limitation of existing feature attribution methods is that they do not explain the consequences of intervention: although each feature's contribution towards a given prediction is highlighted, the influence between features and the consequence of intervening on them are not addressed. The aim of this paper is to introduce a new framework that looks deeper into explanations by using a graph representation of feature-to-feature interactions, in order to improve the interpretability of black-box Machine Learning (ML) models and to inform intervention.
