Shane Gilroy

Classification of Driver Behaviour Using External Observation Techniques for Autonomous Vehicles

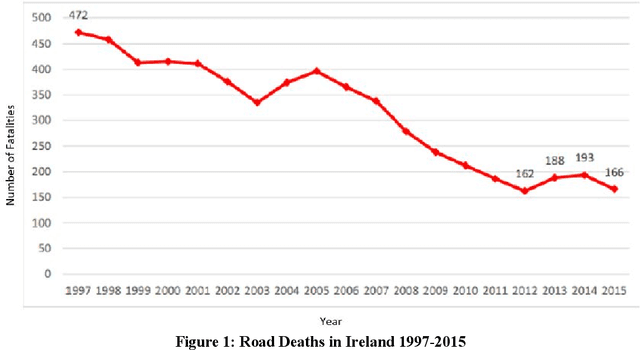

Sep 11, 2025

Abstract: Road traffic accidents remain a significant global concern, with human error, particularly distracted and impaired driving, among the leading causes. This study introduces a novel driver behavior classification system that uses external observation techniques to detect indicators of distraction and impairment. The proposed framework employs advanced computer vision methodologies, including real-time object tracking, lateral displacement analysis, and lane position monitoring. The system identifies unsafe driving behaviors such as excessive lateral movement and erratic trajectory patterns by implementing the YOLO object detection model and custom lane estimation algorithms. Unlike systems reliant on inter-vehicular communication, this vision-based approach enables behavioral analysis of non-connected vehicles. Experimental evaluations on diverse video datasets demonstrate the framework's reliability and adaptability across varying road and environmental conditions.
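As a rough illustration of what lateral displacement analysis can look like, the sketch below computes a normalised offset from the lane centre for a tracked vehicle and flags high variability across frames. It is not the paper's implementation: the function names, the per-frame lane boundary inputs, and the 0.25 threshold are assumptions made for this example.

```python
from statistics import pstdev


def lateral_offsets(centers_x, lane_left_x, lane_right_x):
    """Per-frame offset from the lane centre: 0 = centred, +/-1 = on a boundary.

    All inputs are hypothetical pixel x-coordinates, one value per frame,
    e.g. a tracked bounding-box centre and estimated lane boundaries.
    """
    offsets = []
    for cx, left, right in zip(centers_x, lane_left_x, lane_right_x):
        half_width = (right - left) / 2.0
        lane_centre = (left + right) / 2.0
        offsets.append((cx - lane_centre) / half_width)
    return offsets


def is_erratic(offsets, std_threshold=0.25):
    """Flag a track whose lateral offset varies strongly (threshold is illustrative)."""
    return pstdev(offsets) > std_threshold


steady = lateral_offsets([100, 101, 99, 100], [50] * 4, [150] * 4)
weaving = lateral_offsets([100, 140, 60, 135, 65], [50] * 5, [150] * 5)
print(is_erratic(steady), is_erratic(weaving))
```

A real system would also need to handle perspective, lane changes, and noisy lane estimates, none of which this sketch attempts.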

Objective Bicycle Occlusion Level Classification using a Deformable Parts-Based Model

May 21, 2025

Abstract: Road safety is a critical challenge, particularly for cyclists, who are among the most vulnerable road users. This study aims to enhance road safety by proposing a novel benchmark for bicycle occlusion level classification using advanced computer vision techniques. Utilizing a parts-based detection model, images are annotated and processed through a custom image detection pipeline. A novel method of bicycle occlusion level classification is proposed to objectively quantify the visibility and occlusion level of bicycle semantic parts. The findings indicate that the model robustly quantifies the visibility and occlusion level of bicycles, a significant improvement over the subjective methods used by the current state of the art. Widespread use of the proposed methodology will facilitate the accurate performance reporting of cyclist detection algorithms for occluded cyclists, informing the development of more robust vulnerable road user detection methods for autonomous vehicles.

E-Scooter Rider Detection and Classification in Dense Urban Environments

May 20, 2022

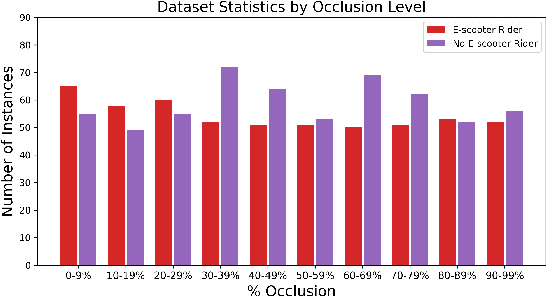

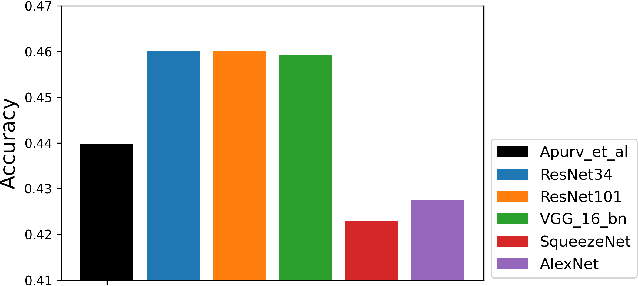

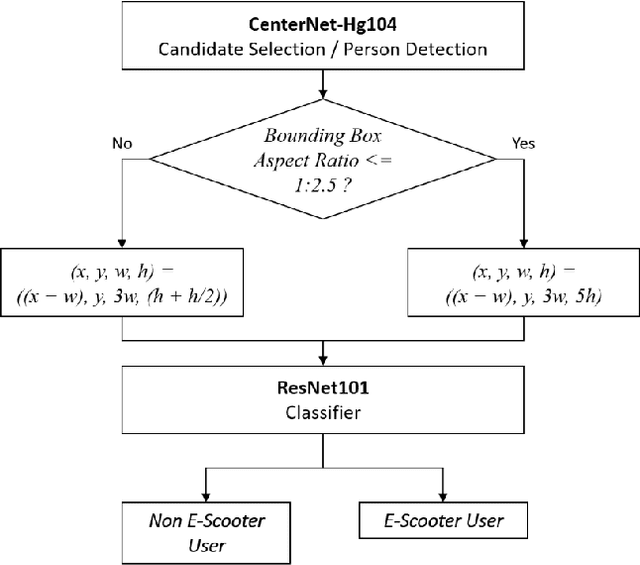

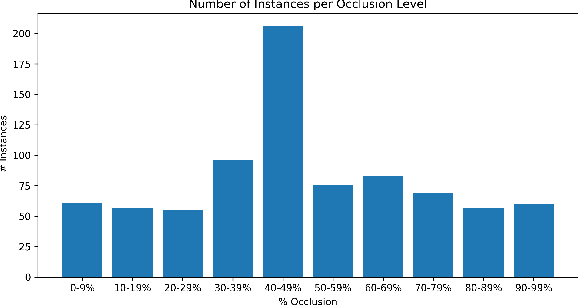

Abstract: Accurate detection and classification of vulnerable road users is a safety-critical requirement for the deployment of autonomous vehicles in heterogeneous traffic. Although similar in physical appearance to pedestrians, e-scooter riders follow distinctly different characteristics of movement and can reach speeds of up to 45 km/h. The challenge of detecting e-scooter riders is exacerbated in urban environments where the frequency of partial occlusion is increased as riders navigate between vehicles, traffic infrastructure and other road users. This can lead to the non-detection or misclassification of e-scooter riders as pedestrians, providing inaccurate information for accident mitigation and path planning in autonomous vehicle applications. This research introduces a novel benchmark for partially occluded e-scooter rider detection to facilitate the objective characterization of detection models. A novel, occlusion-aware method of e-scooter rider detection is presented that achieves a 15.93% improvement in detection performance over the current state of the art.

The Impact of Partial Occlusion on Pedestrian Detectability

May 12, 2022

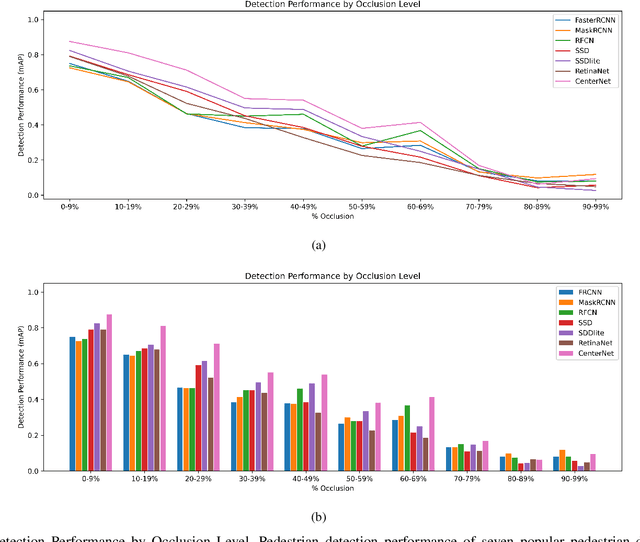

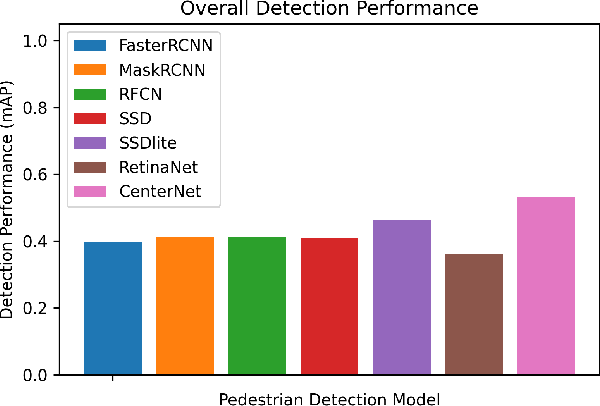

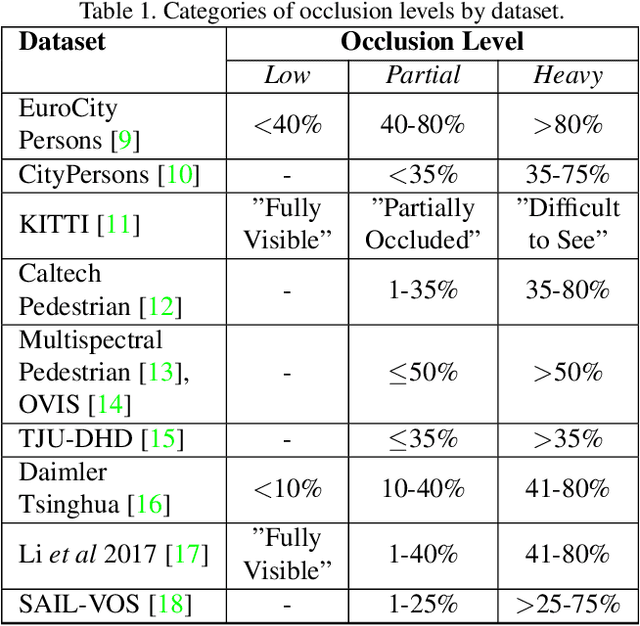

Abstract: Robust detection of vulnerable road users is a safety-critical requirement for the deployment of autonomous vehicles in heterogeneous traffic. One of the most complex outstanding challenges is that of partial occlusion, where a target object is only partially available to the sensor due to obstruction by another foreground object. A number of leading pedestrian detection benchmarks provide annotation for partial occlusion; however, each benchmark varies greatly in its definition of the occurrence and severity of occlusion. Recent research demonstrates that a high degree of subjectivity is used to classify occlusion level in these cases and occlusion is typically categorized into 2 to 3 broad categories such as partially and heavily occluded. This can lead to inaccurate or inconsistent reporting of pedestrian detection model performance depending on which benchmark is used. This research introduces a novel, objective benchmark for partially occluded pedestrian detection to facilitate the objective characterization of pedestrian detection models. Characterization is carried out on seven popular pedestrian detection models for a range of occlusion levels from 0-99%. Results demonstrate that pedestrian detection performance degrades, and the number of false negative detections increases, as pedestrian occlusion level increases. Of the seven popular pedestrian detection routines characterized, CenterNet has the greatest overall performance, followed by SSDlite. RetinaNet has the lowest overall detection performance across the range of occlusion levels.
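Characterising a detector across occlusion levels amounts to grouping ground-truth pedestrians by occlusion percentage and reporting a miss rate per group. The following is a minimal sketch of that bookkeeping only; the 10% bin width, the function name, and the sample data are assumptions for illustration and are not taken from the benchmark.

```python
from collections import defaultdict


def miss_rate_by_occlusion(samples, bin_width=10):
    """Return {bin_start: fraction of missed pedestrians} per occlusion bin.

    `samples` is a hypothetical list of (occlusion_percent, detected) pairs,
    one per ground-truth pedestrian.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for occlusion_pct, detected in samples:
        bin_start = int(occlusion_pct // bin_width) * bin_width
        totals[bin_start] += 1
        if not detected:
            misses[bin_start] += 1  # a false negative in this bin
    return {b: misses[b] / totals[b] for b in sorted(totals)}


# Made-up example data, not results from the paper.
samples = [(5, True), (8, True), (45, True), (47, False), (92, False), (95, False)]
print(miss_rate_by_occlusion(samples))
```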

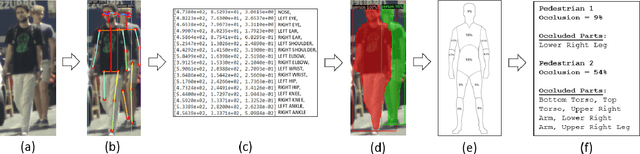

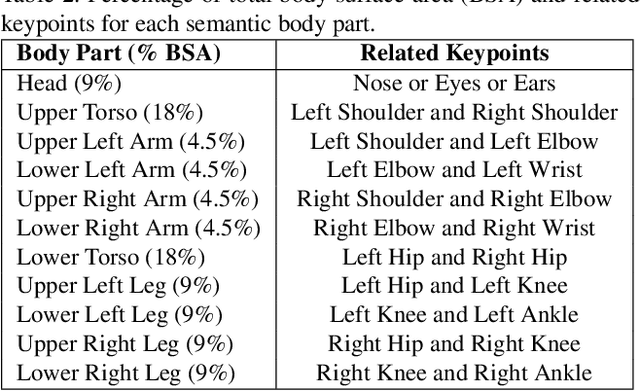

An Objective Method for Pedestrian Occlusion Level Classification

May 11, 2022

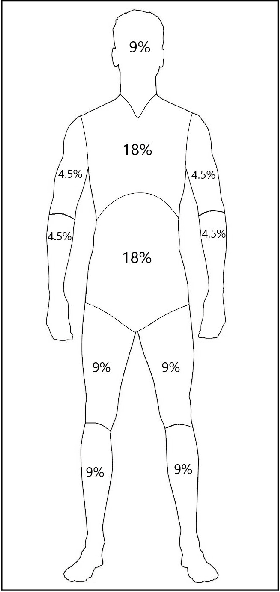

Abstract: Pedestrian detection is among the most safety-critical features of driver assistance systems for autonomous vehicles. One of the most complex detection challenges is that of partial occlusion, where a target object is only partially available to the sensor due to obstruction by another foreground object. A number of current pedestrian detection benchmarks provide annotation for partial occlusion to assess algorithm performance in these scenarios; however, each benchmark varies greatly in its definition of the occurrence and severity of occlusion. In addition, current occlusion level annotation methods contain a high degree of subjectivity by the human annotator. This can lead to inaccurate or inconsistent reporting of an algorithm's detection performance for partially occluded pedestrians, depending on which benchmark is used. This research presents a novel, objective method for pedestrian occlusion level classification for ground truth annotation. Occlusion level classification is achieved through the identification of visible pedestrian keypoints and through the use of a novel, effective method of 2D body surface area estimation. Experimental results demonstrate that the proposed method reflects the pixel-wise occlusion level of pedestrians in images and is effective for all forms of occlusion, including challenging edge cases such as self-occlusion, truncation and inter-occluding pedestrians.
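One way to picture the idea of combining visible keypoints with a body surface area estimate is to give each body part a share of the total 2D area and sum the shares of the parts that are visible. The sketch below does only that. The part names and area fractions are hypothetical placeholders, not the values or the estimation method used in the paper.

```python
# Hypothetical share of 2D body surface area per part (sums to 1.0).
PART_AREA_FRACTION = {
    "head": 0.10,
    "torso": 0.35,
    "left_arm": 0.10,
    "right_arm": 0.10,
    "left_leg": 0.175,
    "right_leg": 0.175,
}


def occlusion_level(visible_parts):
    """Return the occluded fraction of the body, from 0.0 (fully visible) to 1.0."""
    visible_area = sum(PART_AREA_FRACTION[part] for part in set(visible_parts))
    return 1.0 - visible_area


# A pedestrian whose legs and arms are hidden, e.g. behind a parked car.
print(round(occlusion_level(["head", "torso"]), 3))
```

In practice the visible part list would come from a keypoint detector, and partial visibility within a part would need finer handling than this all-or-nothing lookup.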

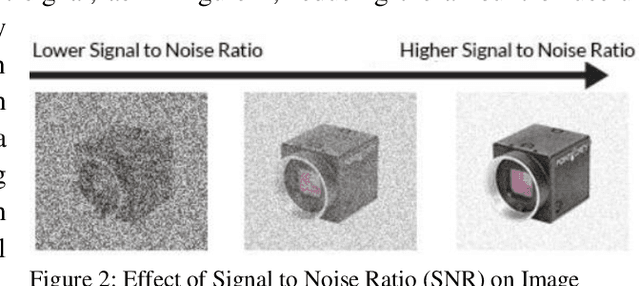

Characterisation of CMOS Image Sensor Performance in Low Light Automotive Applications

Nov 24, 2020

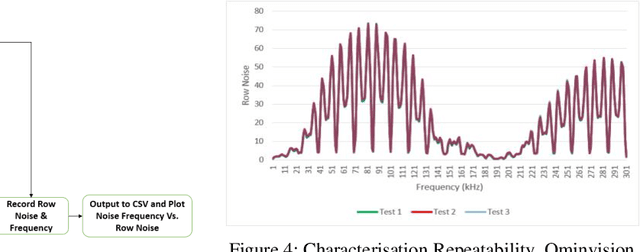

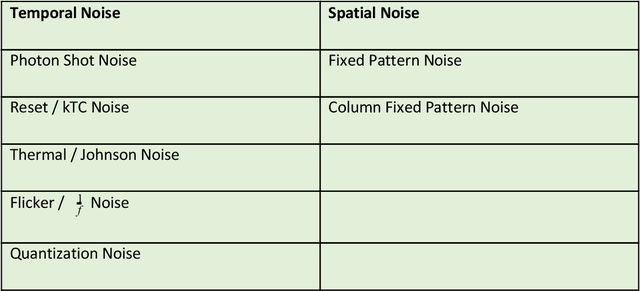

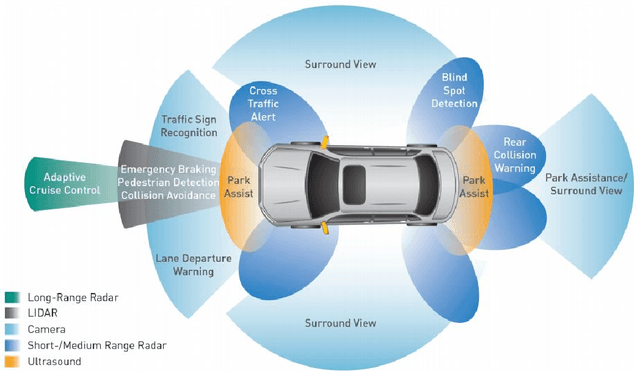

Abstract: The applications of automotive cameras in Advanced Driver-Assistance Systems (ADAS) are growing rapidly as automotive manufacturers strive to provide 360-degree protection for their customers. Vision systems must capture high quality images in both daytime and night-time scenarios in order to produce the large informational content required for software analysis in applications such as lane departure, pedestrian detection and collision detection. The challenge in producing high quality images in low light scenarios is that the signal-to-noise ratio is greatly reduced. This can result in noise becoming the dominant factor in a captured image, thereby making these safety systems less effective at night. This paper outlines a systematic method for characterisation of state-of-the-art image sensor performance in response to noise, so as to improve the design and performance of automotive cameras in low light scenarios. The experiment outlined in this paper demonstrates how this method can be used to characterise the performance of CMOS image sensors in response to electrical noise on the power supply lines.

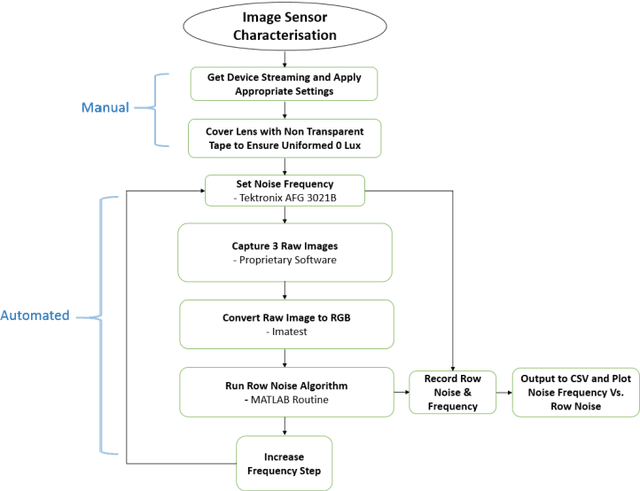

Impact of Power Supply Noise on Image Sensor Performance in Automotive Applications

Nov 24, 2020

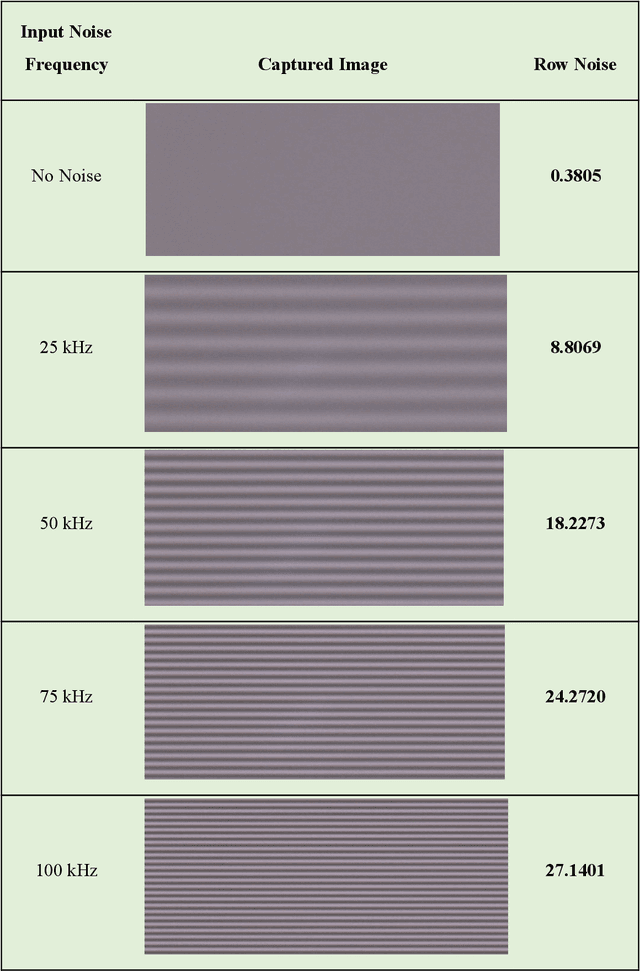

Abstract: Vision systems are quickly becoming a large component of active automotive safety systems. To be effective in critical safety applications, these systems must produce high quality images in both daytime and night-time scenarios, providing the large informational content required for software analysis in applications such as lane departure, pedestrian detection and collision detection. The challenge in capturing high quality images in low light scenarios is that the signal-to-noise ratio is greatly reduced, which can result in noise becoming the dominant factor in a captured image, thereby making these safety systems less effective at night. Research has been undertaken to develop a systematic method of characterising image sensor performance in response to electrical noise in order to improve the design and performance of automotive cameras in low light scenarios. The root cause of image row noise has been established and a mathematical algorithm for determining the magnitude of row noise in an image has been devised. An automated characterisation method has been developed to allow performance characterisation in response to a large frequency spectrum of electrical noise on the image sensor power supply. Various strategies for improving image sensor performance in low light applications have also been proposed from the research outcomes.
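The abstract does not give the row noise algorithm itself. As a point of reference, a simple and commonly used estimate is the standard deviation of the per-row mean pixel values in a uniform (dark or flat-field) frame, sketched below. This is an assumed stand-in, not the algorithm devised in the paper, and the function name is hypothetical.

```python
from statistics import mean, pstdev


def row_noise_magnitude(frame):
    """Estimate row noise as the spread of row means in a uniform frame.

    `frame` is a list of rows, each a list of pixel values. For a uniformly
    lit or dark capture, any variation between row means is row-wise noise.
    """
    row_means = [mean(row) for row in frame]
    return pstdev(row_means)


# Toy 2x3 frame where the second row is offset by 2 grey levels.
print(row_noise_magnitude([[10, 10, 10], [12, 12, 12]]))
```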
