Shaina Mehta

Su-RoBERTa: A Semi-supervised Approach to Predicting Suicide Risk through Social Media using Base Language Models

Dec 02, 2024

Abstract: In recent times, more and more people are posting about their mental states across various social media platforms. Leveraging this data, AI-based systems can be developed to help assess aspects of individuals' mental health, such as suicide risk. This paper studies suicide risk assessment on Reddit data, using base language models to identify patterns in social media posts. We demonstrate that smaller language models, i.e., those with fewer than 500M parameters, can also be effective compared with LLMs with more than 500M parameters. We propose Su-RoBERTa, a RoBERTa model fine-tuned on the suicide risk prediction task that utilizes both labeled and unlabeled Reddit data and tackles class imbalance through data augmentation with the GPT-2 model. Our Su-RoBERTa model attained a 69.84% weighted F1 score during the final evaluation. This paper demonstrates the effectiveness of base language models for analyzing risk factors related to mental health with an efficient computation pipeline.

Depression Detection and Analysis using Large Language Models on Textual and Audio-Visual Modalities

Jul 08, 2024

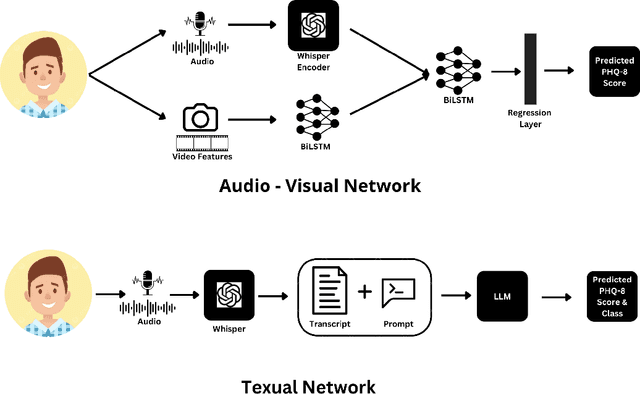

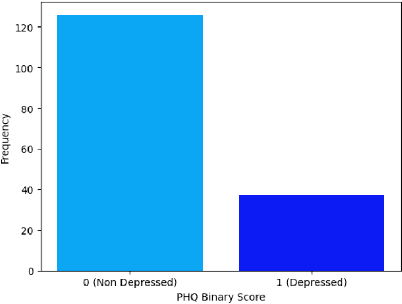

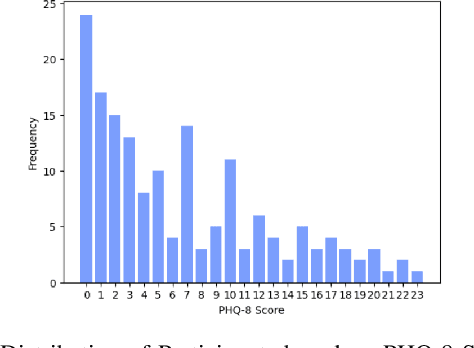

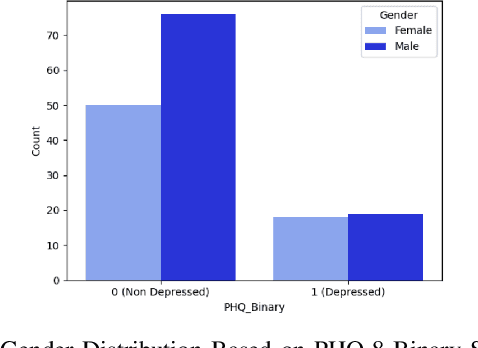

Abstract: Depression has proven to be a significant public health issue, profoundly affecting the psychological well-being of individuals. If it remains undiagnosed, depression can lead to severe health issues, which can manifest physically and even lead to suicide. Generally, clinicians and mental health professionals diagnose depression or any other mental disorder by conducting semi-structured interviews alongside supplementary questionnaires, including variants of the Patient Health Questionnaire (PHQ). This approach places significant reliance on the experience and judgment of trained physicians, making the diagnosis susceptible to personal biases. Given that the underlying mechanisms causing depression are still being actively researched, physicians often face challenges in diagnosing and treating the condition, particularly in its early stages of clinical presentation. Recently, significant strides have been made in artificial neural computing to solve problems involving text, image, and speech in various domains. Our analysis aims to leverage these state-of-the-art (SOTA) models across multiple modalities to achieve optimal outcomes. The experiments were performed on the Extended Distress Analysis Interview Corpus Wizard of Oz (E-DAIC) dataset presented in the Audio/Visual Emotion Challenge (AVEC) 2019. The proposed solutions, built on proprietary and open-source Large Language Models (LLMs), achieved a Root Mean Square Error (RMSE) of 3.98 on the textual modality, beating the AVEC 2019 challenge baseline results and current SOTA regression analysis architectures. Additionally, the proposed solution achieved an accuracy of 71.43% in the classification task. The paper also includes a novel audio-visual multi-modal network that predicts PHQ-8 scores with an RMSE of 6.51.
