Seyed Rasoul Hosseini

Boosting Unconstrained Face Recognition with Targeted Style Adversary

Aug 14, 2024

Abstract:While deep face recognition models have demonstrated remarkable performance, they often struggle on the inputs from domains beyond their training data. Recent attempts aim to expand the training set by relying on computationally expensive and inherently challenging image-space augmentation of image generation modules. In an orthogonal direction, we present a simple yet effective method to expand the training data by interpolating between instance-level feature statistics across labeled and unlabeled sets. Our method, dubbed Targeted Style Adversary (TSA), is motivated by two observations: (i) the input domain is reflected in feature statistics, and (ii) face recognition model performance is influenced by style information. Shifting towards an unlabeled style implicitly synthesizes challenging training instances. We devise a recognizability metric to constraint our framework to preserve the inherent identity-related information of labeled instances. The efficacy of our method is demonstrated through evaluations on unconstrained benchmarks, outperforming or being on par with its competitors while offering nearly a 70\% improvement in training speed and 40\% less memory consumption.

Deep Reinforcement Learning with Enhanced PPO for Safe Mobile Robot Navigation

May 25, 2024

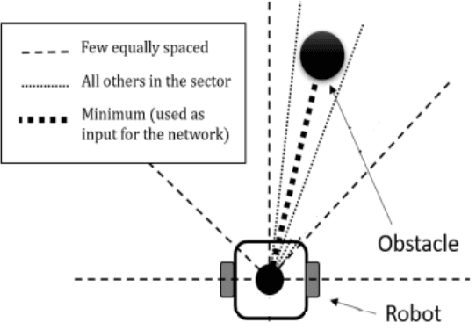

Abstract:Collision-free motion is essential for mobile robots. Most approaches to collision-free and efficient navigation with wheeled robots require parameter tuning by experts to obtain good navigation behavior. This study investigates the application of deep reinforcement learning to train a mobile robot for autonomous navigation in a complex environment. The robot utilizes LiDAR sensor data and a deep neural network to generate control signals guiding it toward a specified target while avoiding obstacles. We employ two reinforcement learning algorithms in the Gazebo simulation environment: Deep Deterministic Policy Gradient and proximal policy optimization. The study introduces an enhanced neural network structure in the Proximal Policy Optimization algorithm to boost performance, accompanied by a well-designed reward function to improve algorithm efficacy. Experimental results conducted in both obstacle and obstacle-free environments underscore the effectiveness of the proposed approach. This research significantly contributes to the advancement of autonomous robotics in complex environments through the application of deep reinforcement learning.

COVID-19 Detection Based on Blood Test Parameters using Various Artificial Intelligence Methods

Apr 02, 2024

Abstract:In 2019, the world faced a new challenge: a COVID-19 disease caused by the novel coronavirus, SARS-CoV-2. The virus rapidly spread across the globe, leading to a high rate of mortality, which prompted health organizations to take measures to control its transmission. Early disease detection is crucial in the treatment process, and computer-based automatic detection systems have been developed to aid in this effort. These systems often rely on artificial intelligence (AI) approaches such as machine learning, neural networks, fuzzy systems, and deep learning to classify diseases. This study aimed to differentiate COVID-19 patients from others using self-categorizing classifiers and employing various AI methods. This study used two datasets: the blood test samples and radiography images. The best results for the blood test samples obtained from San Raphael Hospital, which include two classes of individuals, those with COVID-19 and those with non-COVID diseases, were achieved through the use of the Ensemble method (a combination of a neural network and two machines learning methods). The results showed that this approach for COVID-19 diagnosis is cost-effective and provides results in a shorter amount of time than other methods. The proposed model achieved an accuracy of 94.09% on the dataset used. Secondly, the radiographic images were divided into four classes: normal, viral pneumonia, ground glass opacity, and COVID-19 infection. These were used for segmentation and classification. The lung lobes were extracted from the images and then categorized into specific classes. We achieved an accuracy of 91.1% on the image dataset. Generally, this study highlights the potential of AI in detecting and managing COVID-19 and underscores the importance of continued research and development in this field.

ENet-21: An Optimized light CNN Structure for Lane Detection

Mar 28, 2024Abstract:Lane detection for autonomous vehicles is an important concept, yet it is a challenging issue of driver assistance systems in modern vehicles. The emergence of deep learning leads to significant progress in self-driving cars. Conventional deep learning-based methods handle lane detection problems as a binary segmentation task and determine whether a pixel belongs to a line. These methods rely on the assumption of a fixed number of lanes, which does not always work. This study aims to develop an optimal structure for the lane detection problem, offering a promising solution for driver assistance features in modern vehicles by utilizing a machine learning method consisting of binary segmentation and Affinity Fields that can manage varying numbers of lanes and lane change scenarios. In this approach, the Convolutional Neural Network (CNN), is selected as a feature extractor, and the final output is obtained through clustering of the semantic segmentation and Affinity Field outputs. Our method uses less complex CNN architecture than exi

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge