Seungsang Yun

PeLiCal: Targetless Extrinsic Calibration via Penetrating Lines for RGB-D Cameras with Limited Co-visibility

Apr 23, 2024

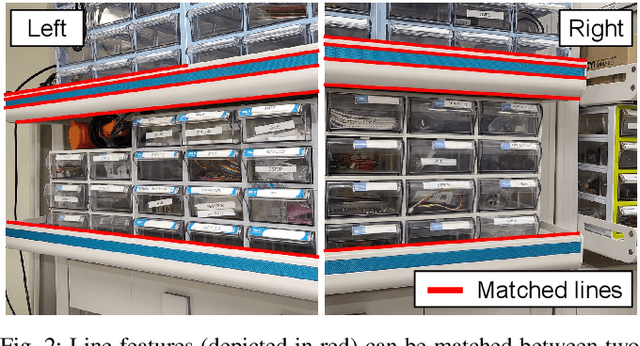

Abstract: RGB-D cameras are crucial in robotic perception, given their ability to produce images augmented with depth data. However, their limited FOV often requires multiple cameras to cover a broader area. In multi-camera RGB-D setups, the goal is typically to reduce camera overlap, optimizing spatial coverage with as few cameras as possible. The extrinsic calibration of these systems introduces additional complexities. Existing methods for extrinsic calibration either require specific tools or depend heavily on the accuracy of camera motion estimation. To address these issues, we present PeLiCal, a novel line-based calibration approach for RGB-D camera systems exhibiting limited overlap. Our method leverages long line features from the surroundings and filters out outliers with a novel convergence voting algorithm, achieving targetless, real-time, and outlier-robust performance compared to existing methods. We open source our implementation at https://github.com/joomeok/PeLiCal.git.

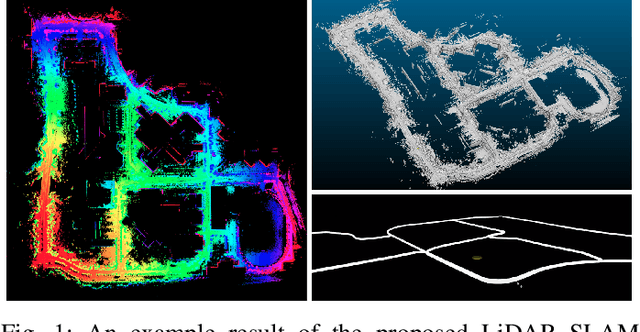

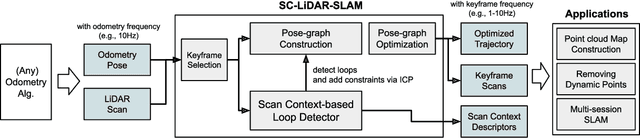

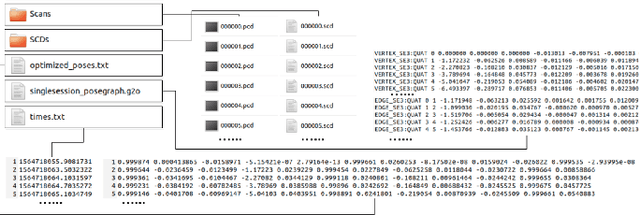

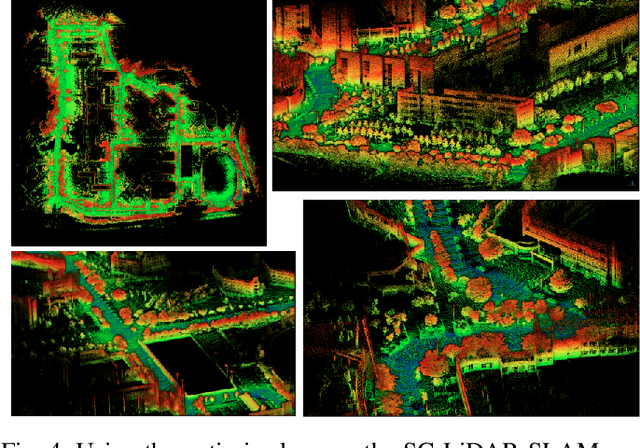

SC-LiDAR-SLAM: a Front-end Agnostic Versatile LiDAR SLAM System

Jan 17, 2022

Abstract: Accurate 3D point cloud map generation is a core task for various robot missions and even for data-driven urban analysis. To this end, light detection and ranging (LiDAR) sensor-based simultaneous localization and mapping (SLAM) technology has been developed. To compose a full SLAM system, many odometry and place recognition methods have been proposed independently in academia. However, they have rarely been integrated, or have been combined so tightly that exchanging (upgrading) either the odometry or the place recognition module demands considerable effort. Because the performance of each module has recently improved considerably, a SLAM system is needed that can integrate them effectively and easily replace them with the latest versions. In this paper, we release such a front-end agnostic LiDAR SLAM system, named SC-LiDAR-SLAM. We built a complete SLAM system by designing it to be modular and successfully integrating it with Scan Context++ and diverse existing open-source LiDAR odometry methods to generate an accurate point cloud map.
