Serge Vasylechko

An Optimized Binning and Probabilistic Slice Sharing Algorithm for Motion Correction in Abdominal DW-MRI

Sep 01, 2024

Abstract: Abdominal diffusion-weighted magnetic resonance imaging (DW-MRI) is a powerful, non-invasive technique for characterizing lesions and facilitating early diagnosis. However, respiratory motion during a scan can degrade image quality. Binning image slices into respiratory phases may reduce motion artifacts, but when the standard binning algorithm is applied to DW-MRI, reconstructed volumes are often incomplete because they lack slices along the superior-inferior axis. Missing slices create black stripes within images, and prolonged scan times are required to generate complete volumes. In this study, we propose a new binning algorithm to minimize missing slices. We acquired free-breathing and shallow-breathing abdominal DW-MRI scans in seven volunteers and used our algorithm to correct for motion in the free-breathing scans. First, we drew the optimal rigid bin partitions in the respiratory signal using a dynamic programming approach, assigning each slice to one bin. We then designed a probabilistic approach for selecting some slices to belong to two bins. Our proposed binning algorithm resulted in significantly fewer missing slices than standard binning (p<1.0e-16), yielding an average reduction of 82.98+/-6.07%. Our algorithm also improved lesion conspicuity and reduced motion artifacts in DW-MR images and Apparent Diffusion Coefficient (ADC) maps. ADC maps created from free-breathing images corrected for motion with our algorithm showed lower intra-subject variability than uncorrected free-breathing and shallow-breathing maps (p<0.001). Additionally, shallow-breathing ADC maps were more consistent with corrected free-breathing maps than with uncorrected free-breathing maps (p<0.01). By reducing missing slices, our proposed binning algorithm increases anatomical accuracy and allows for shorter acquisition times compared to standard binning.
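The abstract does not state the objective or the recurrence used for the rigid partition step, so the following is only a minimal sketch of how a dynamic program could place bin boundaries. It assumes that each acquired slice has a respiratory amplitude and a slice position, that bins are contiguous ranges of amplitude, and that the cost of a bin is the number of slice positions it lacks. The function name `optimal_bins` and all parameters are hypothetical, and the probabilistic slice sharing step is not covered.

```python
import numpy as np

def optimal_bins(amplitude, slice_index, n_slices, n_bins):
    """Assign each sample to one of n_bins contiguous amplitude bins.

    Assumed objective (not taken from the paper): minimize the total
    number of slice positions missing across all bins.
    Requires len(amplitude) >= n_bins.
    """
    order = np.argsort(amplitude, kind="stable")
    slices = np.asarray(slice_index)[order]
    m = len(slices)

    # cost[i, j]: slice positions absent from sorted samples i..j-1
    cost = np.full((m + 1, m + 1), n_slices, dtype=np.int64)
    for i in range(m):
        seen = set()
        for j in range(i + 1, m + 1):
            seen.add(int(slices[j - 1]))
            cost[i, j] = n_slices - len(seen)

    # best[k, j]: minimum cost of splitting the first j samples into k bins
    inf = np.iinfo(np.int64).max // 2
    best = np.full((n_bins + 1, m + 1), inf, dtype=np.int64)
    cut = np.zeros((n_bins + 1, m + 1), dtype=np.int64)
    best[0, 0] = 0
    for k in range(1, n_bins + 1):
        for j in range(k, m + 1):
            for i in range(k - 1, j):
                candidate = best[k - 1, i] + cost[i, j]
                if candidate < best[k, j]:
                    best[k, j] = candidate
                    cut[k, j] = i

    # Backtrack through the stored cut points to recover bin labels
    labels = np.empty(m, dtype=np.int64)
    j = m
    for k in range(n_bins, 0, -1):
        i = int(cut[k, j])
        labels[order[i:j]] = k - 1
        j = i
    return labels, int(best[n_bins, m])

# Toy data: six samples, two slice positions, two bins
amplitude = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
slice_index = [0, 0, 1, 0, 1, 1]
labels, missing = optimal_bins(amplitude, slice_index, n_slices=2, n_bins=2)
```

In this toy case only the split after the third sample leaves both bins with both slice positions, so the sketch returns that split with zero missing slices. The cubic loop is kept for readability and would need optimizing for long respiratory traces.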

Convolution-Free Medical Image Segmentation using Transformers

Feb 26, 2021

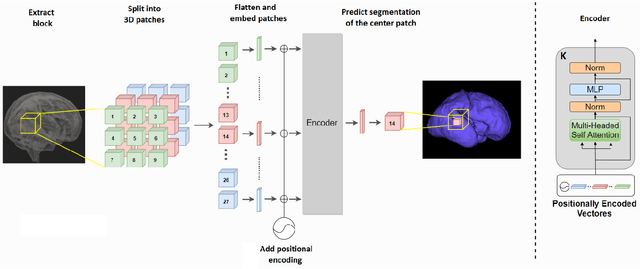

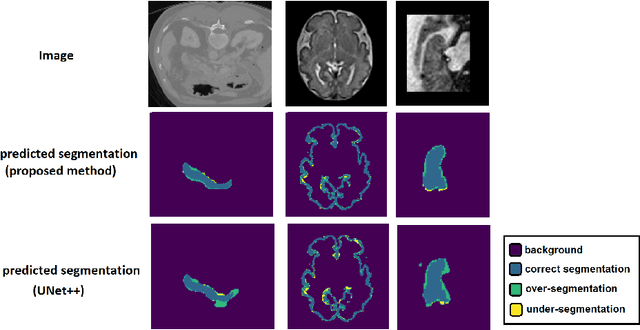

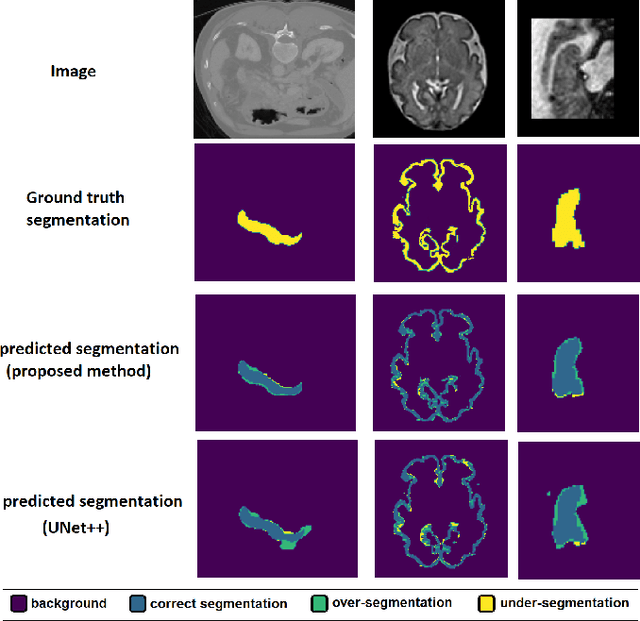

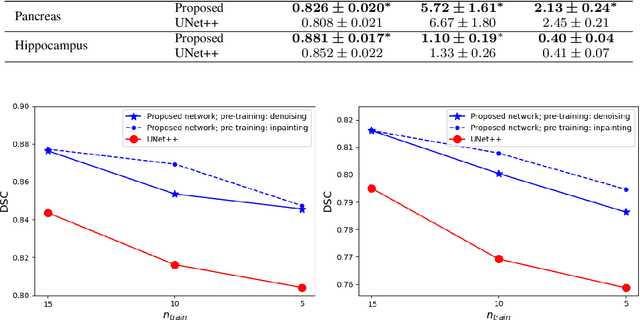

Abstract: Like other applications in computer vision, medical image segmentation has been most successfully addressed using deep learning models that rely on the convolution operation as their main building block. Convolutions enjoy important properties such as sparse interactions, weight sharing, and translation equivariance. These properties give convolutional neural networks (CNNs) a strong and useful inductive bias for vision tasks. In this work we show that a different method, based entirely on self-attention between neighboring image patches and without any convolution operations, can achieve competitive or better results. Given a 3D image block, our network divides it into $n^3$ 3D patches, where $n=3 \text{ or } 5$, and computes a 1D embedding for each patch. The network predicts the segmentation map for the center patch of the block based on the self-attention between these patch embeddings. We show that the proposed model can achieve segmentation accuracies that are better than those of state-of-the-art CNNs on three datasets. We also propose methods for pre-training this model on large corpora of unlabeled images. Our experiments show that, with pre-training, the advantage of our proposed network over CNNs can be significant when labeled training data are scarce.
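As a rough illustration of the patch and attention steps described above, the sketch below splits a cubic block into $n^3$ patches, embeds each patch as a 1D vector, and applies one round of single-head self-attention. The patch size `p`, embedding width `d`, and the random weight matrices are assumptions standing in for learned parameters; the actual network's depth, number of heads, positional encoding, and prediction head are not specified in the abstract and are omitted here.

```python
import numpy as np

def split_into_patches(block, n):
    """Split a cubic block of side n*p into n**3 flattened patches of p**3 voxels."""
    p = block.shape[0] // n
    assert block.shape == (n * p,) * 3, "block must be cubic with side n*p"
    patches = block.reshape(n, p, n, p, n, p).transpose(0, 2, 4, 1, 3, 5)
    return patches.reshape(n ** 3, p ** 3)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over the rows of x."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
n, p, d = 3, 4, 16  # n from the abstract; p and d are hypothetical choices
block = rng.standard_normal((n * p,) * 3)

patches = split_into_patches(block, n)               # shape (27, 64)
w_embed = rng.standard_normal((p ** 3, d)) / np.sqrt(p ** 3)
embeddings = patches @ w_embed                       # one 1D embedding per patch

# Random projections stand in for learned query, key, and value weights
wq, wk, wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
attended, weights = self_attention(embeddings, wq, wk, wv)

center = (n ** 3) // 2           # index of the middle patch for odd n
center_features = attended[center]
```

In a full model, `center_features` would feed a head that predicts the segmentation map for the center patch; that head is left out because the abstract does not describe it.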
