Serafim Rodrigues

Invariants for neural automata

Feb 04, 2023

Abstract: Computational modeling of neurodynamical systems often deploys neural networks and symbolic dynamics. A particular way of combining these approaches within a framework called vector symbolic architectures leads to neural automata. An interesting research direction we have pursued under this framework has been to consider mapping symbolic dynamics onto neurodynamics, represented as neural automata. This representation theory enables us to ask questions such as how the brain implements Turing computations. Specifically, in this representation theory, neural automata result from the assignment of symbols and symbol strings to numbers, known as Gödel encoding. Under this assignment, symbolic computation becomes represented by trajectories of state vectors in a real phase space, which allows for statistical correlation analyses with real-world measurements and experimental data. However, these assignments are usually completely arbitrary. Hence, it makes sense to ask which aspects of the dynamics observed under such a representation are intrinsic to the dynamics and which are not. In this study, we develop a formally rigorous mathematical framework for the investigation of symmetries and invariants of neural automata under different encodings. As a central concept we define patterns of equality for such systems. We consider different macroscopic observables, such as the mean activation level of the neural network, and ask for their invariance properties. Our main result shows that only step functions that are defined over those patterns of equality are invariant under recodings, while the mean activation is not. Our work could be of substantial importance for related regression studies of real-world measurements with neurosymbolic processors, for avoiding confounding results that are dependent on a particular encoding and not intrinsic to the dynamics.
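To make the encoding dependence concrete, the following is a minimal sketch of a Gödel encoding of symbol strings as base-N fractions. The function name `godel_encode`, the three-letter alphabet, and the two symbol-to-integer assignments are hypothetical choices made for illustration; they are not taken from the paper. The same string lands on different numbers under the two assignments, which is the kind of arbitrariness the abstract refers to.

```python
from fractions import Fraction


def godel_encode(string, numbering):
    """Map s_1 s_2 ... s_n to sum_k g(s_k) * N**(-k), a number in [0, 1).

    `numbering` is a bijection g from the alphabet onto 0..N-1
    (an illustrative assumption, not the paper's specific construction).
    """
    base = len(numbering)
    return sum(
        Fraction(numbering[symbol], base ** (k + 1))
        for k, symbol in enumerate(string)
    )


# Two hypothetical, equally valid assignments of the same alphabet.
encoding_a = {"a": 0, "b": 1, "c": 2}
encoding_b = {"a": 2, "b": 0, "c": 1}

x_a = godel_encode("abca", encoding_a)
x_b = godel_encode("abca", encoding_b)

print(x_a, x_b)  # the same string yields two different state values
```

Exact rational arithmetic via `fractions.Fraction` is used here only so that the two encodings can be compared without floating-point rounding.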

Stochastic facilitation in heteroclinic communication channels

Oct 23, 2021

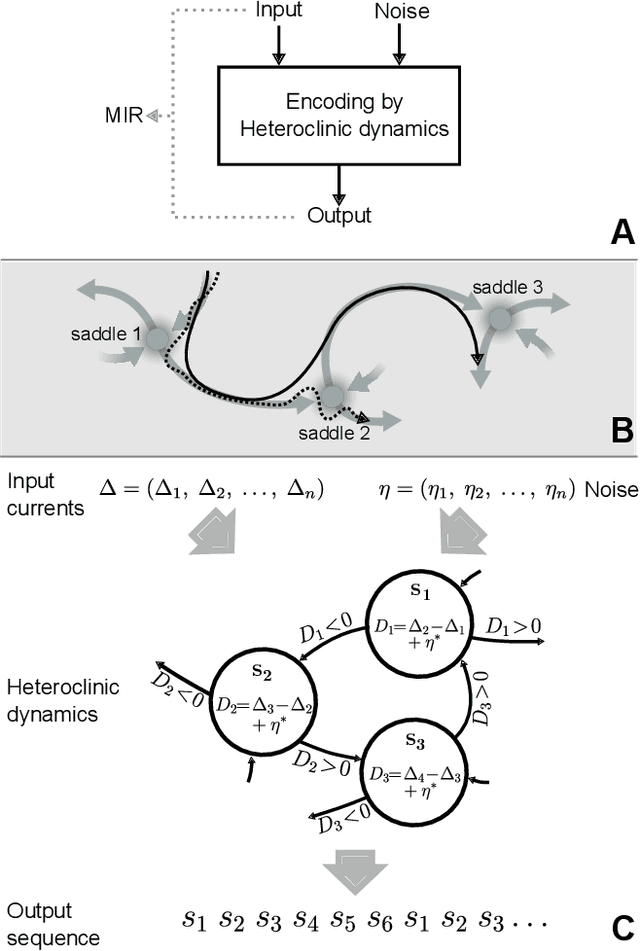

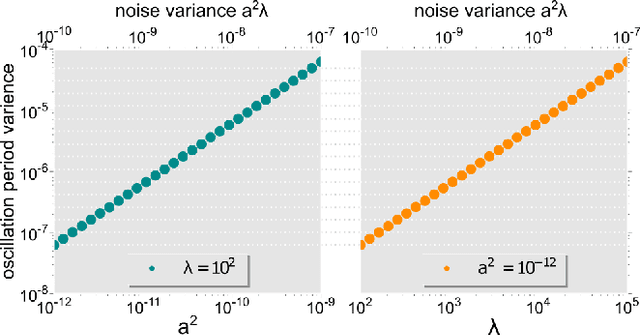

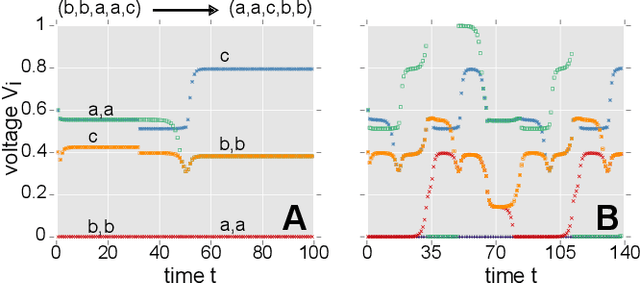

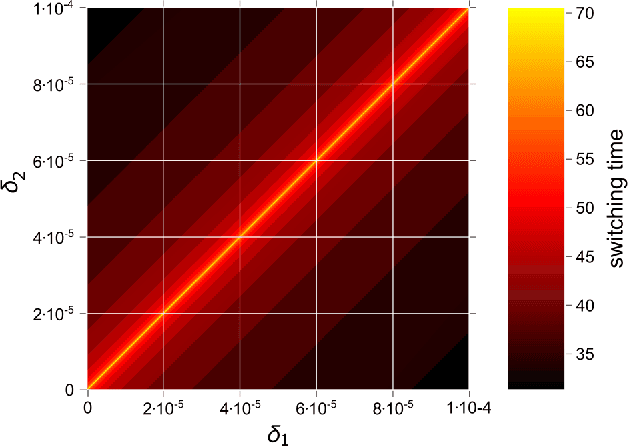

Abstract: Biological neural systems encode and transmit information as patterns of activity tracing complex trajectories in high-dimensional state-spaces, inspiring alternative paradigms of information processing. Heteroclinic networks, naturally emerging in artificial neural systems, are networks of saddles in state-space that provide a transparent approach to generate complex trajectories via controlled switches among interconnected saddles. External signals induce specific switching sequences, thus dynamically encoding inputs as trajectories. Recent works have focused either on computational aspects of heteroclinic networks, i.e. Heteroclinic Computing, or their stochastic properties under noise. Yet, how well such systems may transmit information remains an open question. Here we investigate the information transmission properties of heteroclinic networks, studying them as communication channels. Choosing a tractable but representative system exhibiting a heteroclinic network, we investigate the mutual information rate (MIR) between input signals and the resulting sequences of states as the level of noise varies. Intriguingly, MIR does not decrease monotonically with increasing noise. Intermediate noise levels indeed maximize the information transmission capacity by promoting an increased yet controlled exploration of the underlying network of states. Complementing standard stochastic resonance, these results highlight the constructive effect of stochastic facilitation (i.e. noise-enhanced information transfer) on heteroclinic communication channels and possibly on more general dynamical systems exhibiting complex trajectories in state-space.

A modular architecture for transparent computation in Recurrent Neural Networks

Sep 07, 2016

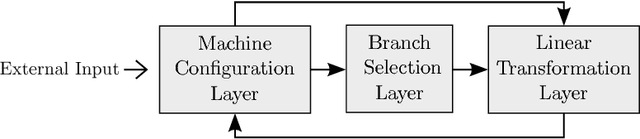

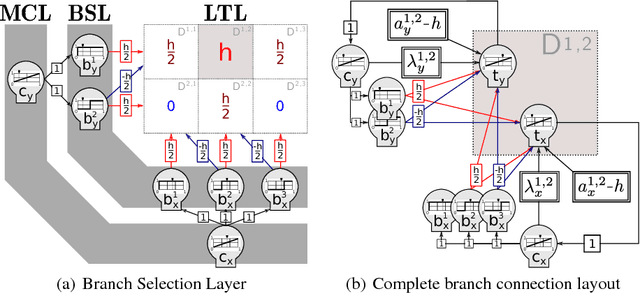

Abstract: Computation is classically studied in terms of automata, formal languages and algorithms; yet, the relation between neural dynamics and symbolic representations and operations is still unclear in traditional eliminative connectionism. Therefore, we suggest a unique perspective on this central issue, which we refer to as transparent connectionism, by proposing accounts of how symbolic computation can be implemented in neural substrates. In this study we first introduce a new model of dynamics on a symbolic space, the versatile shift, showing that it supports the real-time simulation of a range of automata. We then show that the Gödelization of versatile shifts defines nonlinear dynamical automata, dynamical systems evolving on a vectorial space. Finally, we present a mapping between nonlinear dynamical automata and recurrent artificial neural networks. The mapping defines an architecture characterized by its granular modularity, where data, symbolic operations and their control are not only distinguishable in activation space, but also spatially localizable in the network itself, while maintaining a distributed encoding of symbolic representations. The resulting networks simulate automata in real-time and are programmed directly, in the absence of network training. To discuss the unique characteristics of the architecture and their consequences, we present two examples: i) the design of a Central Pattern Generator from a finite-state locomotive controller, and ii) the creation of a network simulating a system of interactive automata that supports the parsing of garden-path sentences as investigated in psycholinguistic experiments.

Turing Computation with Recurrent Artificial Neural Networks

Nov 04, 2015

Abstract: We improve on the results of Siegelmann & Sontag (1995) by providing a novel and parsimonious constructive mapping between Turing Machines and Recurrent Artificial Neural Networks (R-ANNs), based on recent developments of Nonlinear Dynamical Automata (NDAs). The architecture of the resulting R-ANNs is simple and elegant, stemming from its transparent relation with the underlying NDAs. These characteristics yield promise for developments in machine learning methods and symbolic computation with continuous-time dynamical systems. A framework is provided to directly program the R-ANNs from Turing Machine descriptions, in the absence of network training. At the same time, the network can potentially be trained to perform algorithmic tasks, with exciting possibilities in the integration of approaches akin to Google DeepMind's Neural Turing Machines.
