Sebastian Pasewaldt

Low-light Image and Video Enhancement via Selective Manipulation of Chromaticity

Mar 09, 2022

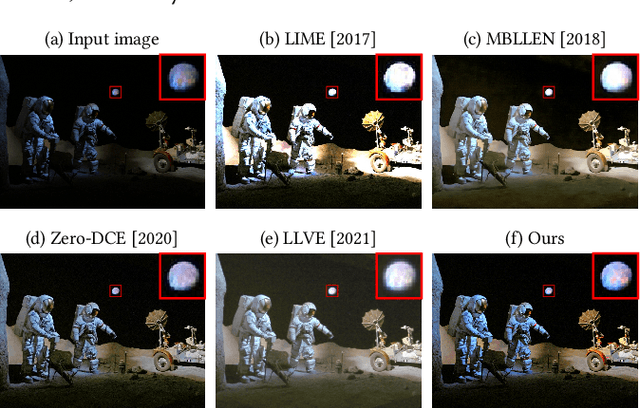

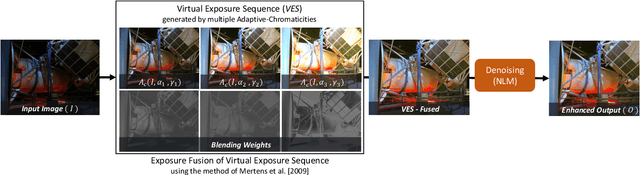

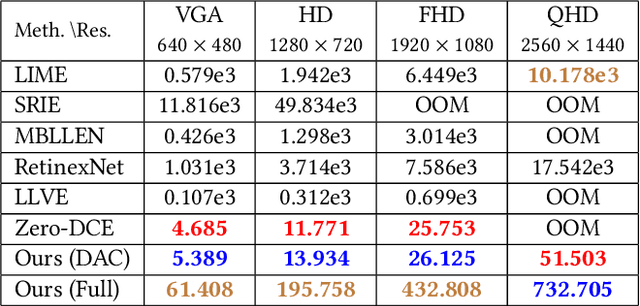

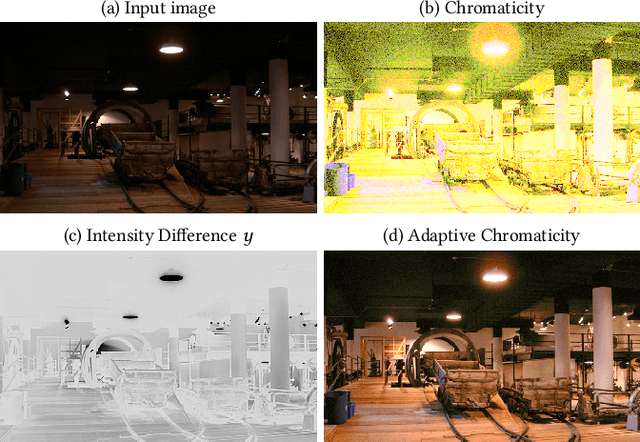

Abstract: Image acquisition in low-light conditions suffers from poor quality and significant degradation in visual aesthetics. This affects both the visual perception of the acquired image and the performance of computer vision and image processing algorithms applied after acquisition. Videos are more challenging still, because the additional temporal domain requires quality to be preserved in a temporally coherent manner. We present a simple yet effective approach for low-light image and video enhancement. To this end, we introduce "Adaptive Chromaticity", which refers to an adaptive computation of image chromaticity. This adaptivity allows us to avoid the costly step of decomposing the low-light image into illumination and reflectance, which many existing techniques employ. All stages in our method consist only of point-based operations and high-pass or low-pass filtering, thereby ensuring that the amount of temporal incoherence is negligible when the method is applied to videos on a per-frame basis. Our results on standard low-light image datasets show the efficacy of our algorithm and its qualitative and quantitative superiority over several state-of-the-art techniques. For videos captured in the wild, we perform a user study to demonstrate the preference for our method in comparison to state-of-the-art approaches.
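The abstract does not define how the adaptive computation works, so the sketch below is only an illustration of the underlying notion: it computes the standard, non-adaptive normalized-RGB chromaticity (each channel divided by the per-pixel channel sum), which is not the authors' method. The function name `chromaticity` and the stabilizer `eps` are assumptions made for this example. It shows two properties that the abstract relies on: chromaticity is largely independent of brightness, and a point-based operation gives the same result for a frame whether it is processed alone or as part of a video.

```python
import numpy as np

def chromaticity(rgb, eps=1e-6):
    """Standard normalized-RGB chromaticity (NOT the paper's adaptive variant).

    Each pixel's channels are divided by that pixel's channel sum, so the
    result depends only on the colour ratio, not on overall brightness.
    `eps` is an assumed stabilizer that avoids division by zero on black pixels.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    total = rgb.sum(axis=-1, keepdims=True)
    return rgb / (total + eps)

# A dim pixel and a 10x brighter pixel with the same colour ratio.
dark = np.array([[[0.02, 0.04, 0.02]]])
bright = dark * 10.0
c_dark = chromaticity(dark)
c_bright = chromaticity(bright)

# Point-based operation on a small synthetic video (frames, height, width, channels).
rng = np.random.default_rng(0)
video = rng.random((4, 8, 8, 3))
whole = chromaticity(video)        # processed as one array
single = chromaticity(video[0])    # first frame processed on its own
```

Because each output pixel depends only on the input pixel at the same position, `whole[0]` and `single` agree, which is the sense in which a per-frame point operation introduces no frame-to-frame inconsistency of its own.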
