Sebastian Lerch

Probabilistic measures afford fair comparisons of AIWP and NWP model output

Jun 04, 2025

Abstract:We introduce a new measure for fair and meaningful comparisons of single-valued output from artificial intelligence based weather prediction (AIWP) and numerical weather prediction (NWP) models, called potential continuous ranked probability score (PC). In a nutshell, we subject the deterministic backbone of physics-based and data-driven models post hoc to the same statistical postprocessing technique, namely, isotonic distributional regression (IDR). Then we find PC as the mean continuous ranked probability score (CRPS) of the postprocessed probabilistic forecasts. The nonnegative PC measure quantifies potential predictive performance and is invariant under strictly increasing transformations of the model output. PC attains its most desirable value of zero if, and only if, the weather outcome Y is a fixed, non-decreasing function of the model output X. The PC measure is recorded in the unit of the outcome, has an upper bound of one half times the mean absolute difference between outcomes, and serves as a proxy for the mean CRPS of real-time, operational probabilistic products. When applied to WeatherBench 2 data, our approach demonstrates that the data-driven GraphCast model outperforms the leading, physics-based European Centre for Medium-Range Weather Forecasts (ECMWF) high-resolution (HRES) model. Furthermore, the PC measure for the HRES model aligns exceptionally well with the mean CRPS of the operational ECMWF ensemble. Across application domains, our approach affords comparisons of single-valued forecasts in settings where the pre-specification of a loss function -- which is the usual, and principally superior, procedure in forecast contests, administrative, and benchmark settings -- places competitors on unequal footings.
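The recipe in this abstract (fit IDR to pairs of model output and outcome, then average the CRPS of the fitted distributions) can be illustrated with a minimal NumPy sketch. It assumes a single real-valued model output and an in-sample evaluation, and it computes the IDR fit threshold by threshold with the pool-adjacent-violators algorithm. The function names `pava` and `potential_crps` are hypothetical and are not taken from the paper or from any package.

```python
import numpy as np

def pava(values, weights):
    """Weighted least-squares fit of a non-decreasing sequence
    (pool-adjacent-violators algorithm)."""
    blocks = []  # each block holds [mean, weight, number of pooled entries]
    for v, w in zip(values, weights):
        blocks.append([float(v), float(w), 1])
        # Merge neighbouring blocks while they violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            blocks.append([(m1 * w1 + m2 * w2) / (w1 + w2), w1 + w2, c1 + c2])
    return np.concatenate([np.full(c, m) for m, _, c in blocks])

def potential_crps(x, y):
    """In-sample mean CRPS of IDR-type conditional CDFs of y given x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Pool tied model outputs so that equal x share one fitted CDF.
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    z = np.unique(y)  # sorted thresholds where the fitted CDFs can jump
    crps = np.zeros(len(y))
    for k in range(len(z) - 1):
        ind = (y <= z[k]).astype(float)
        means = np.bincount(inverse, weights=ind) / counts
        # P(Y <= z | x) must be non-increasing in x, so fit the negated values.
        cdf = -pava(-means, counts)[inverse]
        # CRPS integrates the squared CDF error; both terms are constant
        # on the interval [z_k, z_{k+1}).
        crps += (cdf - ind) ** 2 * (z[k + 1] - z[k])
    return crps.mean()

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=200)
    y = x + 0.5 * rng.normal(size=200)
    print(potential_crps(x, y), potential_crps(np.exp(x), y))
```

Because only the ordering of `x` enters the fit, replacing `x` by `np.exp(x)` leaves the result unchanged, which mirrors the invariance property stated in the abstract.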

Learning low-dimensional representations of ensemble forecast fields using autoencoder-based methods

Feb 06, 2025

Abstract:Large-scale numerical simulations often produce high-dimensional gridded data that is challenging to process for downstream applications. A prime example is numerical weather prediction, where atmospheric processes are modeled using discrete gridded representations of the physical variables and dynamics. Uncertainties are assessed by running the simulations multiple times, yielding ensembles of simulated fields as a high-dimensional stochastic representation of the forecast distribution. The high dimensionality and large volume of ensemble datasets pose major computing challenges for subsequent forecasting stages. Data-driven dimensionality reduction techniques could help to reduce the data volume before further processing by learning meaningful and compact representations. However, existing dimensionality reduction methods are typically designed for deterministic and single-valued inputs, and thus cannot handle ensemble data from multiple randomized simulations. In this study, we propose novel dimensionality reduction approaches specifically tailored to the format of ensemble forecast fields. We present two alternative frameworks, which yield low-dimensional representations of ensemble forecasts while respecting their probabilistic character. The first approach derives a distribution-based representation of an input ensemble by applying standard dimensionality reduction techniques in a member-by-member fashion and merging the member representations into a joint parametric distribution model. The second approach achieves a similar representation by encoding all members jointly using a tailored variational autoencoder. We evaluate and compare both approaches in a case study using 10 years of temperature and wind speed forecasts over Europe. The approaches preserve key spatial and statistical characteristics of the ensemble and enable probabilistic reconstructions of the forecast fields.
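The first approach described above (encode each member separately, then merge the codes into a parametric distribution) might look roughly like the following sketch. PCA as the reduction technique and a multivariate Gaussian as the distribution model are assumptions made here for illustration; the abstract does not specify either choice, and all function names are hypothetical.

```python
import numpy as np

def fit_pca(fields, n_components):
    """Fit PCA on flattened fields of shape (n_samples, n_grid)."""
    mean = fields.mean(axis=0)
    _, _, vt = np.linalg.svd(fields - mean, full_matrices=False)
    return mean, vt[:n_components]  # mean field and principal axes

def encode_ensemble(ensemble, mean, axes):
    """Encode members one by one, then summarise the codes by a Gaussian."""
    codes = (ensemble - mean) @ axes.T  # shape (n_members, n_components)
    return codes.mean(axis=0), np.cov(codes, rowvar=False)

def sample_reconstructions(mu, cov, mean, axes, n_samples, rng):
    """Draw latent codes from the Gaussian and map them back to grid space."""
    codes = rng.multivariate_normal(mu, cov, size=n_samples)
    return mean + codes @ axes

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    training_fields = rng.normal(size=(500, 64))  # toy stand-in for forecast fields
    mean, axes = fit_pca(training_fields, n_components=3)
    ensemble = rng.normal(size=(20, 64))          # toy 20-member ensemble
    mu, cov = encode_ensemble(ensemble, mean, axes)
    fields = sample_reconstructions(mu, cov, mean, axes, n_samples=5, rng=rng)
    print(mu.shape, cov.shape, fields.shape)
```

The ensemble is then stored as a mean vector and a covariance matrix in latent space instead of a full set of gridded fields, and new fields can be sampled from that compact representation.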

Improving Model Chain Approaches for Probabilistic Solar Energy Forecasting through Post-processing and Machine Learning

Jun 06, 2024

Abstract:Weather forecasts from numerical weather prediction models play a central role in solar energy forecasting, where a cascade of physics-based models is used in a model chain approach to convert forecasts of solar irradiance to solar power production, using additional weather variables as auxiliary information. Ensemble weather forecasts aim to quantify uncertainty in the future development of the weather, and can be used to propagate this uncertainty through the model chain to generate probabilistic solar energy predictions. However, ensemble prediction systems are known to exhibit systematic errors, and thus require post-processing to obtain accurate and reliable probabilistic forecasts. The overarching aim of our study is to systematically evaluate different strategies to apply post-processing methods in model chain approaches: not applying any post-processing at all; post-processing only the irradiance predictions before the conversion; post-processing only the solar power predictions obtained from the model chain; or applying post-processing in both steps. In a case study based on a benchmark dataset for the Jacumba solar plant in the U.S., we develop statistical and machine learning methods for post-processing ensemble predictions of global horizontal irradiance and solar power generation. Further, we propose a neural network-based model for direct solar power forecasting that bypasses the model chain. Our results indicate that post-processing substantially improves the solar power generation forecasts, in particular when post-processing is applied to the power predictions. The machine learning methods for post-processing yield slightly better probabilistic forecasts, and the direct forecasting approach performs comparably to the post-processing strategies.

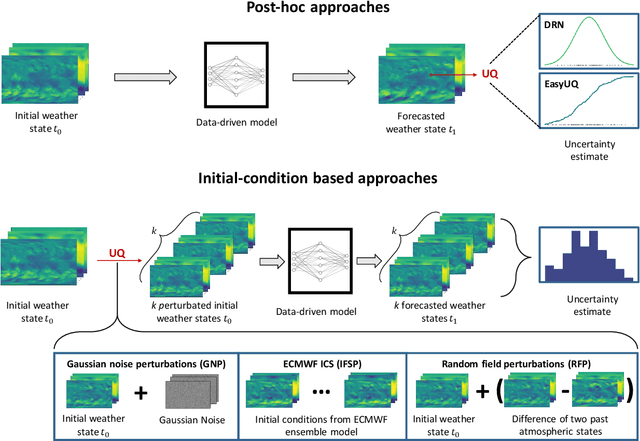

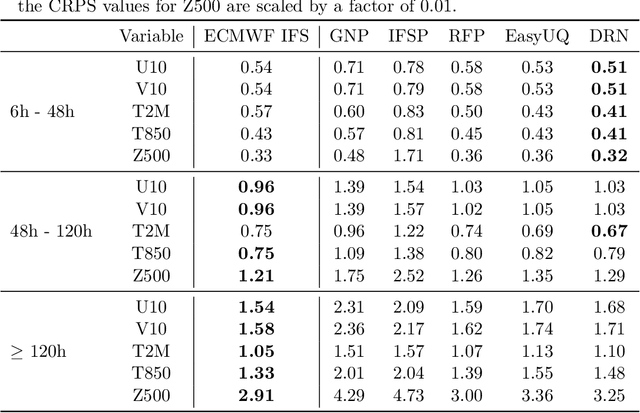

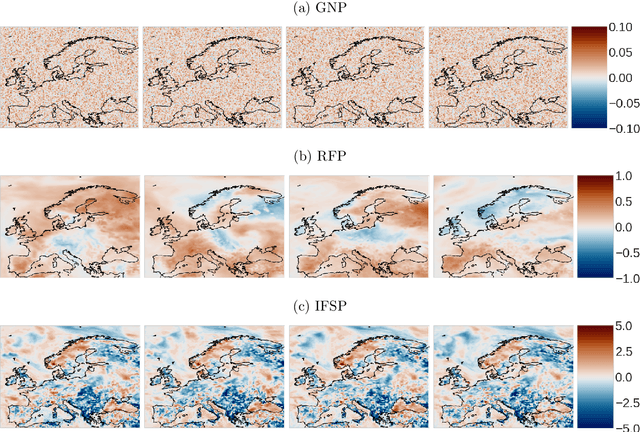

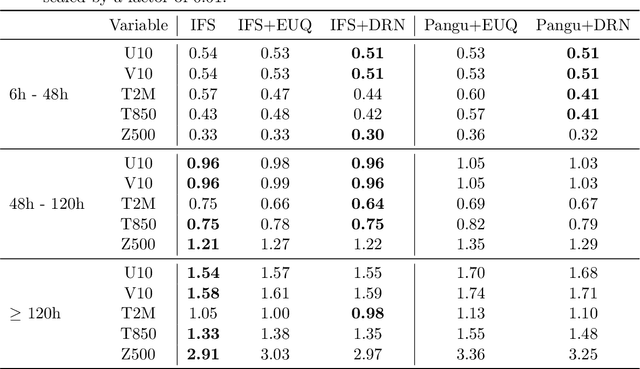

Uncertainty quantification for data-driven weather models

Mar 20, 2024

Abstract:Artificial intelligence (AI)-based data-driven weather forecasting models have experienced rapid progress over the last years. Recent studies, with models trained on reanalysis data, achieve impressive results and demonstrate substantial improvements over state-of-the-art physics-based numerical weather prediction models across a range of variables and evaluation metrics. Beyond improved predictions, the main advantages of data-driven weather models are their substantially lower computational costs and the faster generation of forecasts, once a model has been trained. However, most efforts in data-driven weather forecasting have been limited to deterministic, point-valued predictions, making it impossible to quantify forecast uncertainties, which is crucial in research and for optimal decision making in applications. Our overarching aim is to systematically study and compare uncertainty quantification methods to generate probabilistic weather forecasts from a state-of-the-art deterministic data-driven weather model, Pangu-Weather. Specifically, we compare approaches for quantifying forecast uncertainty based on generating ensemble forecasts via perturbations to the initial conditions, with the use of statistical and machine learning methods for post-hoc uncertainty quantification. In a case study on medium-range forecasts of selected weather variables over Europe, the probabilistic forecasts obtained by using the Pangu-Weather model in concert with uncertainty quantification methods show promising results and provide improvements over ensemble forecasts from the physics-based ensemble weather model of the European Centre for Medium-Range Weather Forecasts for lead times of up to 5 days.

Postprocessing of Ensemble Weather Forecasts Using Permutation-invariant Neural Networks

Sep 08, 2023

Abstract:Statistical postprocessing is used to translate ensembles of raw numerical weather forecasts into reliable probabilistic forecast distributions. In this study, we examine the use of permutation-invariant neural networks for this task. In contrast to previous approaches, which often operate on ensemble summary statistics and dismiss details of the ensemble distribution, we propose networks which treat forecast ensembles as a set of unordered member forecasts and learn link functions that are by design invariant to permutations of the member ordering. We evaluate the quality of the obtained forecast distributions in terms of calibration and sharpness, and compare the models against classical and neural network-based benchmark methods. In case studies addressing the postprocessing of surface temperature and wind gust forecasts, we demonstrate state-of-the-art prediction quality. To deepen the understanding of the learned inference process, we further propose a permutation-based importance analysis for ensemble-valued predictors, which highlights specific aspects of the ensemble forecast that are considered important by the trained postprocessing models. Our results suggest that most of the relevant information is contained in few ensemble-internal degrees of freedom, which may impact the design of future ensemble forecasting and postprocessing systems.
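One common way to obtain invariance to the member ordering by design is to apply the same encoder to every member and pool the results with a symmetric operation such as the mean. The NumPy sketch below shows this idea with untrained random weights. The architecture, the layer sizes, and the Gaussian output parameters are illustrative assumptions and not necessarily the networks studied in the paper.

```python
import numpy as np

def init_params(rng, n_features, n_hidden):
    """Random (untrained) weights for a tiny set-based network."""
    return {
        "W_enc": rng.normal(scale=0.5, size=(n_features, n_hidden)),
        "b_enc": np.zeros(n_hidden),
        "W_out": rng.normal(scale=0.5, size=(n_hidden, 2)),
        "b_out": np.zeros(2),
    }

def forward(params, ensemble):
    """Map an ensemble of shape (n_members, n_features) to (mu, sigma)."""
    # The same encoder is applied to every member independently.
    hidden = np.tanh(ensemble @ params["W_enc"] + params["b_enc"])
    # Mean pooling is symmetric, so the member order cannot matter.
    pooled = hidden.mean(axis=0)
    out = pooled @ params["W_out"] + params["b_out"]
    mu = out[0]
    sigma = np.log1p(np.exp(out[1]))  # softplus keeps the scale positive
    return mu, sigma

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = init_params(rng, n_features=4, n_hidden=8)
    ensemble = rng.normal(size=(10, 4))
    shuffled = ensemble[rng.permutation(10)]
    print(forward(params, ensemble), forward(params, shuffled))
```

Both calls return the same location and scale up to floating-point rounding, since shuffling the rows only reorders the terms of the pooled mean.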

Convolutional autoencoders for spatially-informed ensemble post-processing

Apr 08, 2022

Abstract:Ensemble weather predictions typically show systematic errors that have to be corrected via post-processing. Even state-of-the-art post-processing methods based on neural networks often solely rely on location-specific predictors that require an interpolation of the physical weather model's spatial forecast fields to the target locations. However, useful predictability information contained in large-scale spatial structures within the input fields is potentially lost in this interpolation step. Therefore, we propose the use of convolutional autoencoders to learn compact representations of spatial input fields which can then be used to augment location-specific information as additional inputs to post-processing models. The benefits of including this spatial information are demonstrated in a case study of 2-m temperature forecasts at surface stations in Germany.

Aggregating distribution forecasts from deep ensembles

Apr 05, 2022

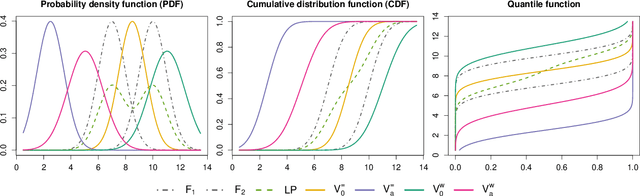

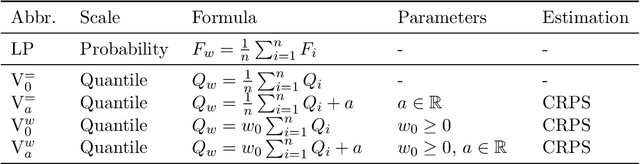

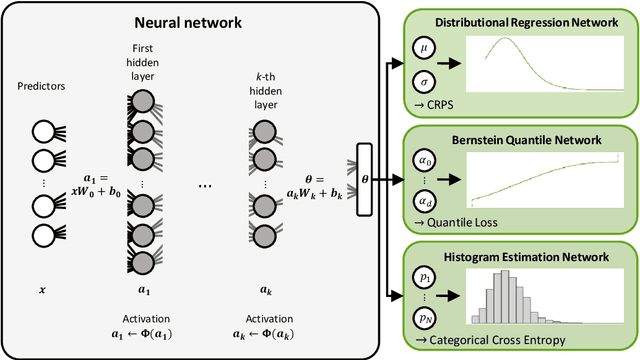

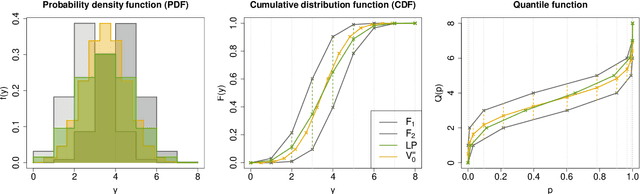

Abstract:The importance of accurately quantifying forecast uncertainty has motivated much recent research on probabilistic forecasting. In particular, a variety of deep learning approaches has been proposed, with forecast distributions obtained as output of neural networks. These neural network-based methods are often used in the form of an ensemble based on multiple model runs from different random initializations, resulting in a collection of forecast distributions that need to be aggregated into a final probabilistic prediction. With the aim of consolidating findings from the machine learning literature on ensemble methods and the statistical literature on forecast combination, we address the question of how to aggregate distribution forecasts based on such deep ensembles. Using theoretical arguments, simulation experiments and a case study on wind gust forecasting, we systematically compare probability- and quantile-based aggregation methods for three neural network-based approaches with different forecast distribution types as output. Our results show that combining forecast distributions can substantially improve the predictive performance. We propose a general quantile aggregation framework for deep ensembles that shows superior performance compared to a linear combination of the forecast densities. Finally, we investigate the effects of the ensemble size and derive recommendations for aggregating distribution forecasts from deep ensembles in practice.
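The difference between probability-based and quantile-based aggregation can be made concrete for Gaussian forecast distributions. The sketch below uses equal weights, which is a simplifying assumption and not the general framework proposed in the paper; the function names are hypothetical. The linear pool averages the CDFs and yields a mixture, while quantile averaging averages the quantile functions, which for Gaussians gives again a Gaussian whose mean and standard deviation are the averages of the individual ones.

```python
from statistics import NormalDist

import numpy as np

def linear_pool_cdf(mus, sigmas, z):
    """Probability-based aggregation: equal-weight average of the CDFs at z."""
    return float(np.mean([NormalDist(m, s).cdf(z) for m, s in zip(mus, sigmas)]))

def quantile_average(mus, sigmas, p):
    """Quantile-based aggregation: equal-weight average of the p-quantiles."""
    return float(np.mean([NormalDist(m, s).inv_cdf(p) for m, s in zip(mus, sigmas)]))

if __name__ == "__main__":
    mus, sigmas = [0.0, 2.0], [1.0, 1.0]  # two toy network runs
    for p in (0.1, 0.5, 0.9):
        q = quantile_average(mus, sigmas, p)
        # Evaluate the linear pool at the averaged quantile to compare levels.
        print(p, q, linear_pool_cdf(mus, sigmas, q))
```

In this toy case the linear pool places less than 90% of its mass below the averaged 0.9-quantile, which illustrates that the mixture is more dispersed than the quantile-averaged forecast.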

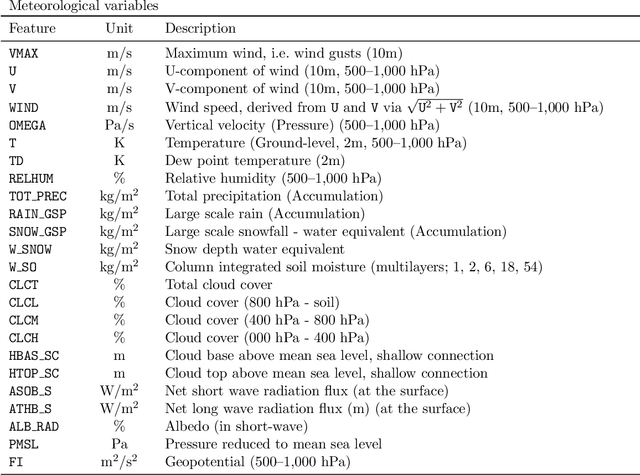

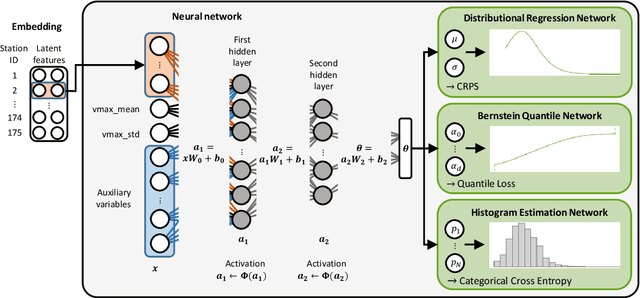

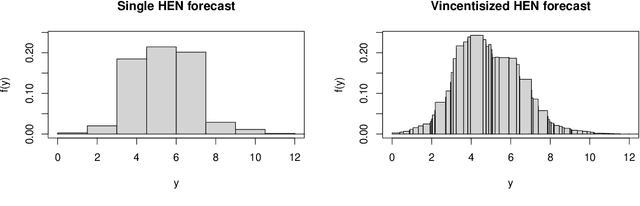

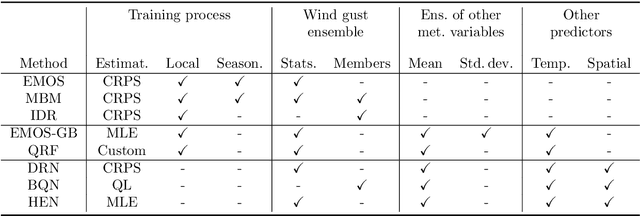

Machine learning methods for postprocessing ensemble forecasts of wind gusts: A systematic comparison

Jun 17, 2021

Abstract:Postprocessing ensemble weather predictions to correct systematic errors has become a standard practice in research and operations. However, only few recent studies have focused on ensemble postprocessing of wind gust forecasts, despite its importance for severe weather warnings. Here, we provide a comprehensive review and systematic comparison of eight statistical and machine learning methods for probabilistic wind gust forecasting via ensemble postprocessing, which can be divided into three groups: state-of-the-art postprocessing techniques from statistics (ensemble model output statistics (EMOS), member-by-member postprocessing, isotonic distributional regression), established machine learning methods (gradient-boosting extended EMOS, quantile regression forests) and neural network-based approaches (distributional regression network, Bernstein quantile network, histogram estimation network). The methods are systematically compared using six years of data from a high-resolution, convection-permitting ensemble prediction system that was run operationally at the German weather service, and hourly observations at 175 surface weather stations in Germany. While all postprocessing methods yield calibrated forecasts and are able to correct the systematic errors of the raw ensemble predictions, incorporating information from additional meteorological predictor variables beyond wind gusts leads to significant improvements in forecast skill. In particular, we propose a flexible framework of locally adaptive neural networks with different probabilistic forecast types as output, which not only significantly outperform all benchmark postprocessing methods but also learn physically consistent relations associated with the diurnal cycle, especially the evening transition of the planetary boundary layer.

Machine learning for total cloud cover prediction

Jan 16, 2020

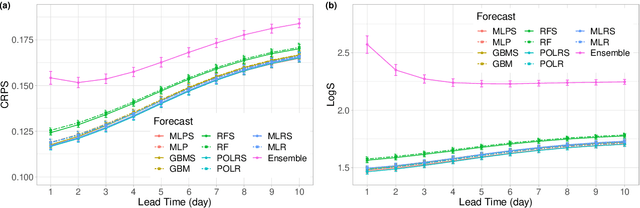

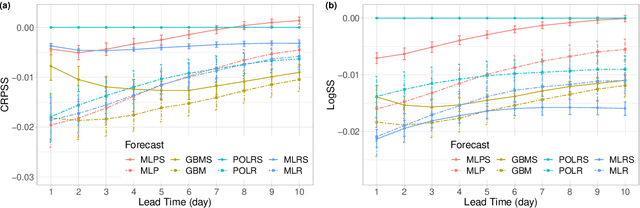

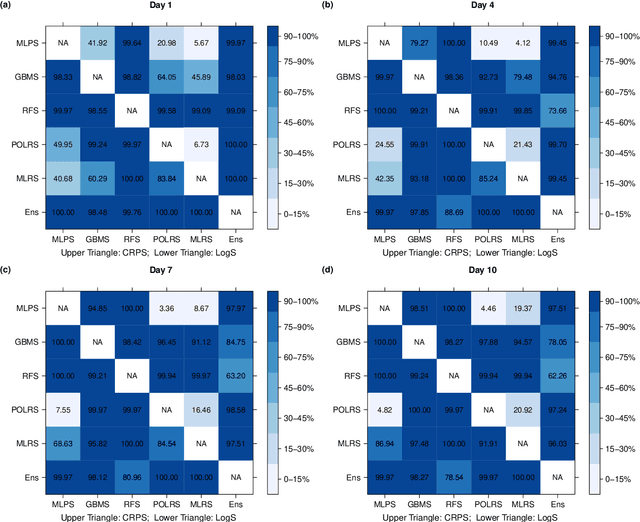

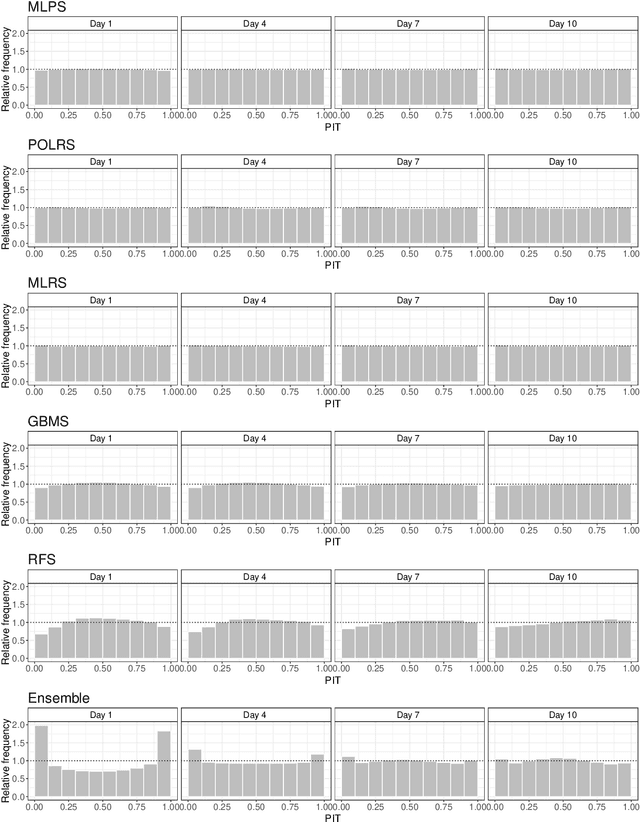

Abstract:Accurate and reliable forecasting of total cloud cover (TCC) is vital for many areas such as astronomy, energy demand and production, or agriculture. Most meteorological centres issue ensemble forecasts of TCC; however, these forecasts are often uncalibrated and exhibit worse forecast skill than ensemble forecasts of other weather variables. Hence, some form of post-processing is strongly required to improve predictive performance. As TCC observations are usually reported on a discrete scale taking just nine different values called oktas, statistical calibration of TCC ensemble forecasts can be considered a classification problem with outputs given by the probabilities of the oktas. This is a classical area where machine learning methods are applied. We investigate the performance of post-processing using multilayer perceptron (MLP) neural networks, gradient boosting machines (GBM) and random forest (RF) methods. Based on the European Centre for Medium-Range Weather Forecasts global TCC ensemble forecasts for 2002-2014, we compare these approaches with the proportional odds logistic regression (POLR) and multiclass logistic regression (MLR) models, as well as the raw TCC ensemble forecasts. We further assess whether improvements in forecast skill can be obtained by incorporating ensemble forecasts of precipitation as an additional predictor. Compared to the raw ensemble, all calibration methods result in a significant improvement in forecast skill. RF models provide the smallest increase in predictive performance, while MLP, POLR and GBM approaches perform best. The use of precipitation forecast data leads to further improvements in forecast skill, and except for very short lead times, the extended MLP model shows the best overall performance.

Neural networks for post-processing ensemble weather forecasts

May 23, 2018

Abstract:Ensemble weather predictions require statistical post-processing of systematic errors to obtain reliable and accurate probabilistic forecasts. Traditionally, this is accomplished with distributional regression models in which the parameters of a predictive distribution are estimated from a training period. We propose a flexible alternative based on neural networks that can incorporate nonlinear relationships between arbitrary predictor variables and forecast distribution parameters that are automatically learned in a data-driven way rather than requiring pre-specified link functions. In a case study of 2-meter temperature forecasts at surface stations in Germany, the neural network approach significantly outperforms benchmark post-processing methods while being computationally more affordable. Key components of this improvement are the use of auxiliary predictor variables and station-specific information with the help of embeddings. Furthermore, the trained neural network can be used to gain insight into the importance of meteorological variables, thereby challenging the notion of neural networks as uninterpretable black boxes. Our approach can easily be extended to other statistical post-processing and forecasting problems. We anticipate that recent advances in deep learning combined with the ever-increasing amounts of model and observation data will transform the post-processing of numerical weather forecasts in the coming decade.
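As an illustration of the traditional distributional regression setup mentioned in this abstract, the sketch below links the parameters of a Gaussian predictive distribution to the ensemble mean and variance and scores the result with the closed-form CRPS of a normal distribution. The linear link functions and the coefficient values `a`, `b`, `c`, `d` are hypothetical placeholders; in practice such coefficients would be estimated from a training period, for example by minimizing the mean CRPS.

```python
import math

def crps_normal(mu, sigma, y):
    """Closed-form CRPS of a N(mu, sigma^2) forecast for the outcome y."""
    z = (y - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))

def gaussian_forecast(ens_mean, ens_var, a, b, c, d):
    """Pre-specified links: mean linear in the ensemble mean,
    variance linear in the ensemble variance (c, d >= 0 assumed)."""
    mu = a + b * ens_mean
    sigma = math.sqrt(c + d * ens_var)
    return mu, sigma

if __name__ == "__main__":
    # Hypothetical coefficients, not fitted to any data.
    mu, sigma = gaussian_forecast(ens_mean=12.0, ens_var=1.5, a=0.3, b=0.98, c=0.5, d=0.8)
    print(mu, sigma, crps_normal(mu, sigma, y=11.2))
```

A neural network approach of the kind proposed in the abstract would replace the fixed links in `gaussian_forecast` with a learned mapping from arbitrary predictors to `mu` and `sigma`, while the CRPS can still serve as the loss function.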
