Sebastian Berns

Towards Mode Balancing of Generative Models via Diversity Weights

Apr 24, 2023

Abstract: Large data-driven image models are extensively used to support creative and artistic work. Under the currently predominant distribution-fitting paradigm, a dataset is treated as ground truth to be approximated as closely as possible. Yet, many creative applications demand a diverse range of output, and creators often strive to actively diverge from a given data distribution. We argue that an adjustment of modelling objectives, from pure mode coverage towards mode balancing, is necessary to accommodate the goal of higher output diversity. We present diversity weights, a training scheme that increases a model's output diversity by balancing the modes in the training dataset. First experiments in a controlled setting demonstrate the potential of our method. We conclude by contextualising our contribution to diversity within the wider debate on bias, fairness and representation in generative machine learning.

Active Divergence with Generative Deep Learning -- A Survey and Taxonomy

Jul 12, 2021

Abstract: Generative deep learning systems offer powerful tools for artefact generation, given their ability to model distributions of data and generate high-fidelity results. In the context of computational creativity, however, a major shortcoming is that they are unable to explicitly diverge from the training data in creative ways and are limited to fitting the target data distribution. To address these limitations, there have been a growing number of approaches for optimising, hacking and rewriting these models in order to actively diverge from the training data. We present a taxonomy and comprehensive survey of the state of the art of active divergence techniques, highlighting the potential for computational creativity researchers to advance these methods and use deep generative models in truly creative systems.

Automating Generative Deep Learning for Artistic Purposes: Challenges and Opportunities

Jul 05, 2021

Abstract: We present a framework for automating generative deep learning with a specific focus on artistic applications. The framework provides opportunities to hand over creative responsibilities to a generative system as targets for automation. For the definition of targets, we adopt core concepts from automated machine learning and an analysis of generative deep learning pipelines, both in standard and artistic settings. To motivate the framework, we argue that automation aligns well with the goal of increasing the creative responsibility of a generative system, a central theme in computational creativity research. We understand automation as the challenge of granting a generative system more creative autonomy, by framing the interaction between the user and the system as a co-creative process. The development of the framework is informed by our analysis of the relationship between automation and creative autonomy. An illustrative example shows how the framework can give inspiration and guidance in the process of handing over creative responsibility.

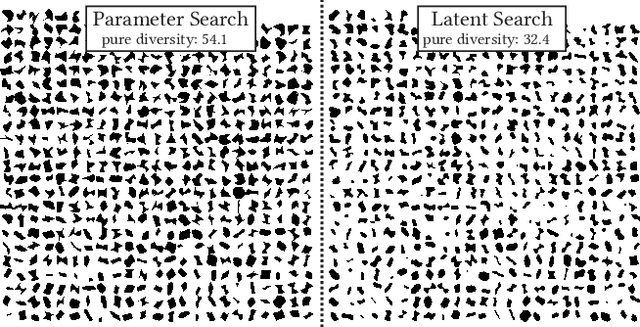

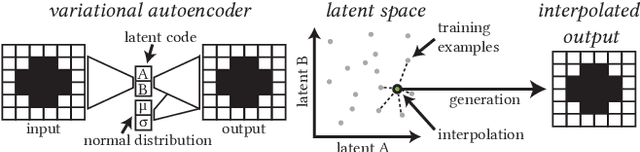

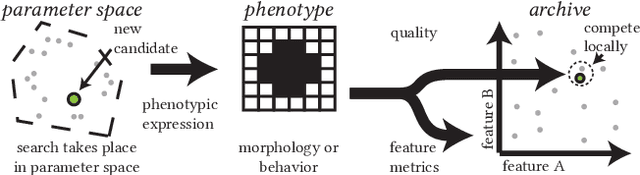

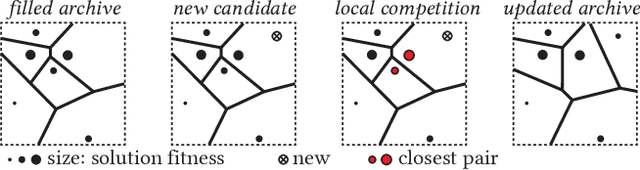

Expressivity of Parameterized and Data-driven Representations in Quality Diversity Search

May 10, 2021

Abstract: We consider multi-solution optimization and generative models for the generation of diverse artifacts and the discovery of novel solutions. In cases where the domain's factors of variation are unknown or too complex to encode manually, generative models can provide a learned latent space to approximate these factors. When used as a search space, however, the range and diversity of possible outputs are limited to the expressivity and generative capabilities of the learned model. We compare the output diversity of a quality diversity evolutionary search performed in two different search spaces: 1) a predefined parameterized space and 2) the latent space of a variational autoencoder model. We find that the search on an explicit parametric encoding creates more diverse artifact sets than searching the latent space. A learned model is better at interpolating between known data points than at extrapolating or expanding towards unseen examples. We recommend using a generative model's latent space primarily to measure similarity between artifacts rather than for search and generation. Whenever a parametric encoding is obtainable, it should be preferred over a learned representation as it produces a higher diversity of solutions.
