Se-Young Yoon

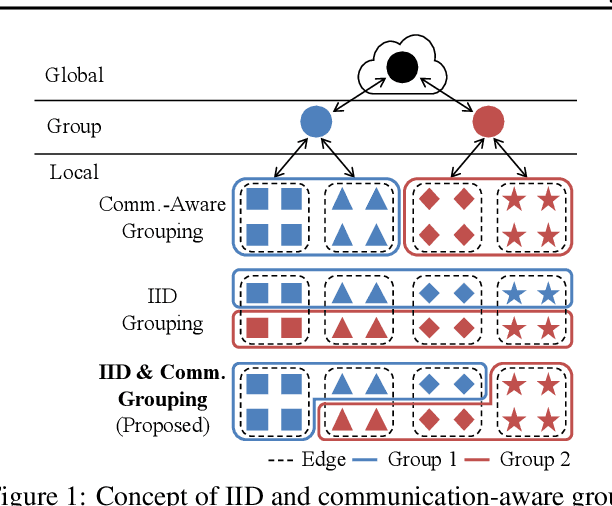

Accurate and Fast Federated Learning via IID and Communication-Aware Grouping

Dec 09, 2020

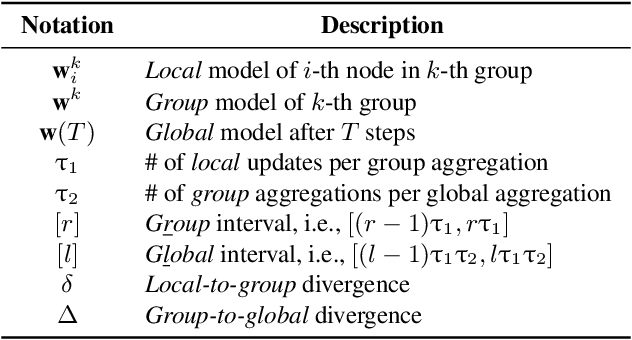

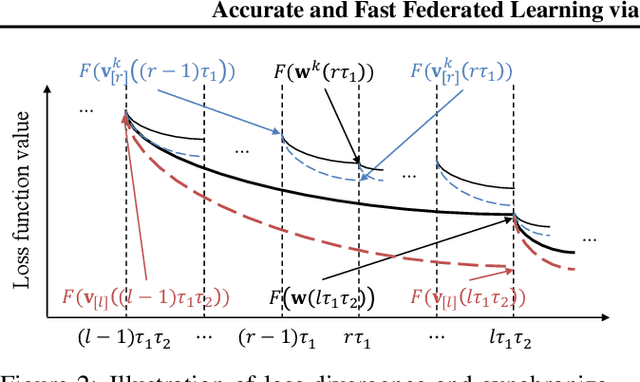

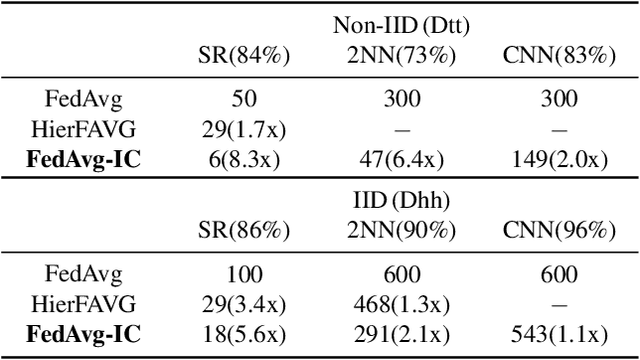

Abstract: Federated learning has emerged as a new paradigm of collaborative machine learning; however, it faces several challenges, such as non-independent and identically distributed (non-IID) data and high communication cost. To address these challenges, we propose a novel framework of IID and communication-aware group federated learning that simultaneously maximizes both accuracy and communication speed by grouping nodes based on their data distributions and physical locations. Furthermore, we provide a formal convergence analysis and an efficient optimization algorithm called FedAvg-IC. Experimental results show that, compared with state-of-the-art algorithms, FedAvg-IC improved test accuracy by up to 22.2% and simultaneously reduced communication time to as little as 12%.
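The grouping idea in the abstract can be illustrated with a small sketch. This is not FedAvg-IC, whose details the abstract does not give; it is a simple greedy heuristic written only to show what "IID and communication-aware" grouping might look like. The function names, the L1 measure of distribution mismatch, the use of 2D Euclidean distance as a stand-in for communication cost, and the `comm_weight` trade-off parameter are all assumptions made for illustration.

```python
import math


def l1_gap(hist, target):
    """L1 distance between a normalized label histogram and a target distribution."""
    total = sum(hist)  # assumed > 0: every node holds at least one sample
    return sum(abs(h / total - t) for h, t in zip(hist, target))


def group_nodes(label_counts, positions, group_size, comm_weight=0.1):
    """Greedily partition nodes into groups of at most `group_size` (hypothetical helper).

    label_counts[i] is node i's per-class sample count; positions[i] is its (x, y)
    location. Each step adds the node that keeps the group's pooled label
    distribution closest to the global one, with a penalty proportional to the
    node's distance from the group's centroid.
    """
    num_classes = len(label_counts[0])
    grand_total = sum(sum(c) for c in label_counts)
    global_dist = [
        sum(c[k] for c in label_counts) / grand_total for k in range(num_classes)
    ]

    unassigned = set(range(len(label_counts)))
    groups = []
    while unassigned:
        seed = min(unassigned)  # deterministic seed choice
        unassigned.remove(seed)
        members = [seed]
        hist = list(label_counts[seed])
        while len(members) < group_size and unassigned:
            cx = sum(positions[m][0] for m in members) / len(members)
            cy = sum(positions[m][1] for m in members) / len(members)

            def cost(i):
                merged = [h + c for h, c in zip(hist, label_counts[i])]
                dist = math.hypot(positions[i][0] - cx, positions[i][1] - cy)
                return l1_gap(merged, global_dist) + comm_weight * dist

            best = min(sorted(unassigned), key=cost)
            unassigned.remove(best)
            members.append(best)
            hist = [h + c for h, c in zip(hist, label_counts[best])]
        groups.append(members)
    return groups


# Toy data: nodes 0 and 1 hold only class 0, nodes 2 and 3 hold only class 1.
label_counts = [[10, 0], [10, 0], [0, 10], [0, 10]]
positions = [(0, 0), (5, 0), (0, 1), (5, 1)]
groups = group_nodes(label_counts, positions, group_size=2)
print(groups)  # [[0, 2], [1, 3]]
```

In this toy setup each group pairs a class-0 node with a nearby class-1 node, so every group's pooled data matches the global label distribution while its members stay physically close. A real system would need actual communication measurements rather than coordinates, and a principled way to choose the trade-off weight.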
