Satyanarayanan R Chakravarthy

Cross-Modal Virtual Sensing for Combustion Instability Monitoring

Oct 06, 2021

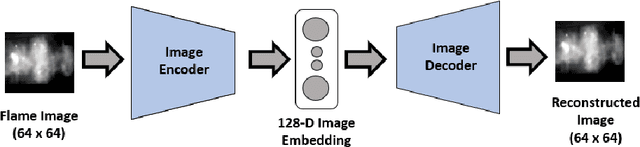

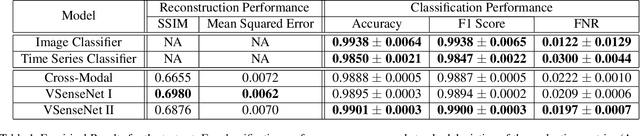

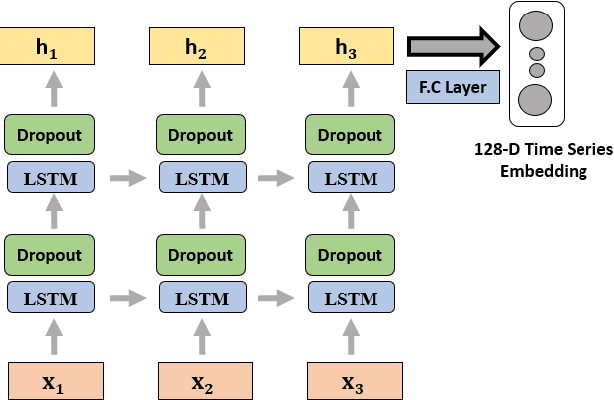

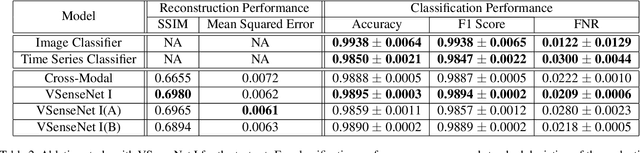

Abstract: In many cyber-physical systems, imaging can be an important but expensive or 'difficult to deploy' sensing modality. One such example is detecting combustion instability using flame images, where deep learning frameworks have demonstrated state-of-the-art performance. The proposed frameworks have also been shown to be trustworthy enough that domain experts can have sufficient confidence to use these models in real systems to prevent unwanted incidents. However, flame imaging is not a common sensing modality in engine combustors today. The current roadblock is therefore on the hardware side, in the acquisition and processing of high-volume flame images. On the other hand, the acoustic pressure time series is a more feasible modality for data collection in real combustors. To utilize acoustic time series as a sensing modality, we propose a novel cross-modal encoder-decoder architecture that can reconstruct cross-modal visual features from acoustic pressure time series in combustion systems. With the "distillation" of cross-modal features, the results demonstrate that the detection accuracy can be enhanced using the virtual visual sensing modality. By providing the benefit of cross-modal reconstruction, our framework can prove to be useful in different domains well beyond the power generation and transportation industries.

3D Convolutional Selective Autoencoder For Instability Detection in Combustion Systems

Jan 06, 2021

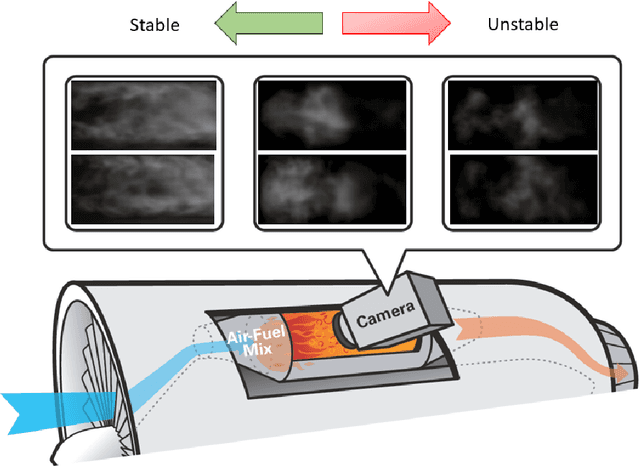

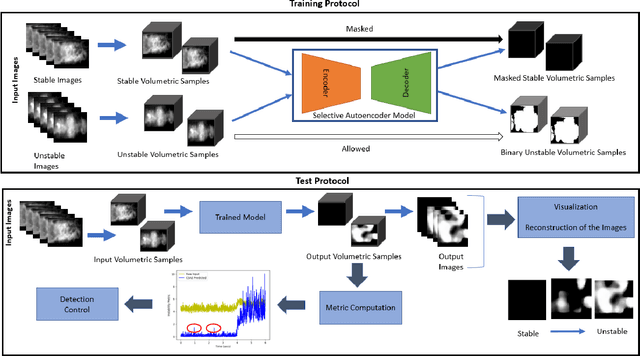

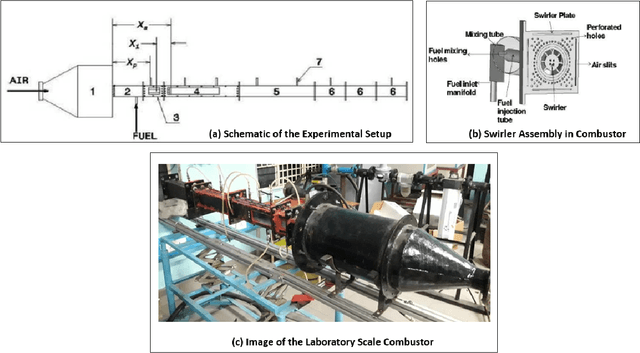

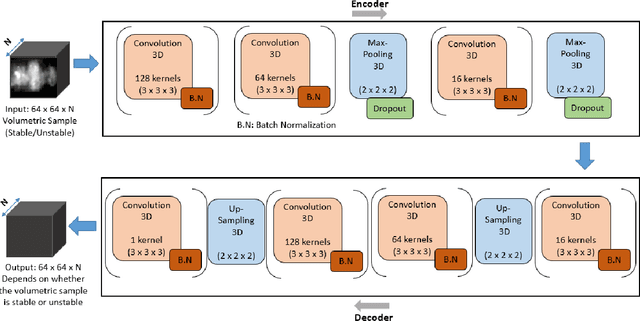

Abstract: While analytical solutions of critical (phase) transitions in physical systems are abundant for simple nonlinear systems, such analysis remains intractable for real-life dynamical systems. A key example of such a physical system is thermoacoustic instability in combustion, where prediction or early detection of the onset of instability is a hard technical challenge that needs to be addressed to build safer and more energy-efficient gas turbine engines powering the aerospace and energy industries. The instabilities arising in combustion chambers of engines are mathematically too complex to model. To address this issue in a data-driven manner instead, we propose a novel deep learning architecture called 3D convolutional selective autoencoder (3D-CSAE) to detect the evolution of self-excited oscillations using spatiotemporal data, i.e., high-speed videos taken from a swirl-stabilized combustor (a laboratory surrogate of a gas turbine engine combustor). 3D-CSAE consists of filters to learn, in a hierarchical fashion, the complex visual and dynamic features related to combustion instability. We train the 3D-CSAE on frames of videos obtained from a limited set of operating conditions. We select the 3D-CSAE hyper-parameters that are effective for characterizing hierarchical and multiscale instability structure evolution by utilizing the dynamic information available in the video. The proposed model clearly shows performance improvement in detecting the precursors of instability. The machine learning-driven results are verified with physics-based off-line measures. Advanced active control mechanisms can directly leverage the proposed online detection capability of 3D-CSAE to mitigate the adverse effects of combustion instabilities on the engine operating under various stringent requirements and conditions.
