Satvik Tripathi

Logic Sketch Prompting (LSP): A Deterministic and Interpretable Prompting Method

Dec 24, 2025

Abstract: Large language models (LLMs) excel at natural language reasoning but remain unreliable on tasks requiring strict rule adherence, determinism, and auditability. Logic Sketch Prompting (LSP) is a lightweight prompting framework that introduces typed variables, deterministic condition evaluators, and a rule-based validator that produces traceable and repeatable outputs. Using two pharmacologic logic compliance tasks, we benchmark LSP against zero-shot prompting, chain-of-thought prompting, and concise prompting across three open-weight models: Gemma 2, Mistral, and Llama 3. Across both tasks and all models, LSP consistently achieves the highest accuracy (0.83 to 0.89) and F1 score (0.83 to 0.89), substantially outperforming zero-shot prompting (0.24 to 0.60), concise prompts (0.16 to 0.30), and chain-of-thought prompting (0.56 to 0.75). McNemar tests show statistically significant gains for LSP across nearly all comparisons (p < 0.01). These results demonstrate that LSP improves determinism, interpretability, and consistency without sacrificing performance, supporting its use in clinical, regulated, and safety-critical decision support systems.
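The abstract names three components (typed variables, deterministic condition evaluators, and a rule-based validator) without giving their concrete form. The following is a minimal Python sketch of how such components could fit together, not the authors' implementation. The `Case` fields, the two rules, and the label names are all hypothetical placeholders invented for illustration and carry no clinical meaning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """Typed variables for one decision (hypothetical fields, not from the paper)."""
    dose_mg: float
    max_dose_mg: float
    has_contraindication: bool


# Deterministic condition evaluators: pure functions of the typed variables.
def contraindicated(case: Case) -> bool:
    return case.has_contraindication


def exceeds_max_dose(case: Case) -> bool:
    return case.dose_mg > case.max_dose_mg


# Hypothetical rule table; evaluation order is fixed, so the trace is repeatable.
RULES = [
    ("R1_contraindication", contraindicated),
    ("R2_max_dose", exceeds_max_dose),
]


def evaluate(case: Case):
    """Return the rule-derived label plus a trace of which rules fired."""
    trace = [(name, condition(case)) for name, condition in RULES]
    fired_any = any(fired for _, fired in trace)
    label = "NON_COMPLIANT" if fired_any else "COMPLIANT"
    return label, trace


def validate(model_label: str, case: Case):
    """Rule-based validator: check a model's label against the rule outcome."""
    expected, trace = evaluate(case)
    return model_label == expected, expected, trace


ok, expected, trace = validate("COMPLIANT", Case(500.0, 400.0, False))
```

In this sketch the validator rejects the model's label because rule `R2_max_dose` fires, and the returned trace records which rule drove the decision, which is the kind of auditability the abstract describes.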

EvoSTS Forecasting: Evolutionary Sparse Time-Series Forecasting

Apr 14, 2022

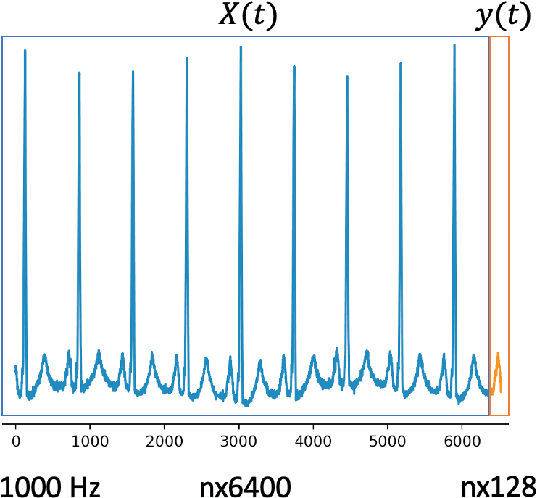

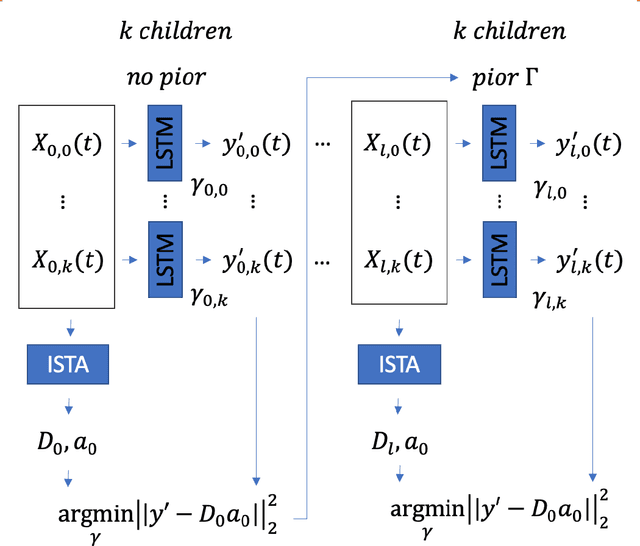

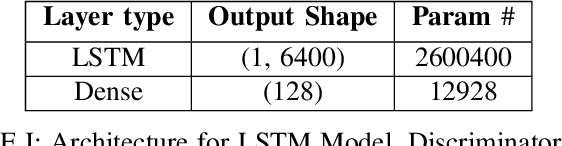

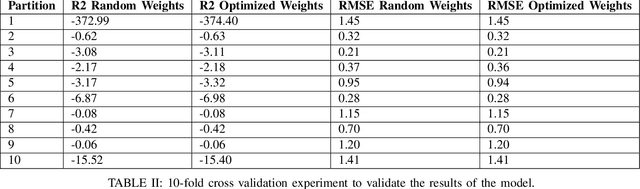

Abstract: In this work, we present our novel evolutionary sparse time-series forecasting algorithm, also known as EvoSTS. The algorithm attempts to evolutionarily prioritize the weights of a Long Short-Term Memory (LSTM) network that best minimize the reconstruction loss of a predicted signal using a learned sparse-coded dictionary. In each generation of our evolutionary algorithm, a set number of children with the same initial weights are spawned. Each child undergoes a training step and adjusts its weights on the same data. Due to stochastic back-propagation, the children end up with a variety of weights with different levels of performance. The weights that best minimize the reconstruction loss with a given signal dictionary are passed to the next generation. The predictions from the best-performing weights of the first and last generation are compared. We found improvements when comparing the weights of these two generations. However, due to several confounding parameters and hyperparameter limitations, some of the weights had negligible improvements. To the best of our knowledge, this is the first attempt to use sparse coding in this way to optimize time series forecasting model weights, such as those of an LSTM network.
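The generational loop described in the abstract (spawn children from the same weights, give each one stochastic training step, keep the best) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the EvoSTS implementation: a two-parameter linear model stands in for the LSTM, plain mean squared error stands in for the sparse-coded reconstruction loss, and the data, learning rate, and population size are made up.

```python
import random


def mse(w, data):
    """Fitness: mean squared error of y = w[0] * x + w[1] (stand-in for reconstruction loss)."""
    return sum((w[0] * x + w[1] - y) ** 2 for x, y in data) / len(data)


def train_step(w, data, rng, lr=0.05, batch_size=4):
    """One stochastic gradient step; the random mini-batch makes children diverge."""
    batch = rng.sample(data, batch_size)
    g0 = sum(2 * (w[0] * x + w[1] - y) * x for x, y in batch) / batch_size
    g1 = sum(2 * (w[0] * x + w[1] - y) for x, y in batch) / batch_size
    return (w[0] - lr * g0, w[1] - lr * g1)


def evolve(data, generations=30, n_children=8, seed=0):
    """Each generation: spawn children from the parent, train each once, keep the best."""
    rng = random.Random(seed)
    parent = (0.0, 0.0)
    history = [mse(parent, data)]
    for _ in range(generations):
        # All children start from the same weights but see different mini-batches.
        children = [train_step(parent, data, rng) for _ in range(n_children)]
        # The child with the lowest loss is passed to the next generation.
        parent = min(children, key=lambda w: mse(w, data))
        history.append(mse(parent, data))
    return parent, history


# Synthetic, noise-free toy data: y = 2x + 1 on x in [-1, 1].
data = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(-10, 11)]
best, history = evolve(data)
```

Comparing `history[0]` with `history[-1]` mirrors the abstract's comparison between the first and last generations. In the real setting, the fitness function would score the predicted signal's reconstruction against the learned dictionary instead of a direct error.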

Early Diagnostic Prediction of Covid-19 using Gradient-Boosting Machine Model

Oct 19, 2021

Abstract: With the huge spike in COVID-19 cases across the globe, the reverse transcriptase-polymerase chain reaction (RT-PCR) test remains a key component for rapid and accurate detection of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). In recent months there has been an acute shortage of medical supplies in developing countries, especially a lack of RT-PCR testing, resulting in delayed patient care and high infection rates. We present a gradient-boosting machine model that predicts the diagnostic result of an RT-PCR test for SARS-CoV-2 by utilizing eight binary features. We used the publicly available nationwide dataset released by the Israeli Ministry of Health.
