Sarunas Verner

Exploring the Truth and Beauty of Theory Landscapes with Machine Learning

Jan 21, 2024

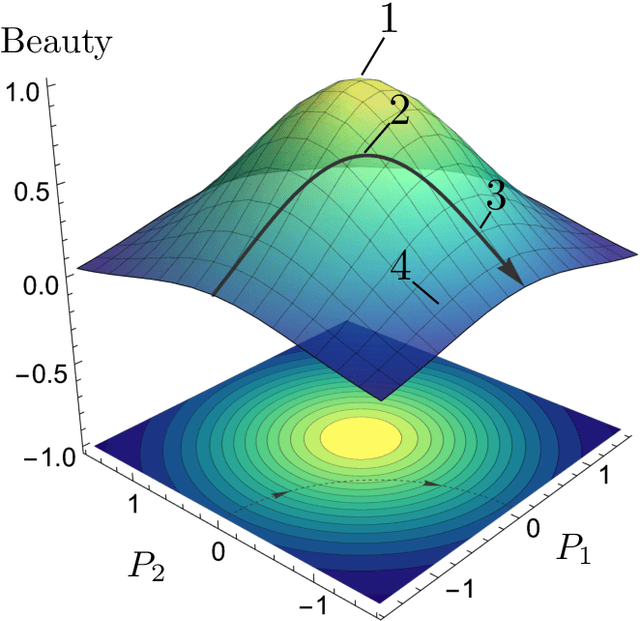

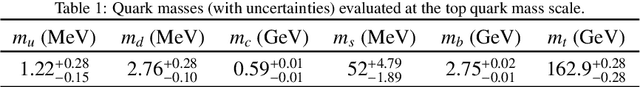

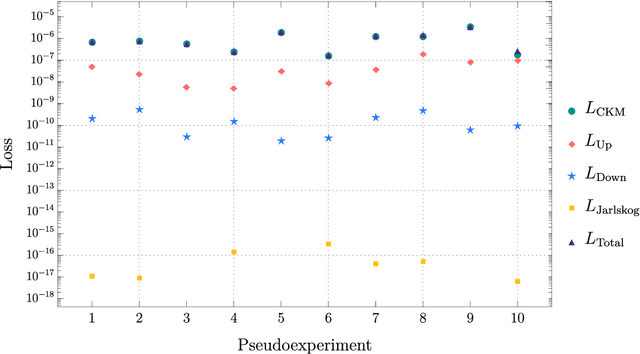

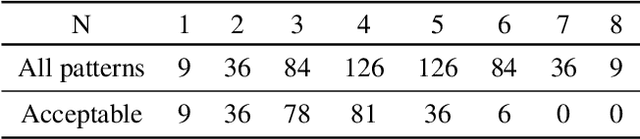

Abstract: Theoretical physicists describe nature by i) building a theory model and ii) determining the model parameters. The latter step involves the dual aspect of both fitting to the existing experimental data and satisfying abstract criteria like beauty, naturalness, etc. We use the Yukawa quark sector as a toy example to demonstrate how both of those tasks can be accomplished with machine learning techniques. We propose loss functions whose minimization results in true models that are also beautiful as measured by three different criteria: uniformity, sparsity, or symmetry.

Seeking Truth and Beauty in Flavor Physics with Machine Learning

Oct 31, 2023

Abstract: The discovery process of building new theoretical physics models involves the dual aspect of both fitting to the existing experimental data and satisfying abstract theorists' criteria like beauty, naturalness, etc. We design loss functions for performing both of those tasks with machine learning techniques. We use the Yukawa quark sector as a toy example to demonstrate that the optimization of these loss functions results in true and beautiful models.

Identifying the Group-Theoretic Structure of Machine-Learned Symmetries

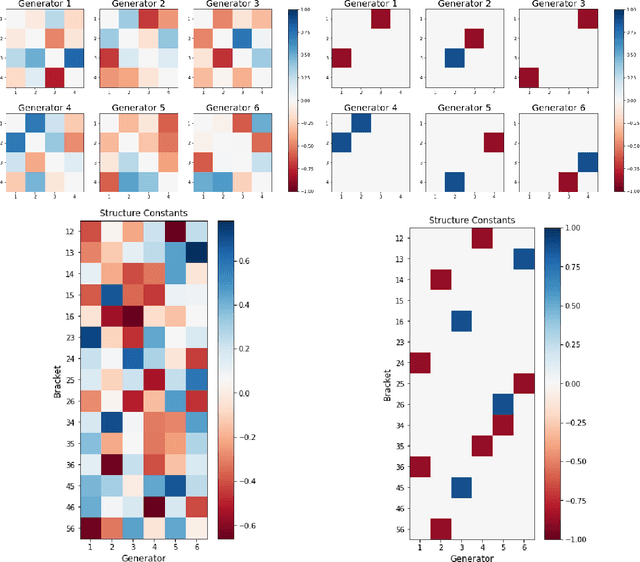

Sep 14, 2023

Abstract: Deep learning has recently been used successfully to derive symmetry transformations that preserve important physics quantities. Being completely agnostic, these techniques postpone the identification of the discovered symmetries to a later stage. In this letter we propose methods for examining and identifying the group-theoretic structure of such machine-learned symmetries. We design loss functions which probe the subalgebra structure either during the deep learning stage of symmetry discovery or in a subsequent post-processing stage. We illustrate the new methods with examples from the U(n) Lie group family, obtaining the respective subalgebra decompositions. As an application to particle physics, we demonstrate the identification of the residual symmetries after the spontaneous breaking of non-Abelian gauge symmetries like SU(3) and SU(5), which are commonly used in model building.

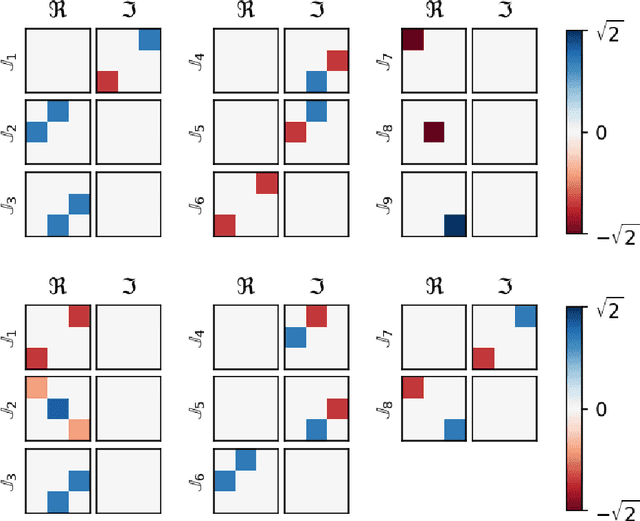

Accelerated Discovery of Machine-Learned Symmetries: Deriving the Exceptional Lie Groups G2, F4 and E6

Jul 10, 2023

Abstract: Recent work has applied supervised deep learning to derive continuous symmetry transformations that preserve the data labels and to obtain the corresponding algebras of symmetry generators. This letter introduces two improved algorithms that significantly speed up the discovery of these symmetry transformations. The new methods are demonstrated by deriving the complete set of generators for the unitary groups $U(n)$ and the exceptional Lie groups $G_2$, $F_4$, and $E_6$. A third post-processing algorithm renders the found generators in sparse form. We benchmark the performance improvement of the new algorithms relative to the standard approach. Given the significant complexity of the exceptional Lie groups, our results demonstrate that this machine-learning method for discovering symmetries is completely general and can be applied to a wide variety of labeled datasets.

Discovering Sparse Representations of Lie Groups with Machine Learning

Feb 10, 2023

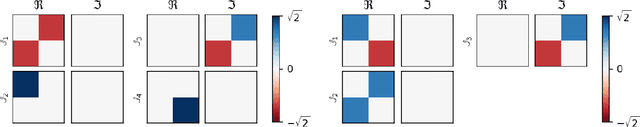

Abstract: Recent work has used deep learning to derive symmetry transformations, which preserve conserved quantities, and to obtain the corresponding algebras of generators. In this letter, we extend this technique to derive sparse representations of arbitrary Lie algebras. We show that our method reproduces the canonical (sparse) representations of the generators of the Lorentz group, as well as the $U(n)$ and $SU(n)$ families of Lie groups. This approach is completely general and can be used to find the infinitesimal generators for any Lie group.

Deep Learning Symmetries and Their Lie Groups, Algebras, and Subalgebras from First Principles

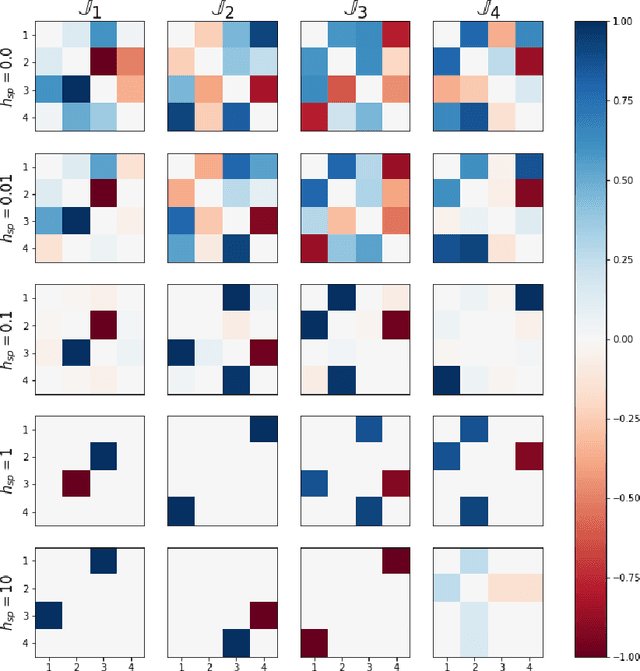

Jan 13, 2023

Abstract: We design a deep-learning algorithm for the discovery and identification of the continuous group of symmetries present in a labeled dataset. We use fully connected neural networks to model the symmetry transformations and the corresponding generators. We construct loss functions that ensure that the applied transformations are symmetries and that the corresponding set of generators forms a closed (sub)algebra. Our procedure is validated with several examples illustrating different types of conserved quantities preserved by symmetry. In the process of deriving the full set of symmetries, we analyze the complete subgroup structure of the rotation groups $SO(2)$, $SO(3)$, and $SO(4)$, and of the Lorentz group $SO(1,3)$. Other examples include squeeze mapping, piecewise discontinuous labels, and $SO(10)$, demonstrating that our method is completely general, with many possible applications in physics and data science. Our study also opens the door for using a machine learning approach in the mathematical study of Lie groups and their properties.
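The two requirements named in this abstract (transformations must preserve the labels, and the generators must close under commutation) can be illustrated with a small NumPy sketch. This is not the paper's implementation: the abstract does not give the loss definitions, so the function names `invariance_loss` and `closure_residual`, the squared-norm label function `oracle`, the step size `eps`, and the least-squares closure test are all assumptions chosen for illustration, and fixed matrices stand in for the neural networks.

```python
import numpy as np

def oracle(x):
    # Hypothetical label function: squared Euclidean norm of each point.
    return np.sum(x**2, axis=-1)

def invariance_loss(G, x, eps=1e-3):
    # Apply the infinitesimal transformation x -> x + eps * G x to every row
    # of x and measure how much the label changes, scaled by eps**2.
    x_new = x + eps * x @ G.T
    return np.mean((oracle(x_new) - oracle(x)) ** 2) / eps**2

def closure_residual(generators):
    # For every pair, fit the commutator [Ga, Gb] as a linear combination of
    # the generators (least squares) and return the worst leftover norm.
    basis = np.stack([g.ravel() for g in generators], axis=1)
    worst = 0.0
    for a in range(len(generators)):
        for b in range(a + 1, len(generators)):
            comm = generators[a] @ generators[b] - generators[b] @ generators[a]
            coeffs, *_ = np.linalg.lstsq(basis, comm.ravel(), rcond=None)
            worst = max(worst, np.linalg.norm(basis @ coeffs - comm.ravel()))
    return worst

rng = np.random.default_rng(0)
points = rng.normal(size=(1000, 2))

rotation = np.array([[0.0, -1.0], [1.0, 0.0]])  # generator of SO(2)
stretch = np.array([[1.0, 0.0], [0.0, -1.0]])   # squeeze-like, not norm-preserving

# Standard antisymmetric generators of SO(3).
so3 = [
    np.array([[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]),
    np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [-1.0, 0.0, 0.0]]),
    np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]),
]

print("invariance loss, rotation:", invariance_loss(rotation, points))
print("invariance loss, stretch: ", invariance_loss(stretch, points))
print("closure residual, full so(3):", closure_residual(so3))
print("closure residual, two generators only:", closure_residual(so3[:2]))
```

In this toy setting the rotation generator gives a loss that vanishes as `eps` shrinks, while the stretch generator does not, and the full set of three generators closes whereas any two of them alone do not. In a learning setup, quantities of this kind would be minimized over trainable generator entries rather than evaluated on fixed matrices.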
