Sara Sabrina Zemljic

Formalising and Learning a Quantum Model of Concepts

Feb 07, 2023

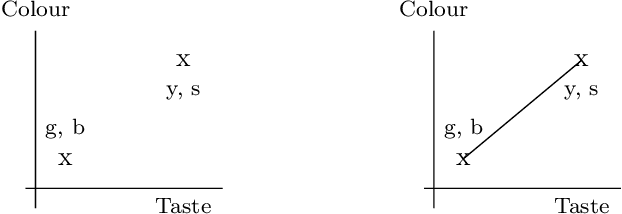

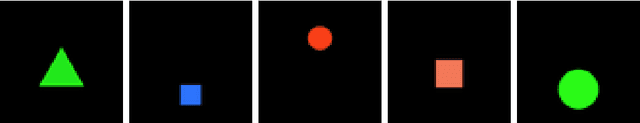

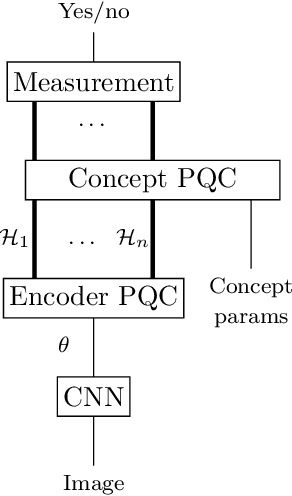

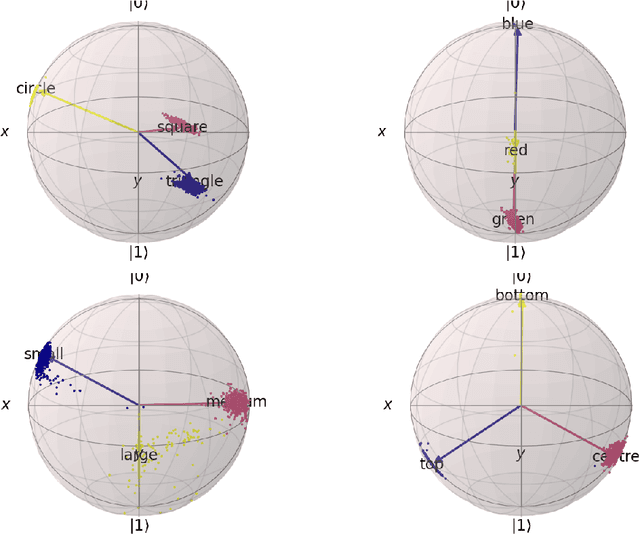

Abstract: In this report we present a new modelling framework for concepts based on quantum theory, and demonstrate how the conceptual representations can be learned automatically from data. A contribution of the work is a thorough category-theoretic formalisation of our framework. We claim that the use of category theory, and in particular the use of string diagrams to describe quantum processes, helps elucidate some of the most important features of our quantum approach to concept modelling. Our approach builds upon Gärdenfors' classical framework of conceptual spaces, in which cognition is modelled geometrically through the use of convex spaces, which in turn factorise in terms of simpler spaces called domains. We show how concepts from the domains of shape, colour, size and position can be learned from images of simple shapes, where individual images are represented as quantum states and concepts as quantum effects. Concepts are learned by a hybrid classical-quantum network trained to perform concept classification, where the classical image processing is carried out by a convolutional neural network and the quantum representations are produced by a parameterised quantum circuit. We also use discarding to produce mixed effects, which can then be used to learn concepts which only apply to a subset of the domains, and show how entanglement (together with discarding) can be used to capture interesting correlations across domains. Finally, we consider the question of whether our quantum models of concepts can be considered conceptual spaces in the Gärdenfors sense.

The Conceptual VAE

Mar 21, 2022

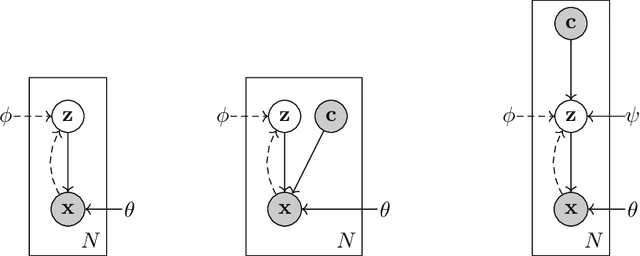

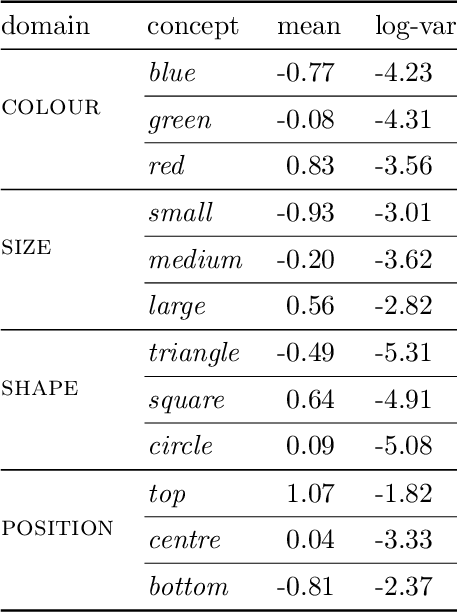

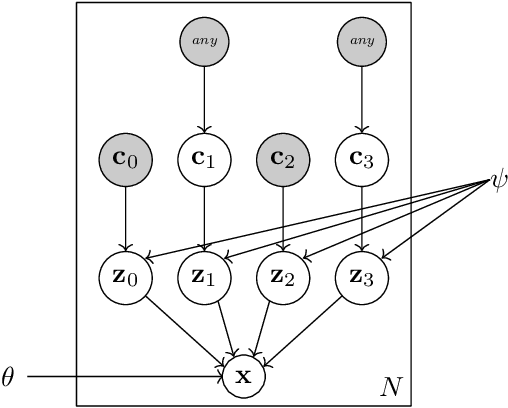

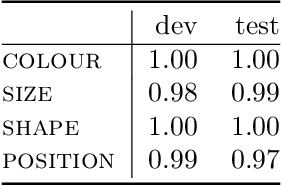

Abstract: In this report we present a new model of concepts, based on the framework of variational autoencoders, which is designed to have attractive properties such as factored conceptual domains, and at the same time be learnable from data. The model is inspired by, and closely related to, the Beta-VAE model of concepts, but is designed to be more closely connected with language, so that the names of concepts form part of the graphical model. We provide evidence that our model -- which we call the Conceptual VAE -- is able to learn interpretable conceptual representations from simple images of coloured shapes together with the corresponding concept labels. We also show how the model can be used as a concept classifier, and how it can be adapted to learn from fewer labels per instance. Finally, we formally relate our model to Gärdenfors' theory of conceptual spaces, showing how the Gaussians we use to represent concepts can be formalised in terms of "fuzzy concepts" in such a space.
