Sanghun Park

A Style-aware Discriminator for Controllable Image Translation

Mar 29, 2022

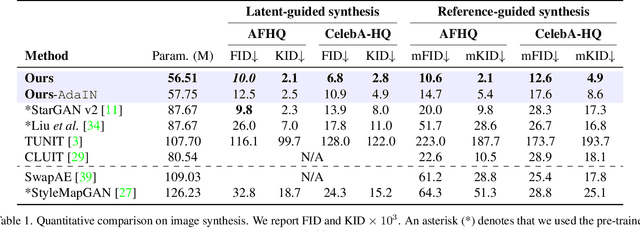

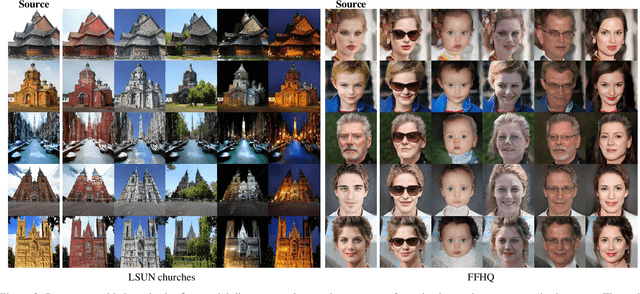

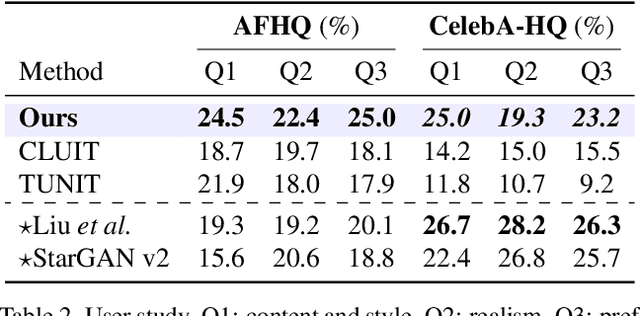

Abstract: Current image-to-image translations do not control the output domain beyond the classes used during training, nor do they interpolate between different domains well, leading to implausible results. This limitation largely arises because labels do not consider the semantic distance. To mitigate such problems, we propose a style-aware discriminator that acts as a critic as well as a style encoder to provide conditions. The style-aware discriminator learns a controllable style space using prototype-based self-supervised learning and simultaneously guides the generator. Experiments on multiple datasets verify that the proposed model outperforms current state-of-the-art image-to-image translation methods. In contrast with current methods, the proposed approach supports various applications, including style interpolation, content transplantation, and local image translation.

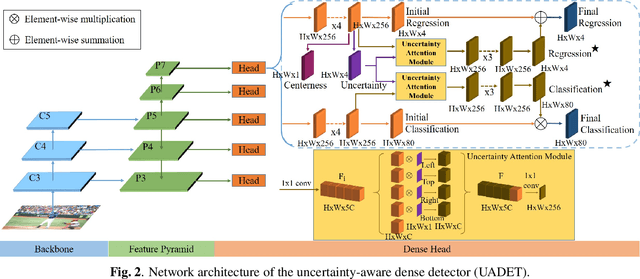

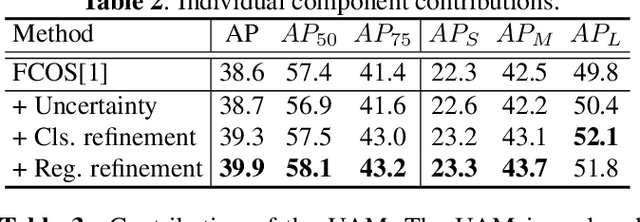

Localization Uncertainty-Based Attention for Object Detection

Aug 25, 2021

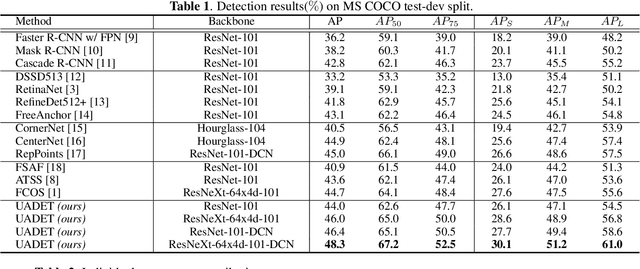

Abstract: Object detection has been applied in a wide variety of real-world scenarios, so detection algorithms must provide confidence in their results to ensure that appropriate decisions can be made based on them. Accordingly, several studies have investigated the probabilistic confidence of bounding box regression. However, such approaches have been restricted to anchor-based detectors, which use box confidence values as additional screening scores during non-maximum suppression (NMS) procedures. In this paper, we propose a more efficient uncertainty-aware dense detector (UADET) that predicts four-directional localization uncertainties via Gaussian modeling. Furthermore, a simple uncertainty attention module (UAM) that exploits box confidence maps is proposed to improve performance through feature refinement. Experiments using the MS COCO benchmark show that our UADET consistently surpasses baseline FCOS, and that our best model, ResNeXt-64x4d-101-DCN, obtains a single-model, single-scale AP of 48.3% on COCO test-dev, thus achieving state-of-the-art performance among various object detectors.
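
The abstract does not give the loss used for the Gaussian modeling, so the following is only a minimal sketch of one common way to attach an uncertainty to each of the four box directions: a per-direction Gaussian negative log-likelihood. The function names, the (left, top, right, bottom) ordering, and the sample numbers are assumptions for illustration, not the paper's formulation.

```python
import math

def gaussian_nll(target, mean, sigma):
    """Negative log-likelihood of `target` under N(mean, sigma^2)."""
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + (target - mean) ** 2 / (2 * sigma ** 2)

def box_nll(targets, means, sigmas):
    """Sum the per-direction NLL over (left, top, right, bottom) distances.

    Hypothetical helper: each direction has its own predicted mean and
    standard deviation, so a poorly localized side can carry a large sigma.
    """
    return sum(gaussian_nll(t, m, s) for t, m, s in zip(targets, means, sigmas))

# Illustrative values only: the bottom distance is predicted badly (13 vs 11).
targets = [10.0, 12.0, 9.0, 11.0]
means = [10.5, 11.0, 9.0, 13.0]

# Same predictions, but the second case admits more uncertainty on the bottom side.
overconfident = box_nll(targets, means, [0.5, 0.5, 0.5, 0.5])
calibrated = box_nll(targets, means, [0.5, 0.5, 0.5, 2.0])
print(overconfident > calibrated)
```

In this sketch, raising the sigma on the badly predicted side lowers the total loss, which is the mechanism that lets a model of this kind report where its box is unreliable.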

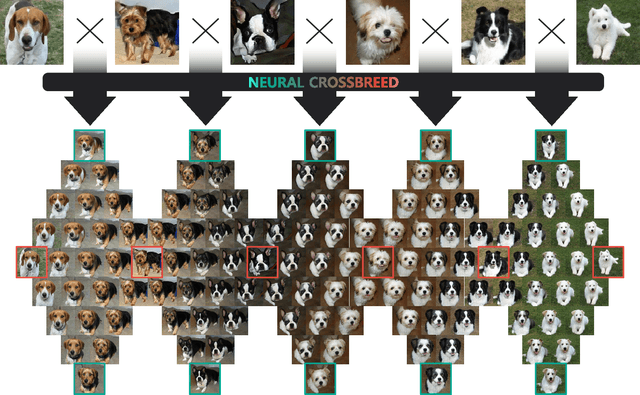

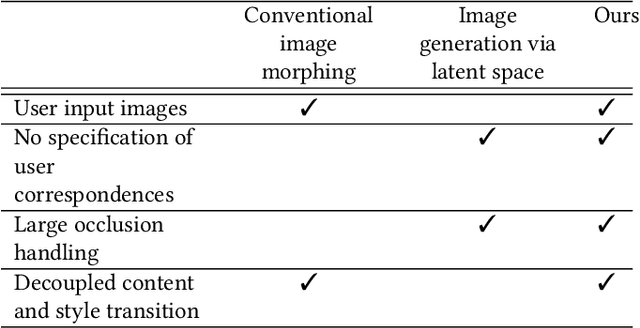

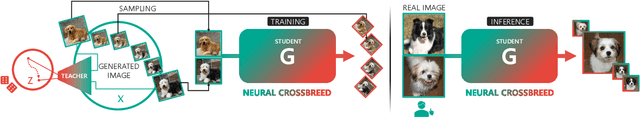

Neural Crossbreed: Neural Based Image Metamorphosis

Sep 02, 2020

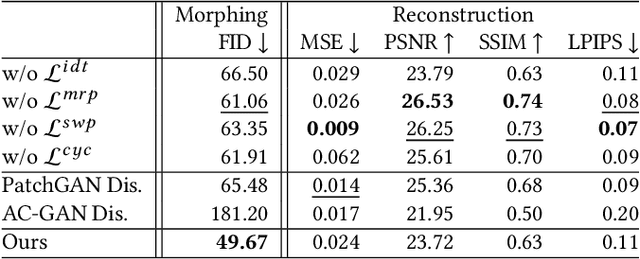

Abstract: We propose Neural Crossbreed, a feed-forward neural network that can learn a semantic change of input images in a latent space to create the morphing effect. Because the network learns a semantic change, a sequence of meaningful intermediate images can be generated without requiring the user to specify explicit correspondences. In addition, learning the semantic change makes it possible to morph between images that contain objects with significantly different poses or camera views. Furthermore, just as in conventional morphing techniques, our morphing network can handle shape and appearance transitions separately by disentangling the content and the style transfer for rich usability. We prepare a training dataset for morphing using a pre-trained BigGAN, which generates an intermediate image by interpolating two latent vectors at an intended morphing value. This is the first attempt to address image morphing using a pre-trained generative model in order to learn semantic transformation. The experiments show that Neural Crossbreed produces high-quality morphed images, overcoming various limitations associated with conventional approaches. In addition, Neural Crossbreed can be further extended for diverse applications such as multi-image morphing, appearance transfer, and video frame interpolation.
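
The dataset step described above, interpolating two latent vectors at a morphing value, might be sketched as follows. The abstract does not say which interpolation scheme is used, so linear interpolation is an assumption here, and `lerp_latents` and the toy three-dimensional vectors are hypothetical. A real pipeline would pass each interpolated vector to the pre-trained generator, which this sketch omits.

```python
def lerp_latents(z_a, z_b, alpha):
    """Linearly interpolate two latent vectors at morphing value `alpha` in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(z_a, z_b)]

# Toy latent vectors standing in for the two endpoint images (illustrative only).
z_a = [0.0, 1.0, -2.0]
z_b = [4.0, 1.0, 2.0]

# Five evenly spaced morphing values from 0 (all z_a) to 1 (all z_b).
sequence = [lerp_latents(z_a, z_b, k / 4) for k in range(5)]
for alpha_index, z in enumerate(sequence):
    print(alpha_index / 4, z)
```

Each intermediate vector would correspond to one training target at its morphing value, which is what allows a feed-forward network to be supervised on the whole transition rather than only on its endpoints.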
