Samuel Anthony

Has the Virtualization of the Face Changed Facial Perception? A Study of the Impact of Augmented Reality on Facial Perception

Mar 01, 2023

Abstract: Augmented reality and other photo editing filters are popular methods used to modify images, especially images of faces, posted online. Considering the important role of human facial perception in social communication, how does exposure to an increasing number of modified faces online affect human facial perception? In this paper we present the results of six surveys designed to measure familiarity with different styles of facial filters, perceived strangeness of faces edited with different facial filters, and ability to discern whether images are filtered or not. Our results indicate that faces filtered with photo editing filters that change the image color tones, modify facial structure, or add facial beautification tend to be perceived similarly to unmodified faces; however, faces filtered with augmented reality filters (i.e., filters that overlay digital objects) are perceived differently from unmodified faces. We also found that responses differed based on different survey question phrasings, indicating that the shift in facial perception due to the prevalence of filtered images is noisy to detect. A better understanding of shifts in facial perception caused by facial filters will help us build online spaces more responsibly and could inform the training of more accurate and equitable facial recognition models, especially those trained with human psychophysical annotations.

Modeling Score Distributions and Continuous Covariates: A Bayesian Approach

Sep 21, 2020

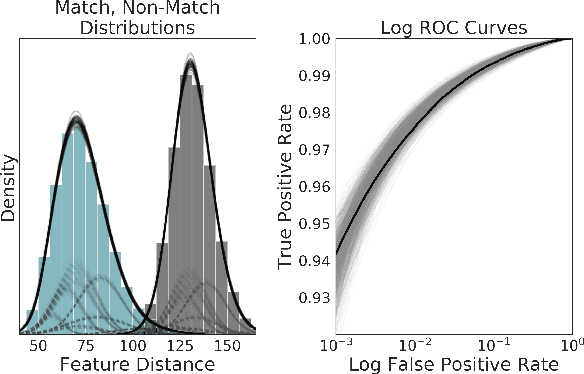

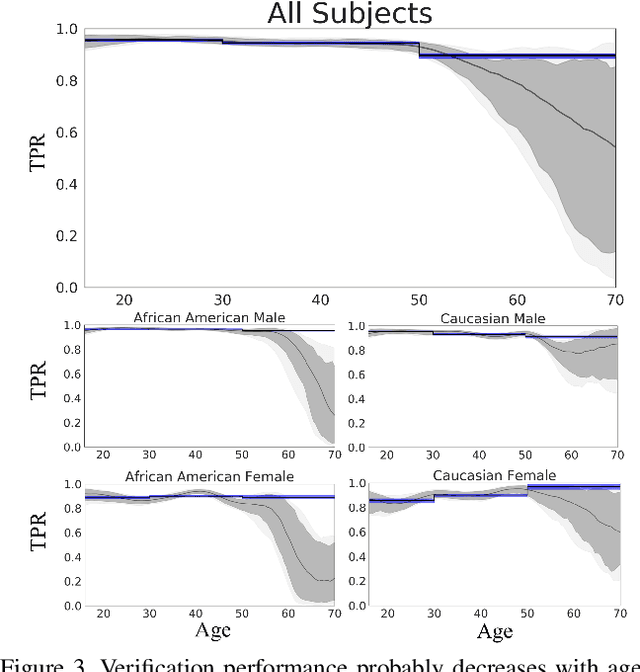

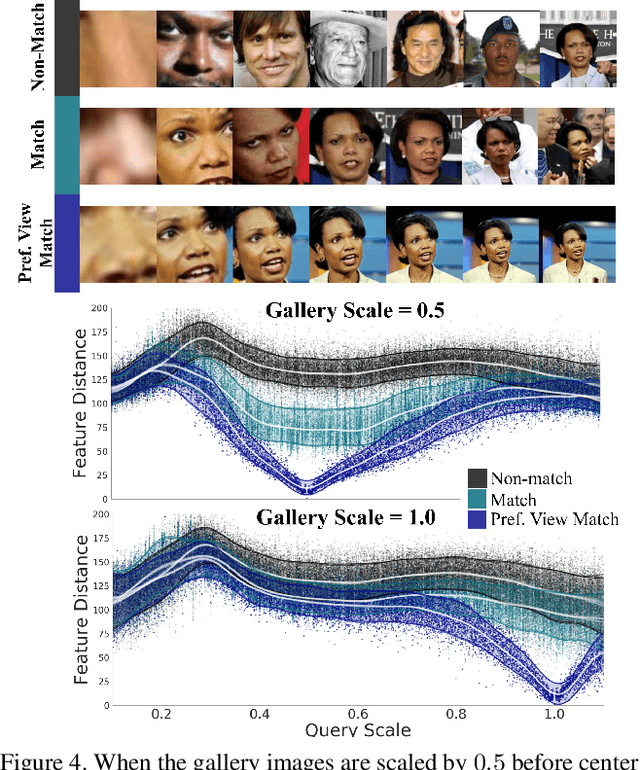

Abstract: Computer vision practitioners must thoroughly understand their model's performance, but conditional evaluation is complex and error-prone. In biometric verification, model performance over continuous covariates (real-number attributes of images that affect performance) is particularly challenging to study. We develop a generative model of the match and non-match score distributions over continuous covariates and perform inference with modern Bayesian methods. We use mixture models to capture arbitrary distributions and local basis functions to capture non-linear, multivariate trends. Three experiments demonstrate the accuracy and effectiveness of our approach. First, we study the relationship between age and face verification performance and find previous methods may overstate performance and confidence. Second, we study preprocessing for CNNs and find a highly non-linear, multivariate surface of model performance. Our method is accurate and data efficient when evaluated against previous synthetic methods. Third, we demonstrate the novel application of our method to pedestrian tracking and calculate variable thresholds and expected performance while controlling for multiple covariates.

Predicting First Impressions with Deep Learning

May 10, 2017

Abstract: Describable visual facial attributes are now commonplace in human biometrics and affective computing, with existing algorithms even reaching a sufficient point of maturity for placement into commercial products. These algorithms model objective facets of facial appearance, such as hair and eye color, expression, and aspects of the geometry of the face. A natural extension, which has not been studied to any great extent thus far, is the ability to model subjective attributes that are assigned to a face based purely on visual judgements. For instance, with just a glance, our first impression of a face may lead us to believe that a person is smart, worthy of our trust, and perhaps even our admiration, regardless of the underlying truth behind such attributes. Psychologists believe that these judgements are based on a variety of factors such as emotional states, personality traits, and other physiognomic cues. But work in this direction leads to an interesting question: how do we create models for problems where there is no ground truth, only measurable behavior? In this paper, we introduce a new convolutional neural network-based regression framework that allows us to train predictive models of crowd behavior for social attribute assignment. Over images from the AFLW face database, these models demonstrate strong correlations with human crowd ratings.
