Sami Sebastian Brandt

Query-Guided Spatial-Temporal-Frequency Interaction for Music Audio-Visual Question Answering

Jan 27, 2026

Abstract:Audio-Visual Question Answering (AVQA) is a challenging multimodal task that requires jointly reasoning over audio, visual, and textual information in a given video to answer natural language questions. Inspired by recent advances in Video QA, many existing AVQA approaches primarily focus on visual information processing, leveraging pre-trained models to extract object-level and motion-level representations. However, in those methods, the audio input is primarily treated as complementary to video analysis, and the textual question information contributes minimally to audio-visual understanding, as it is typically integrated only in the final stages of reasoning. To address these limitations, we propose a novel Query-guided Spatial-Temporal-Frequency (QSTar) interaction method, which effectively incorporates question-guided clues and exploits the distinctive frequency-domain characteristics of audio signals, alongside spatial and temporal perception, to enhance audio-visual understanding. Furthermore, we introduce a Query Context Reasoning (QCR) block inspired by prompting, which guides the model to focus more precisely on semantically relevant audio and visual features. Extensive experiments conducted on several AVQA benchmarks demonstrate the effectiveness of our proposed method, achieving significant performance improvements over existing Audio QA, Visual QA, Video QA, and AVQA approaches. The code and pretrained models will be released after publication.

Tensor-based Subspace Factorization for StyleGAN

Nov 08, 2021

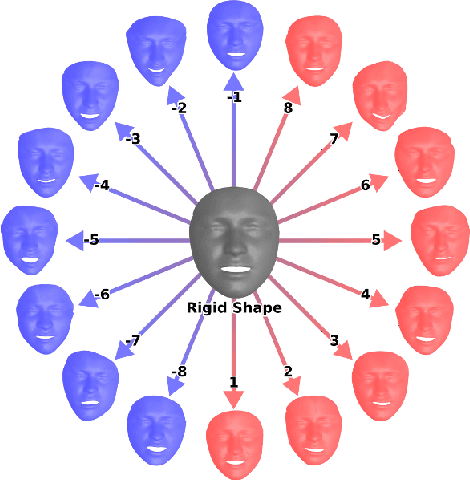

Abstract:In this paper, we propose $\tau$GAN, a tensor-based method for modeling the latent space of generative models. The objective is to identify semantic directions in latent space. To this end, we propose to fit a multilinear tensor model on a structured facial expression database, which is initially embedded into latent space. We validate our approach on StyleGAN trained on FFHQ using BU-3DFE as a structured facial expression database. We show how the parameters of the multilinear tensor model can be approximated by Alternating Least Squares. Further, we introduce a stacked style-separated tensor model, defined as an ensemble of style-specific models, to integrate our approach with the extended latent space of StyleGAN. We show that taking the individual styles of the extended latent space into account leads to higher model flexibility and lower reconstruction error. Finally, we run several experiments comparing our approach to earlier work on both GANs and multilinear models. Concretely, we analyze the expression subspace and find that the expression trajectories meet at an apathetic face, which is consistent with earlier work. We also show that when the pose of a person is changed, the image generated by our approach is closer to the ground truth than the results of two competing approaches.
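
As a rough illustration of the Alternating Least Squares step mentioned in the abstract, the sketch below fits two parameter vectors of a small bilinear model. It is not the authors' implementation: the core tensor `T`, the mode sizes, and the function name `als_bilinear` are hypothetical, and the model form (a latent code written as a core tensor contracted with one "person" vector and one "expression" vector) is an assumption made for the example.

```python
import numpy as np


def als_bilinear(T, w, n_iter=50, seed=0):
    """Fit w ~ sum_jk T[:, j, k] * a[j] * b[k] by alternating least squares.

    T is an assumed, known core tensor of shape (d, p, e); w has shape (d,).
    Returns the two parameter vectors and the residual norm after each sweep.
    """
    rng = np.random.default_rng(seed)
    d, p, e = T.shape
    b = rng.standard_normal(e)  # random start for the second factor
    errors = []
    for _ in range(n_iter):
        # With b fixed, the model is linear in a: design matrix of shape (d, p).
        A = np.einsum("djk,k->dj", T, b)
        a = np.linalg.lstsq(A, w, rcond=None)[0]
        # With a fixed, the model is linear in b: design matrix of shape (d, e).
        B = np.einsum("djk,j->dk", T, a)
        b = np.linalg.lstsq(B, w, rcond=None)[0]
        errors.append(float(np.linalg.norm(B @ b - w)))
    return a, b, errors


# Synthetic data: a random core tensor and a target built from known factors.
rng = np.random.default_rng(1)
T = rng.standard_normal((60, 4, 3))
a_true = rng.standard_normal(4)
b_true = rng.standard_normal(3)
w = np.einsum("djk,j,k->d", T, a_true, b_true)

a_hat, b_hat, errors = als_bilinear(T, w)
print("residual after first and last sweep:", errors[0], errors[-1])
```

Each half-step solves an ordinary least-squares problem exactly, so the residual cannot increase from one sweep to the next. The factors are only determined up to a shared scale, since scaling `a` by a constant and `b` by its inverse leaves the product unchanged.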

Uncalibrated Non-Rigid Factorisation by Independent Subspace Analysis

Nov 22, 2018

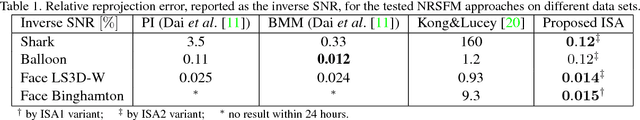

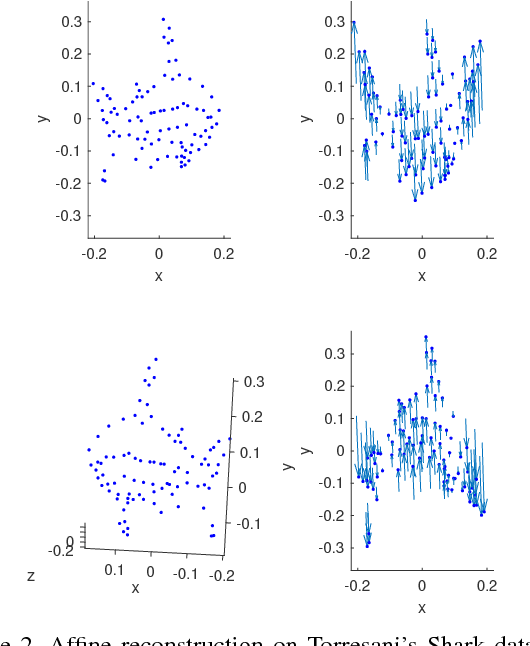

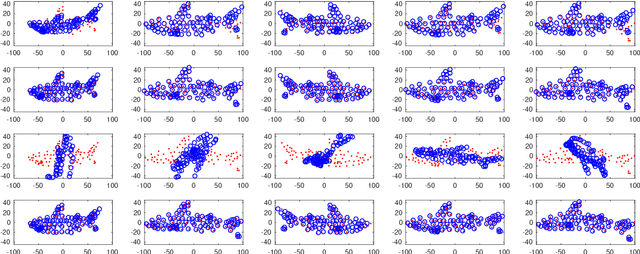

Abstract:We propose a general, prior-free approach for the uncalibrated non-rigid structure-from-motion problem for modelling and analysis of non-rigid objects such as human faces. The word general refers to an approach that recovers the non-rigid affine structure and motion from 2D point correspondences by assuming that (1) the non-rigid shapes are generated by a linear combination of rigid 3D basis shapes, (2) the non-rigid shapes are affine in nature, i.e., they can be modelled as deviations from the mean, rigid shape, and (3) the basis shapes are statistically independent. In contrast to the majority of existing works, no prior information is assumed for the structure and motion apart from the assumption that the underlying basis shapes are statistically independent. The independent 3D shape bases are recovered by independent subspace analysis (ISA). Likewise, in contrast to most previous approaches, no calibration information is assumed for affine cameras; the reconstruction is solved up to a global affine ambiguity, which makes our approach simple but efficient. In the experiments, we evaluated the method on several standard data sets, including a real face expression data set of 7200 faces with 2D point correspondences and unknown 3D structure and motion, for which we obtained promising results.
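
For readers unfamiliar with this setting, assumptions (1) and (2) are commonly written as follows. The notation here is assumed for illustration and is not taken from the paper: $S_f$ is the 3D shape in frame $f$, $\bar{S}$ the mean rigid shape, $B_k$ the basis shapes, $\alpha_{fk}$ the per-frame coefficients, $M_f$ a $2 \times 3$ affine camera, $t_f$ a 2D translation, and $W_f$ the observed 2D points.

$$
S_f = \bar{S} + \sum_{k=1}^{K} \alpha_{fk} B_k, \qquad W_f = M_f S_f + t_f \mathbf{1}^\top
$$

Because the cameras are uncalibrated, any invertible $3 \times 3$ matrix $A$ can be absorbed as $M_f A^{-1}$ and $A S_f$ without changing $W_f$, which is the global affine ambiguity the abstract refers to.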

Integral Geometric Dual Distributions of Multilinear Models

Nov 22, 2018

Abstract:We propose an integral geometric approach for computing dual distributions for the parameter distributions of multilinear models. The dual distributions can be computed from, for example, the parameter distributions of conics, multiple view tensors, homographies, or entities as simple as points, lines, and planes. The dual distributions have analytical forms that follow from the asymptotic normality property of the maximum likelihood estimator and an application of integral transforms, fundamentally the generalised Radon transforms, on the probability density of the parameters. The approach allows us, for instance, to look at the uncertainty distributions in feature distributions, which are essentially tied to the distribution of training data, and helps us to derive conditional distributions for interesting variables and characterise confidence intervals of the estimates.