Sambhav R. Jain

Trained Uniform Quantization for Accurate and Efficient Neural Network Inference on Fixed-Point Hardware

Mar 19, 2019

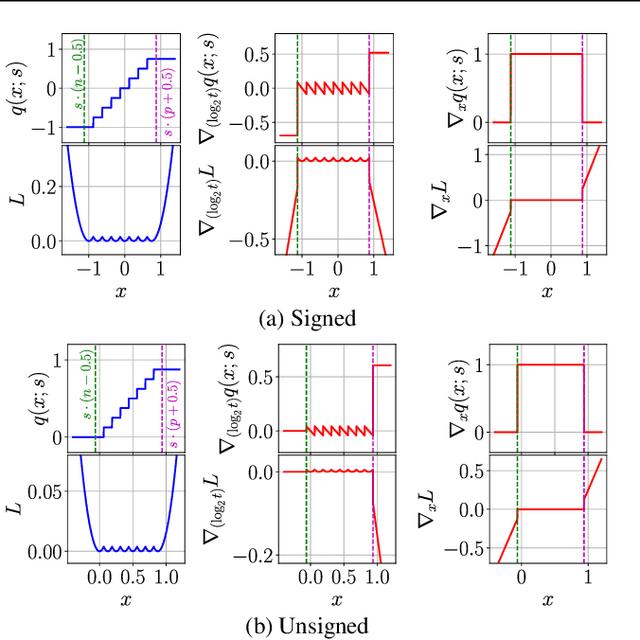

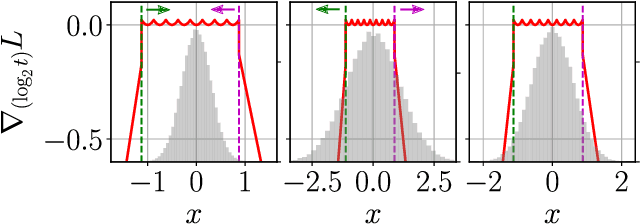

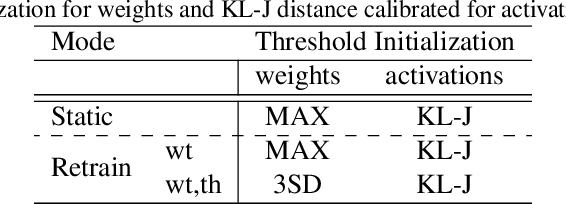

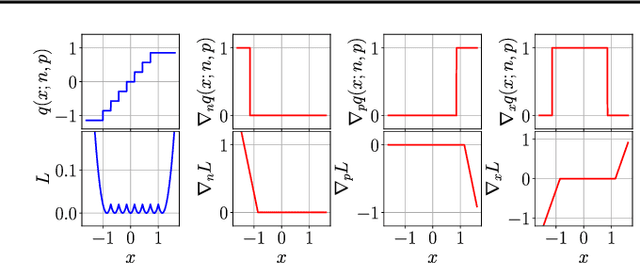

Abstract: We propose a method of training quantization clipping thresholds for uniform symmetric quantizers using standard backpropagation and gradient descent. Our quantizers are constrained to use power-of-2 scale-factors and per-tensor scaling for weights and activations. These constraints make our methods better suited for hardware implementations. Training with these difficult constraints is enabled by a combination of three techniques: using accurate threshold gradients to achieve range-precision trade-off, training thresholds in log-domain, and training with an adaptive gradient optimizer. We refer to this collection of techniques as Adaptive-Gradient Log-domain Threshold Training (ALT). We present analytical support for the general robustness of our methods and empirically validate them on various CNNs for ImageNet classification. We are able to achieve floating-point or near-floating-point accuracy on traditionally difficult networks such as MobileNets in less than 5 epochs of quantized (8-bit) retraining. Finally, we present Graffitist, a framework that enables immediate quantization of TensorFlow graphs using our methods. Code available at https://github.com/Xilinx/graffitist.
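To make the constraints in this abstract concrete, here is a minimal sketch of the forward pass of a per-tensor, symmetric uniform quantizer whose scale factor is a power of 2 and whose clipping threshold is stored in the log domain. It is not taken from Graffitist: the function name `quantize_pow2`, the choice to round the log-threshold up with `ceil`, and the round-half-to-even rounding mode are all assumptions made for illustration, and the threshold-gradient part of the method is not shown.

```python
import math


def quantize_pow2(values, log2_threshold, bits=8):
    """Fake-quantize floats with a symmetric, power-of-2-scaled uniform quantizer.

    Hypothetical helper for illustration; not the Graffitist API.
    """
    # Assumption: round the log-domain threshold up so the clipping
    # threshold itself is a power of 2.
    exponent = math.ceil(log2_threshold)
    # One scale factor for the whole tensor: threshold / 2^(bits - 1).
    scale = 2.0 ** (exponent - (bits - 1))
    # Signed integer range, e.g. [-128, 127] for 8 bits.
    q_min = -(2 ** (bits - 1))
    q_max = 2 ** (bits - 1) - 1
    out = []
    for v in values:
        q = round(v / scale)           # Python's round: ties go to even
        q = max(q_min, min(q_max, q))  # saturate values outside the range
        out.append(q * scale)          # map back to the real-valued grid
    return out


# With log2_threshold = 0 the clipping range is [-1, 1 - 2^-7].
print(quantize_pow2([0.3, 1.7, -5.0], log2_threshold=0.0))
```

Because the scale is a power of 2, rescaling on fixed-point hardware reduces to a bit shift, which is one plausible reading of why the abstract calls these constraints hardware-friendly. Raising the threshold widens the range but coarsens the grid, which is the range-precision trade-off the trained threshold has to balance.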

Training a Fully Convolutional Neural Network to Route Integrated Circuits

Sep 11, 2017

Abstract: We present a deep, fully convolutional neural network that learns to route a circuit layout net with appropriate choice of metal tracks and wire class combinations. Inputs to the network are the encoded layouts containing spatial location of pins to be routed. After 15 fully convolutional stages followed by a score comparator, the network outputs 8 layout layers (corresponding to 4 route layers, 3 via layers and an identity-mapped pin layer) which are then decoded to obtain the routed layouts. We formulate this as a binary segmentation problem on a per-pixel per-layer basis, where the network is trained to correctly classify pixels in each layout layer to be 'on' or 'off'. To demonstrate learnability of layout design rules, we train the network on a dataset of 50,000 train and 10,000 validation samples that we generate based on certain pre-defined layout constraints. Precision, recall and $F_1$ score metrics are used to track the training progress. Our network achieves $F_1\approx97\%$ on the train set and $F_1\approx92\%$ on the validation set. We use PyTorch for implementing our model. Code is made publicly available at https://github.com/sjain-stanford/deep-route.
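The precision, recall, and $F_1$ metrics mentioned in this abstract can be sketched for a flattened set of binary pixel labels as follows. This is an illustrative plain-Python version, not the code from the deep-route repository: the function name `precision_recall_f1`, the flat-list input format, and the convention of returning 0.0 when a denominator is zero are assumptions.

```python
def precision_recall_f1(pred, target):
    """Compute precision, recall and F1 for binary ('on' = 1, 'off' = 0) pixels.

    Hypothetical helper for illustration; inputs are equal-length flat lists.
    """
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)  # true positives
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)  # false positives
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)  # false negatives
    # Assumption: report 0.0 rather than raising when a denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Six pixels of one layout layer: 2 true positives, 1 false positive, 1 false negative.
pred = [1, 1, 0, 0, 1, 0]
target = [1, 0, 0, 1, 1, 0]
print(precision_recall_f1(pred, target))
```

Routed layouts are mostly 'off' pixels, so plain accuracy would look high even for a network that routes nothing; $F_1$ ignores true negatives, which is a likely reason it is the metric tracked here.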
