Salvador Canas-Moreno

LIPSFUS: A neuromorphic dataset for audio-visual sensory fusion of lip reading

Mar 28, 2023

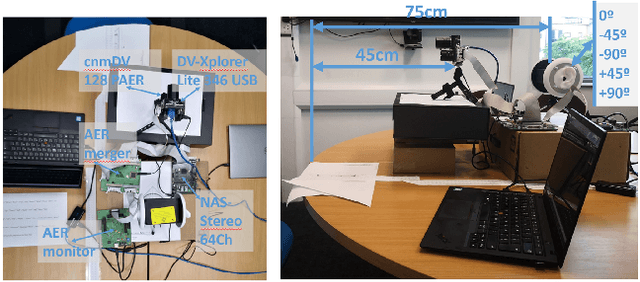

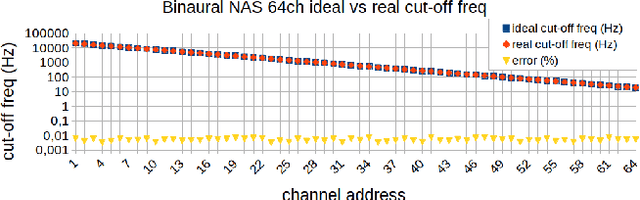

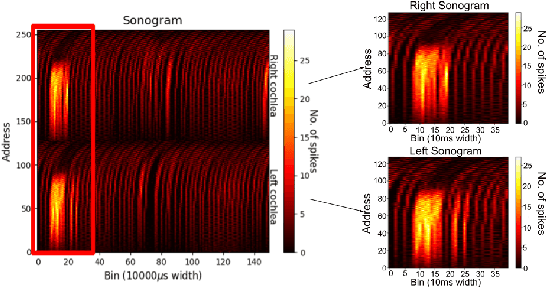

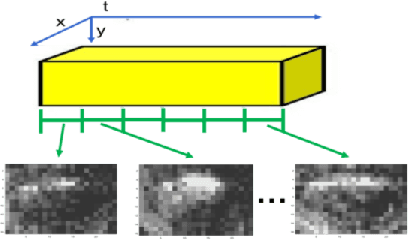

Abstract: This paper presents a sensory fusion neuromorphic dataset collected with precise temporal synchronization using a set of Address-Event-Representation (AER) sensors and tools. The target application is lip reading of several keywords for different machine learning applications, such as digits, robotic commands, and auxiliary phonetically rich short words. The dataset is extended with a spiking version of an audio-visual lip reading dataset collected with frame-based cameras. LIPSFUS is publicly available and has been validated with a deep learning architecture for audio and visual classification. It is intended for sensory fusion architectures based on both artificial and spiking neural network algorithms.
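The abstract mentions producing a spiking version of a dataset recorded with frame-based cameras. The abstract does not say how that conversion was done, so the sketch below shows one common generic approach, assumed here purely for illustration: emulating an event camera by emitting an ON or OFF event whenever a pixel's log intensity changes by a fixed contrast threshold. The function name `frames_to_events` and the `threshold` and `eps` parameters are hypothetical, and this should not be read as the LIPSFUS pipeline.

```python
import numpy as np

def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Hypothetical frame-to-event converter (generic log-intensity model).

    Emits (t, x, y, polarity) tuples: +1 (ON) when a pixel's log intensity
    rises by `threshold` since its last event, -1 (OFF) when it falls.
    """
    frames = np.asarray(frames, dtype=np.float64)
    ref = np.log(frames[0] + eps)  # per-pixel reference log intensity
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        diff = np.log(frame + eps) - ref
        # Number of whole threshold crossings at each pixel.
        n = np.floor(np.abs(diff) / threshold).astype(int)
        ys, xs = np.nonzero(n)
        for y, x in zip(ys, xs):
            pol = 1 if diff[y, x] > 0 else -1
            events.extend([(t, int(x), int(y), pol)] * int(n[y, x]))
            # Move the reference by the amount already signalled.
            ref[y, x] += pol * n[y, x] * threshold
    return events
```

A static scene produces no events, while a pixel that brightens produces only ON events. This sparsity is what makes AER-style data attractive for spiking networks.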

An MPSoC-based on-line Edge Infrastructure for Embedded Neuromorphic Robotic Controllers

Mar 31, 2022

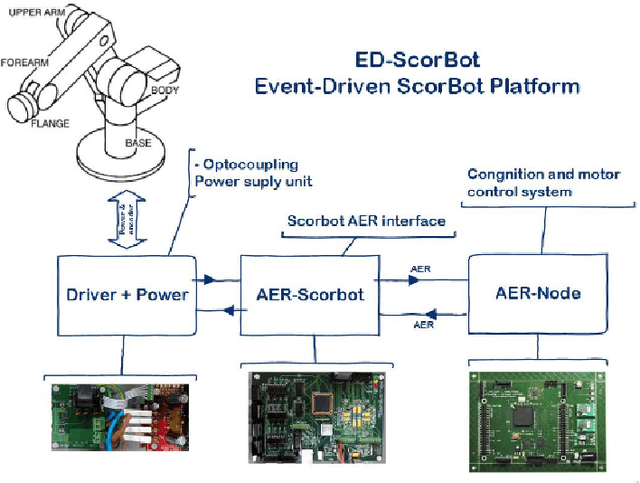

Abstract: In this work, an all-in-one neuromorphic controller system with reduced latency and power consumption for a robotic arm is presented. Biological muscle movement consists of stretching and shrinking fibres via spike-commanded signals that come from motor neurons, which in turn are connected to a central pattern generator neural structure. In addition, biological systems are able to respond to diverse stimuli quickly and efficiently, and this is based on the way information is coded within neural processes. As opposed to human-designed encoding systems, neural ones use neurons and spikes to process information and make weighted decisions based on a continuous learning process. The Event-Driven Scorbot platform (ED-Scorbot) consists of a 6 Degrees of Freedom (DoF) robotic arm whose controller implements a Spiking Proportional-Integral-Derivative (PID) algorithm, thereby mimicking the biological systems described above. In this paper, we present an infrastructure upgrade to the ED-Scorbot platform that replaces the controller hardware, which consisted of two Spartan Field Programmable Gate Arrays (FPGAs) and a barebone computer, with an edge device, the Xilinx Zynq-7000 SoC (System on Chip). This upgrade reduces response time, power consumption, and overall complexity.
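The ED-Scorbot controller implements PID control in the spike domain. The abstract gives no details of that implementation, so the sketch below shows only the classical discrete-time PID law that the spiking version mimics, for reference. It is a conventional sketch, not the spiking controller. The `PID` class, the gains, the time step `dt`, and the idealized joint model in the usage note are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PID:
    """Conventional (non-spiking) discrete PID controller, for reference only."""
    kp: float
    ki: float
    kd: float
    dt: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt                   # I term: accumulated error
        derivative = (error - self.prev_error) / self.dt   # D term: error slope
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

In a hypothetical closed loop, the joint can be idealized as an integrator (`position += u * dt`), with `step` called once per control period. A spiking PID encodes the error and these three terms as spike rates rather than numeric values.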