Salar Cheema

ACCESS: Prompt Engineering for Automated Web Accessibility Violation Corrections

Jan 28, 2024

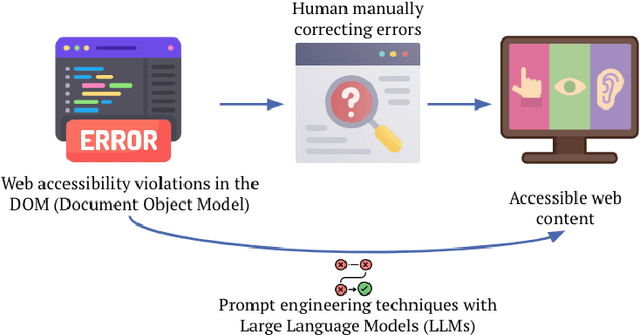

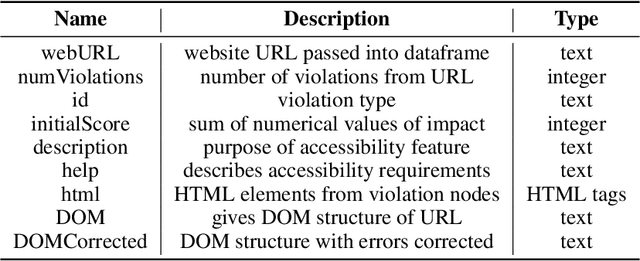

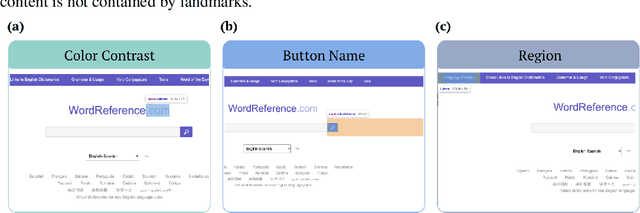

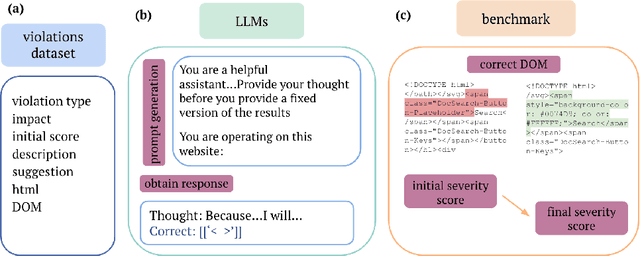

Abstract: With the increasing need for inclusive and user-friendly technology, web accessibility is crucial to ensuring equal access to online content for individuals with disabilities, including visual, auditory, cognitive, or motor impairments. Despite the existence of accessibility guidelines and standards such as the Web Content Accessibility Guidelines (WCAG), published by the W3C's Web Accessibility Initiative (WAI), over 90% of websites still fail to meet the necessary accessibility requirements. Web users with disabilities need a tool that automatically fixes web page accessibility errors. While research has demonstrated methods to find and target accessibility errors, no research has focused on effectively correcting such violations. This paper presents a novel approach to correcting accessibility violations on the web by modifying the document object model (DOM) in real time with foundation models. Leveraging accessibility error information, large language models (LLMs), and prompt engineering techniques, we achieved a reduction of more than 51% in accessibility violation errors after corrections on our novel benchmark, ACCESS. Our work demonstrates a valuable approach toward inclusive web content and provides directions for future research on advanced methods to automate web accessibility.
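The abstract does not give the prompt format or the scoring procedure, so the following is only a minimal sketch of how accessibility error information might be turned into a correction prompt, and of how a reduction in violation count could be computed. The `Violation` fields, the prompt wording, and both function names are assumptions made for illustration, not the paper's implementation.

```python
from dataclasses import dataclass


@dataclass
class Violation:
    """Hypothetical record of one accessibility error reported by a checker."""
    rule_id: str       # illustrative rule name, e.g. "image-alt"
    description: str   # human-readable explanation of the failure
    html: str          # the offending DOM fragment


def build_prompt(violation: Violation) -> str:
    """Assemble a correction prompt from the violation details (assumed wording)."""
    return (
        "You are a web accessibility expert.\n"
        f"Rule violated: {violation.rule_id}\n"
        f"Description: {violation.description}\n"
        f"Offending HTML:\n{violation.html}\n"
        "Return only the corrected HTML."
    )


def percent_reduction(before: int, after: int) -> float:
    """Percentage drop in violation count after corrections are applied."""
    if before <= 0:
        raise ValueError("before must be a positive violation count")
    return 100.0 * (before - after) / before
```

In such a setup, the prompt text would be sent to an LLM and the returned fragment would replace the offending node in the DOM; the page would then be re-checked to obtain the `after` count.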

Two Timin': Repairing Smart Contracts With A Two-Layered Approach

Sep 14, 2023

Abstract: Due to the modern relevance of blockchain technology, smart contracts present both substantial risks and benefits. Vulnerabilities within them can trigger a cascade of consequences, resulting in significant losses. Many current papers focus primarily on classifying smart contracts for malicious intent, often relying on limited contract characteristics such as bytecode or opcode. This paper proposes a novel, two-layered framework: 1) classifying and 2) directly repairing malicious contracts. Slither's vulnerability report is combined with source code and passed through a pre-trained RandomForestClassifier (RFC) and Large Language Models (LLMs), classifying and repairing each suggested vulnerability. Experiments demonstrate the effectiveness of fine-tuned and prompt-engineered LLMs. The smart contract repair models, built from pre-trained GPT-3.5-Turbo and fine-tuned Llama-2-7B models, reduced the overall vulnerability count by 97.5% and 96.7%, respectively. A manual inspection of repaired contracts shows that all retain functionality, indicating that the proposed method is appropriate for automatic batch classification and repair of vulnerabilities in smart contracts.
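The two-layered control flow described above can be sketched roughly as follows. This is an illustration under stated assumptions only: `two_layer_repair`, `is_vulnerable`, and `repair` are hypothetical names, and the toy stand-ins below replace the random forest classifier and LLM repair models that the abstract mentions.

```python
from typing import Callable


def two_layer_repair(
    source: str,
    report: str,
    is_vulnerable: Callable[[str, str], bool],
    repair: Callable[[str, str], str],
) -> str:
    """Layer 1 classifies the contract; layer 2 repairs it only if flagged."""
    if not is_vulnerable(source, report):
        return source  # contracts that are not flagged pass through unchanged
    return repair(source, report)


# Toy stand-ins (assumptions): a real pipeline would call a trained
# classifier and an LLM here instead of these string checks.
def toy_classifier(source: str, report: str) -> bool:
    return "reentrancy" in report.lower()


def toy_repair(source: str, report: str) -> str:
    return source + "\n// TODO: repaired by model"


contract = "contract Wallet { }"
flagged = two_layer_repair(contract, "Reentrancy in withdraw()", toy_classifier, toy_repair)
clean = two_layer_repair(contract, "No issues found", toy_classifier, toy_repair)
```

Passing the classifier and the repair step in as callables keeps the sketch independent of any particular model or analysis tool.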
