Saehanseul Yi

Demand Layering for Real-Time DNN Inference with Minimized Memory Usage

Oct 08, 2022

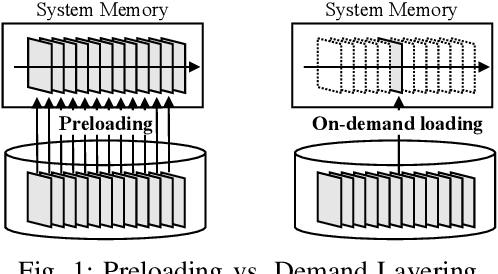

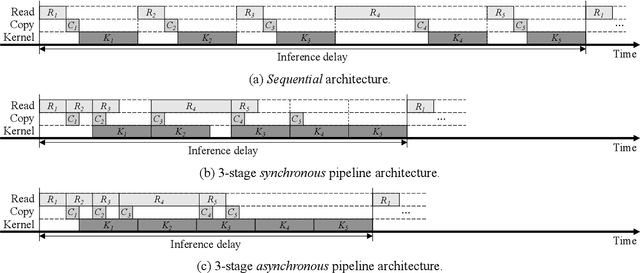

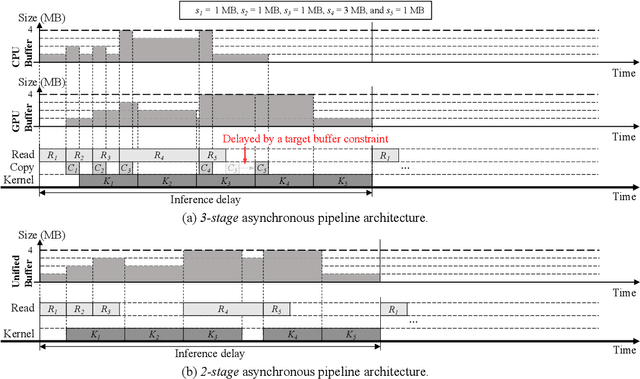

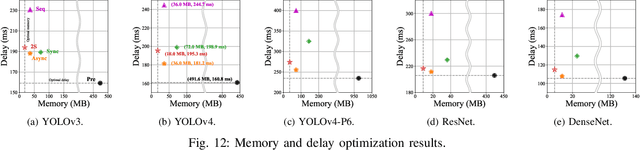

Abstract: When executing a deep neural network (DNN), its model parameters are loaded into GPU memory before execution, incurring a significant GPU memory burden. Some studies reduce GPU memory usage by exploiting CPU memory as a swap device. However, this approach is not applicable in most embedded systems with integrated GPUs, where the CPU and GPU share a common memory. To address this, we present Demand Layering, which employs a fast solid-state drive (SSD) as a co-running partner of a GPU and exploits the layer-by-layer execution of DNNs. In our approach, a DNN is loaded and executed layer by layer, reducing memory usage to the order of a single layer. We also developed a pipeline architecture that hides most of the additional delay caused by interleaving parameter loading with layer execution. Our implementation shows a 96.5% memory reduction with just 14.8% delay overhead on average for representative DNNs. Furthermore, by exploiting the memory-delay tradeoff, near-zero delay overhead (under 1 ms) can be achieved with slightly increased memory usage (still an 88.4% reduction), showing the great potential of Demand Layering.
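The pipelining idea in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: `run_layered`, `load_params`, `execute_layer`, and `max_buffered` are hypothetical names, a background thread stands in for the SSD reader, and a plain function call stands in for GPU execution. A bounded queue overlaps the loading of upcoming layers with the execution of the current one while limiting how many layers are resident at once.

```python
import queue
import threading


def run_layered(layer_ids, load_params, execute_layer, x, max_buffered=1):
    """Execute layers in order while a background thread prefetches parameters.

    load_params(layer_id) -> params   (hypothetical stand-in for an SSD read)
    execute_layer(params, x) -> x     (hypothetical stand-in for GPU execution)

    At most max_buffered + 2 layers' parameters are alive at once in this
    sketch: those in the queue, one held by the loader waiting to enqueue,
    and one being executed.
    """
    layer_ids = list(layer_ids)
    buf = queue.Queue(maxsize=max_buffered)

    def loader():
        for lid in layer_ids:
            try:
                item = ("ok", load_params(lid))
            except Exception as exc:  # forward load errors to the consumer
                item = ("err", exc)
            buf.put(item)  # blocks while the buffer is full
            if item[0] == "err":
                return

    worker = threading.Thread(target=loader, daemon=True)
    worker.start()
    for _ in layer_ids:
        status, payload = buf.get()  # waits only if loading is the bottleneck
        if status == "err":
            raise payload
        x = execute_layer(payload, x)
    worker.join()
    return x


if __name__ == "__main__":
    # Toy "model": each layer multiplies the input by a stored weight.
    weights = {0: 2, 1: 3, 2: 4}
    print(run_layered(weights.keys(), weights.__getitem__, lambda w, v: w * v, 1))
```

Raising `max_buffered` in this sketch trades extra resident memory for more slack against slow loads, which mirrors the memory-delay tradeoff the abstract mentions.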

Energy-Efficient Adaptive System Reconfiguration for Dynamic Deadlines in Autonomous Driving

Jun 03, 2021

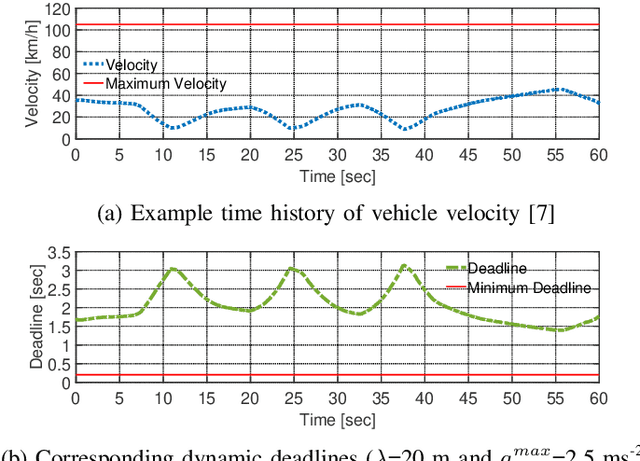

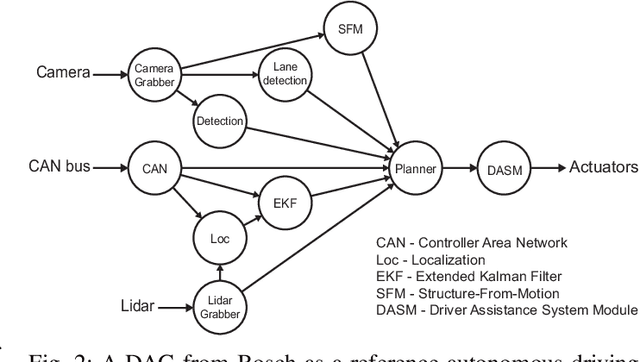

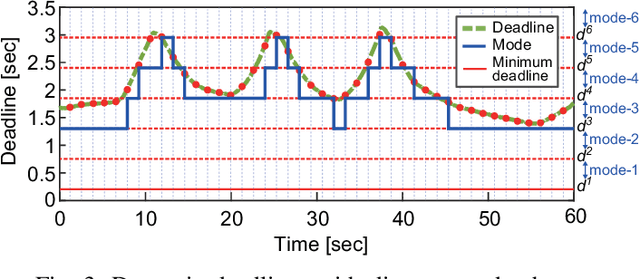

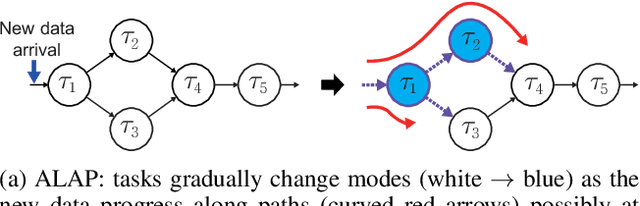

Abstract: The increasing computing demands of autonomous driving applications make energy optimizations critical for reducing battery capacity and vehicle weight. Current energy optimization methods typically target traditional real-time systems with static deadlines, resulting in conservative energy savings that cannot exploit the additional optimization opportunities offered by dynamic deadlines, which arise from changes in the vehicle's velocity and driving context. We present an adaptive system optimization and reconfiguration approach that dynamically adapts the scheduling parameters and processor speeds to satisfy dynamic deadlines while consuming as little energy as possible. Our experimental results with an autonomous driving task set from Bosch and real-world driving data show energy reductions up to 46.4% on average in typical dynamic driving scenarios compared with traditional static energy optimization methods, demonstrating great potential for dynamic energy optimization gains by exploiting dynamic deadlines.
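As a rough illustration of why a relaxed deadline permits energy savings, the sketch below picks the lowest processor frequency that still meets the current deadline. It is a deliberately simplified single-task model, not the approach from the paper: the function name `pick_frequency`, the cycle-count execution model, and the example frequencies are all assumptions made for illustration.

```python
def pick_frequency(wcet_cycles, deadline_s, freqs_hz):
    """Return the lowest frequency that meets the deadline, or None.

    Assumes (for illustration only) that execution time equals
    wcet_cycles / frequency and that a lower frequency costs less energy.
    """
    for f in sorted(freqs_hz):
        if wcet_cycles / f <= deadline_s:
            return f
    return None  # no available frequency satisfies this deadline


if __name__ == "__main__":
    freqs = [1e9, 2e9, 3e9]  # hypothetical available processor speeds
    # A looser deadline (e.g. at low vehicle speed) allows a slower setting.
    print(pick_frequency(2e8, 0.25, freqs))
    # A tighter deadline forces a faster, more energy-hungry setting.
    print(pick_frequency(2e8, 0.15, freqs))
```

Re-running such a selection whenever the deadline changes is the basic intuition behind adapting to dynamic deadlines; a static method would have to fix the frequency for the tightest deadline it could ever face.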
