Saadat M. Alhashmi

On the Combined Use of Extrinsic Semantic Resources for Medical Information Search

May 17, 2020

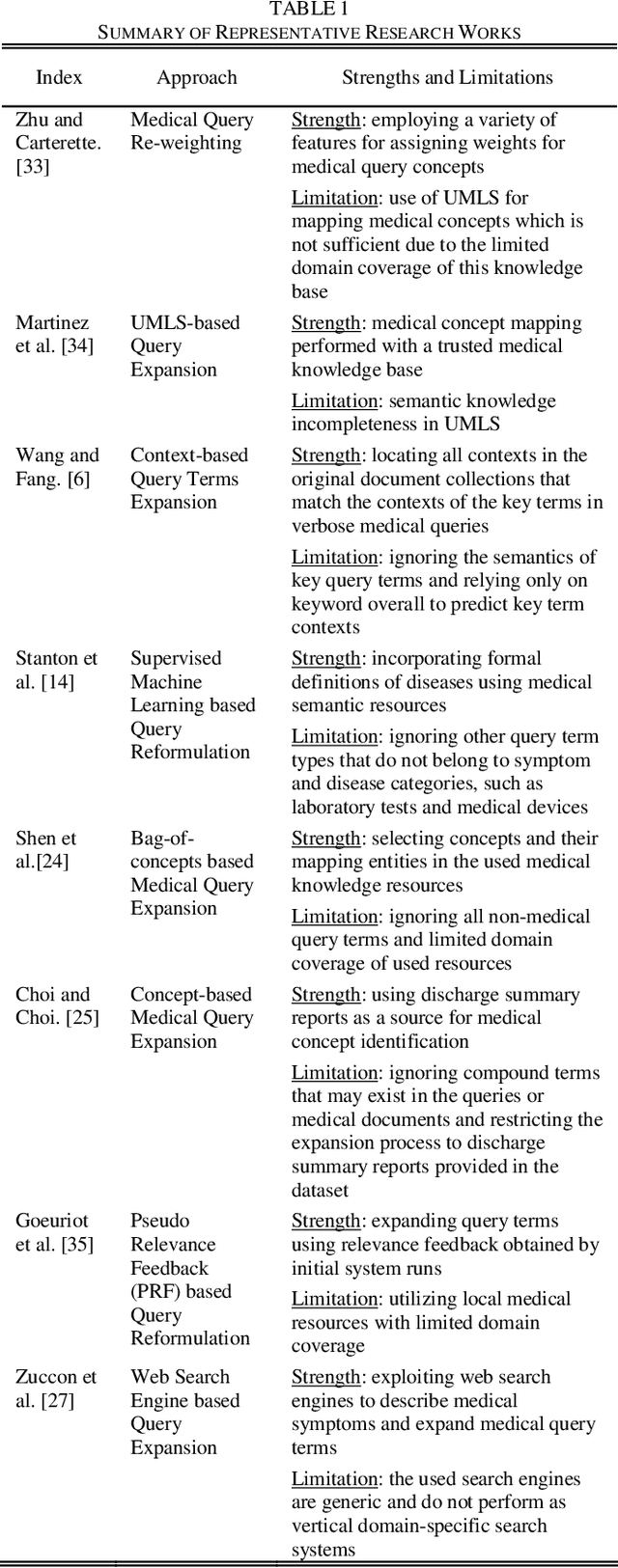

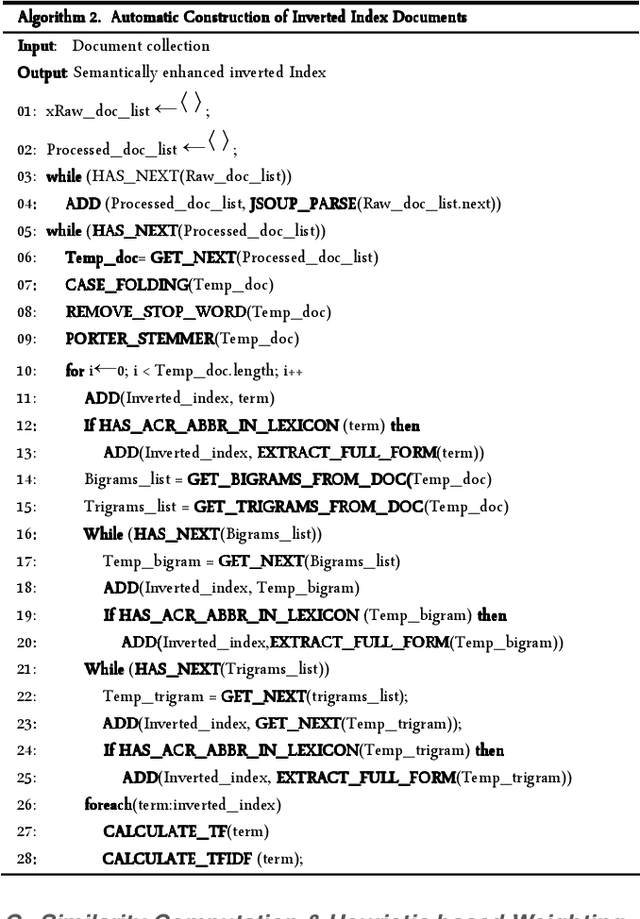

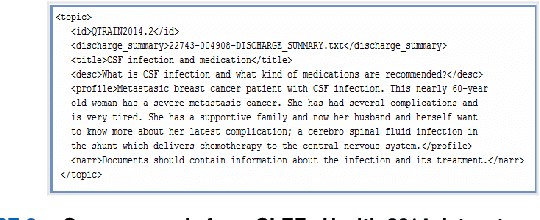

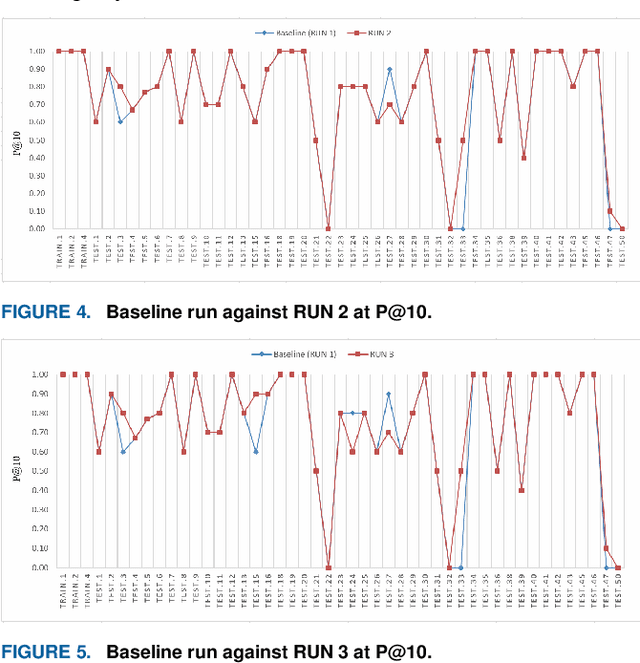

Abstract: Semantic concepts and relations encoded in domain-specific ontologies and other medical semantic resources play a crucial role in deciphering terms in medical queries and documents. The exploitation of these resources for tackling the semantic gap issue has been widely studied in the literature. However, there are challenges that hinder their widespread use in real-world applications. Among these challenges is the insufficient knowledge encoded in any individual medical ontology, a problem that is magnified when users express their information needs using long-winded natural language queries. In this context, many of the users' query terms are either unrecognized by the ontologies in use or cause false positives to be retrieved, which degrades the quality of current medical information search approaches. In this article, we explore the combination of multiple extrinsic semantic resources in the development of a full-fledged medical information search framework to: i) highlight and expand head medical concepts in verbose medical queries (i.e., concepts among the query terms that significantly contribute to the informativeness and intent of a given query), ii) build semantically enhanced inverted index documents, and iii) contribute to a heuristic weighting technique in the query-document matching process. To demonstrate the effectiveness of the proposed approach, we conducted several experiments over the CLEF eHealth 2014 dataset. Findings indicate that the proposed method, which combines several extrinsic semantic resources, is more effective than related approaches in terms of precision.
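The abstract does not give implementation details, so the following is only a minimal sketch of the general idea of combining several semantic resources to enrich an inverted index and weight matches. The two resources (`ONTOLOGY`, `THESAURUS`), their entries, the function names, and the 1.0/0.5 weights are all hypothetical and are not taken from the paper.

```python
from collections import defaultdict

# Two toy "semantic resources"; every entry is invented for illustration.
ONTOLOGY = {"infarction": {"mi"}, "hypertension": {"htn"}}
THESAURUS = {"infarction": {"attack"}, "pain": {"ache"}}

def expand(term):
    """Return the union of related terms found across all resources."""
    related = set()
    for resource in (ONTOLOGY, THESAURUS):
        related |= resource.get(term, set())
    return related

def build_index(docs):
    """Build an inverted index: term -> {doc_id: weight}.

    Literal terms get weight 1.0; terms added through expansion get an
    assumed lower weight of 0.5 and never overwrite a literal match.
    """
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term][doc_id] = 1.0
            for rel in expand(term):
                index[rel].setdefault(doc_id, 0.5)
    return index

def search(index, query):
    """Rank documents by the summed weights of the matching query terms."""
    scores = defaultdict(float)
    for term in query.lower().split():
        for doc_id, weight in index.get(term, {}).items():
            scores[doc_id] += weight
    # Highest score first; ties broken by document id.
    return sorted(scores, key=lambda d: (-scores[d], d))

docs = {"d1": "myocardial infarction treatment", "d2": "chest pain"}
index = build_index(docs)
results = search(index, "heart attack")
```

Here the query term "attack" never appears in `d1`, yet `d1` is retrieved because one of the resources relates it to "infarction". A single resource would miss either "mi" or "attack", which is the coverage gap the abstract attributes to individual ontologies.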

Review-Level Sentiment Classification with Sentence-Level Polarity Correction

Nov 07, 2015

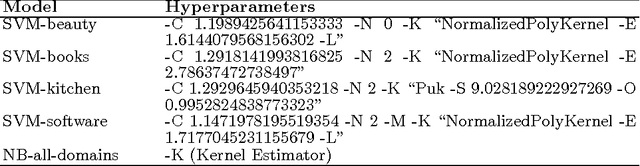

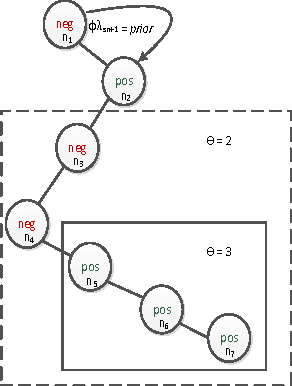

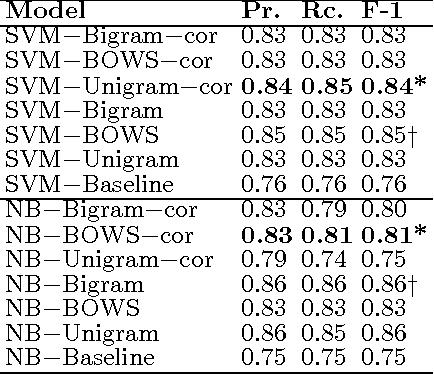

Abstract: We propose an effective technique for solving the review-level sentiment classification problem by using sentence-level polarity correction. Our polarity correction technique takes into account the consistency of the polarities (positive and negative) of sentences within each product review before performing the actual machine learning task. Sentences with inconsistent polarities are removed, while sentences with consistent polarities are used to train state-of-the-art classifiers. The technique achieves better results on different types of product reviews and outperforms baseline models that lack the correction step. Experimental results show an average F-measure of 82% across four product review domains.
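The correction step described above can be sketched as a preprocessing filter. This is an illustration under stated assumptions, not the authors' implementation: the toy lexicon, the word-count polarity scorer, and the choice to treat "consistent" as "not contradicting the review's label" (so neutral sentences are kept) are all hypothetical.

```python
import re

# Toy lexicon, purely illustrative (not from the paper).
POSITIVE = {"great", "good", "love", "excellent", "sharp"}
NEGATIVE = {"bad", "poor", "terrible", "broke", "noisy"}

def sentence_polarity(sentence):
    """Return +1, -1, or 0 based on a simple lexicon word count."""
    words = re.findall(r"[a-z']+", sentence.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return (score > 0) - (score < 0)

def correct_review(text, label):
    """Drop sentences whose polarity contradicts the review label (+1 or -1)."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [s for s in sentences if sentence_polarity(s) != -label]

review = "The screen is sharp and the sound is great. The battery is terrible. I love it."
kept = correct_review(review, +1)
```

For this positive review, the sentence about the battery is removed and the two remaining sentences would be passed on to whatever classifier is trained afterwards.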
