Saúl Alexis Heredia Pérez

Object State Estimation Through Robotic Active Interaction for Biological Autonomous Drilling

Mar 06, 2025

Abstract: Estimating the state of biological specimens is challenging because microscopic vision offers only limited observation. For instance, during mouse skull drilling, the appearance of the bone changes little as the tissue is thinned, owing to its semi-transparency and the high magnification of the microscope. To obtain the object's state, we introduce a method that estimates the state of biological specimens through active interaction, based on the specimen's deflection. The method is integrated into the autonomous drilling system developed in our previous work to enhance it. The method and the integrated system were evaluated in 12 autonomous eggshell drilling trials. The results show that the system achieved a 91.7% success ratio and a 75% detachable ratio, showcasing its potential applicability to more complex surgical procedures such as mouse skull craniotomy. This research paves the way for further development of autonomous robotic systems capable of estimating an object's state through active interaction.
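The abstract does not detail how deflection is mapped to object state. A minimal sketch, assuming the robot applies small probing forces at the drilling site and records the resulting deflection: fit an effective stiffness `k` by least squares for the linear model F = k·d (no intercept), then flag the site as thin once stiffness falls below a fraction of its initial value. The function names `fit_stiffness` and `estimate_state` and the `thin_ratio` threshold are hypothetical and are not the authors' method.

```python
from typing import Sequence


def fit_stiffness(forces: Sequence[float], deflections: Sequence[float]) -> float:
    """Least-squares stiffness k for the linear model F = k * d (no intercept).

    Minimizing sum((F_i - k * d_i)^2) gives k = sum(F_i * d_i) / sum(d_i^2).
    """
    if len(forces) != len(deflections) or len(forces) == 0:
        raise ValueError("need equally sized, non-empty force and deflection samples")
    numerator = sum(f * d for f, d in zip(forces, deflections))
    denominator = sum(d * d for d in deflections)
    if denominator == 0.0:
        raise ValueError("all deflections are zero; stiffness is undefined")
    return numerator / denominator


def estimate_state(k_current: float, k_initial: float, thin_ratio: float = 0.3) -> str:
    """Hypothetical rule: the site is 'thin' once stiffness drops below thin_ratio * k_initial."""
    if k_initial <= 0.0:
        raise ValueError("initial stiffness must be positive")
    return "thin" if k_current < thin_ratio * k_initial else "thick"


if __name__ == "__main__":
    # Illustrative probe readings in arbitrary units, not data from the paper.
    k0 = fit_stiffness([1.0, 2.0, 3.0], [0.1, 0.2, 0.3])  # intact shell: small deflection
    k1 = fit_stiffness([1.0, 2.0, 3.0], [0.5, 1.0, 1.5])  # same forces, larger deflection
    print(round(k0, 2), round(k1, 2), estimate_state(k1, k0))
```

The through-origin fit assumes zero deflection at zero force; a real system would also need to handle sensor offsets, contact detection, and tool compliance, which this sketch ignores.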

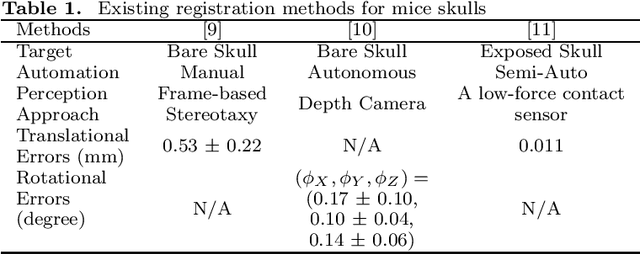

A Cranial-Feature-Based Registration Scheme for Robotic Micromanipulation Using a Microscopic Stereo Camera System

Oct 24, 2024

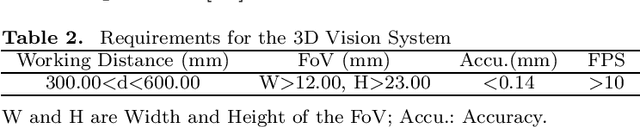

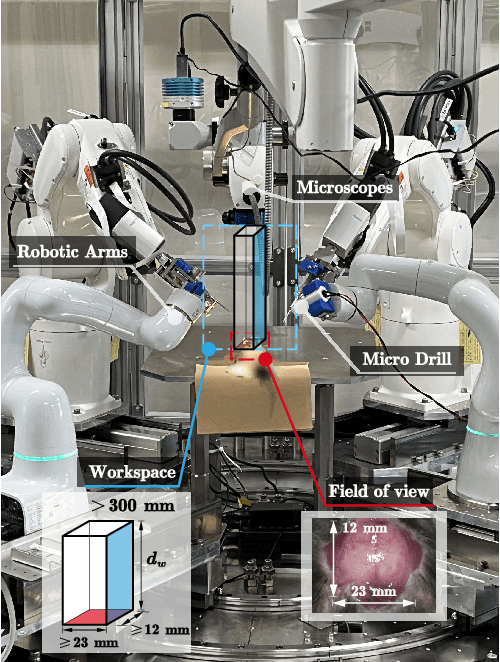

Abstract: Biological specimens exhibit significant variations in size and shape, which challenge autonomous robotic manipulation. We focus on the mouse skull window creation task to illustrate these challenges. The study introduces a microscopic stereo camera system (MSCS) enhanced by a linear model for depth perception. Alongside this, a precise registration scheme is developed for the partially exposed mouse cranial surface, employing a CNN-based constrained and colorized registration strategy. These methods are integrated with the MSCS for robotic micromanipulation tasks. The MSCS demonstrated a high precision of 0.10 mm $\pm$ 0.02 mm, measured in a step height experiment, and real-time 3D reconstruction at 30 FPS. The registration scheme achieved a translational error of 1.13 mm $\pm$ 0.31 mm and a rotational error of 3.38$^{\circ}$ $\pm$ 0.89$^{\circ}$, tested on 105 consecutive frames at an average speed of 1.60 FPS. This study presents the application of an MSCS and a novel registration scheme to enhance the precision and accuracy of robotic micromanipulation in scientific and surgical settings. These innovations offer an automation methodology for handling the challenges of microscopic manipulation, paving the way for more accurate, efficient, and less invasive procedures in various fields of microsurgery and scientific research.
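The abstract reports translational and rotational registration errors as mean $\pm$ standard deviation over 105 frames, but does not state how each per-frame error is computed. A minimal sketch, assuming the common definitions: the Euclidean distance between estimated and ground-truth translations, and the angle of the relative rotation `R_est^T R_gt`. The function names and the use of the population standard deviation are assumptions for illustration.

```python
import math
from statistics import mean, pstdev
from typing import Sequence

Vector = Sequence[float]
Matrix = Sequence[Sequence[float]]


def translational_error(t_est: Vector, t_gt: Vector) -> float:
    """Euclidean distance between estimated and ground-truth translations."""
    return math.dist(t_est, t_gt)


def rotational_error_deg(r_est: Matrix, r_gt: Matrix) -> float:
    """Angle in degrees of the relative rotation R_est^T R_gt.

    Uses trace(R_est^T R_gt) = sum_ij R_est[i][j] * R_gt[i][j] and
    angle = arccos((trace - 1) / 2), clamped to [-1, 1] for numerical safety.
    """
    trace = sum(r_est[i][j] * r_gt[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def summarize(errors: Sequence[float]) -> tuple[float, float]:
    """Mean and population standard deviation over frames (reporting convention assumed)."""
    return mean(errors), pstdev(errors)


def rot_z(deg: float) -> list[list[float]]:
    """Rotation about the z-axis, used here only to build example poses."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    # Illustrative (estimated, ground-truth) poses per frame, not data from the paper.
    frames = [
        ([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], rot_z(0.0), rot_z(3.0)),
        ([0.5, 0.5, 0.0], [0.5, 1.5, 1.0], rot_z(10.0), rot_z(14.0)),
    ]
    t_errs = [translational_error(te, tg) for te, tg, _, _ in frames]
    r_errs = [rotational_error_deg(re, rg) for _, _, re, rg in frames]
    print("translation (mean, std):", summarize(t_errs))
    print("rotation deg (mean, std):", summarize(r_errs))
```

The trace identity avoids an explicit matrix transpose and product; clamping the cosine guards against values slightly outside [-1, 1] caused by floating-point round-off.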
