S. Maryam Hosseini

Distributed Learning and Inference Systems: A Networking Perspective

Jan 09, 2025

Abstract: Machine learning models have achieved, and in some cases surpassed, human-level performance in various tasks, mainly through centralized training of static models and the use of large models stored in centralized clouds for inference. However, this centralized approach has several drawbacks, including privacy concerns, high storage demands, a single point of failure, and significant computing requirements. These challenges have driven interest in developing alternative decentralized and distributed methods for AI training and inference. Distribution introduces additional complexity, as it requires managing multiple moving parts. To address these complexities and fill a gap in the development of distributed AI systems, this work proposes a novel framework, Data and Dynamics-Aware Inference and Training Networks (DA-ITN). The different components of DA-ITN and their functions are explored, and the associated challenges and research areas are highlighted.

Cluster Based Secure Multi-Party Computation in Federated Learning for Histopathology Images

Aug 21, 2022

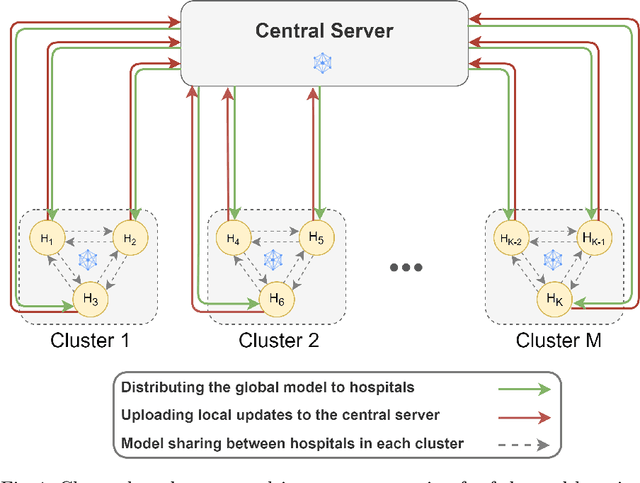

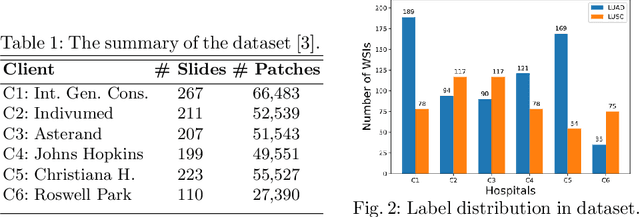

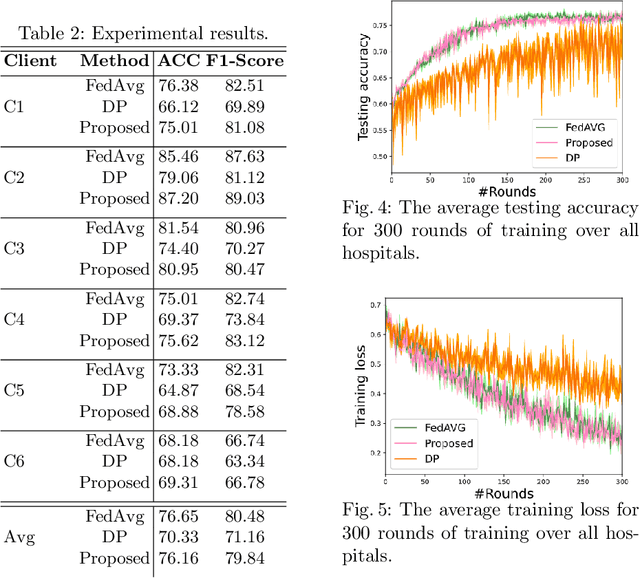

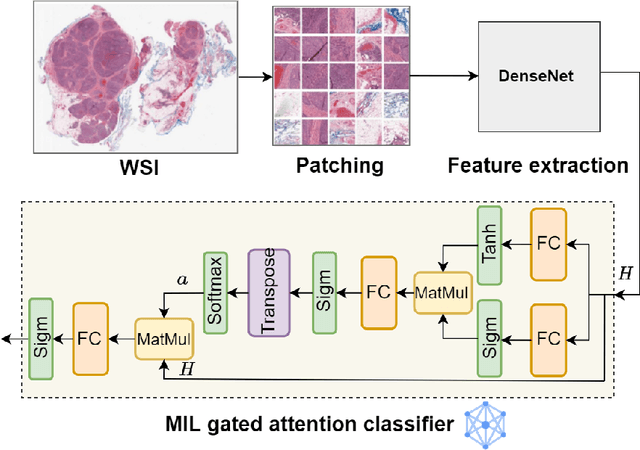

Abstract: Federated learning (FL) is a decentralized method enabling hospitals to collaboratively learn a model without sharing private patient data for training. In FL, participant hospitals periodically exchange training results rather than training samples with a central server. However, having access to model parameters or gradients can expose private training data samples. To address this challenge, we adopt secure multiparty computation (SMC) to establish a privacy-preserving federated learning framework. In our proposed method, the hospitals are divided into clusters. After local training, each hospital splits its model weights among other hospitals in the same cluster such that no single hospital can retrieve other hospitals' weights on its own. Then, all hospitals sum up the received weights, sending the results to the central server. Finally, the central server aggregates the results, retrieving the average of models' weights and updating the model without having access to individual hospitals' weights. We conduct experiments on a publicly available repository, The Cancer Genome Atlas (TCGA). We compare the performance of the proposed framework with differential privacy and federated averaging as the baseline. The results reveal that compared to differential privacy, our framework can achieve higher accuracy with no privacy leakage risk at a cost of higher communication overhead.
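The clustered aggregation described in this abstract can be illustrated with additive secret sharing. The following is a minimal sketch only, not the paper's implementation: the function names, the toy two-dimensional weight vectors, the choice that each hospital keeps one share for itself, and the use of bounded floating-point random shares are all assumptions made for illustration. A real SMC protocol would typically work over a finite field or fixed-point integers to obtain formal privacy guarantees.

```python
import random


def split_into_shares(weights, n_shares, rng):
    """Split a weight vector into n_shares random vectors that sum to it.

    Assumption: shares are plain floats drawn from [-1, 1]; this is for
    illustration and does not give the guarantees of finite-field sharing.
    """
    shares = [[rng.uniform(-1.0, 1.0) for _ in weights]
              for _ in range(n_shares - 1)]
    # The last share is chosen so that all shares add up to the original weights.
    last = [w - sum(s[i] for s in shares) for i, w in enumerate(weights)]
    return shares + [last]


def cluster_partial_sums(cluster_weights, rng):
    """Simulate one cluster: every hospital sends one share to each member
    (keeping one for itself, an assumption), then each member sums the
    shares it received. Returns one partial sum per hospital."""
    n = len(cluster_weights)
    dim = len(cluster_weights[0])
    received = [[0.0] * dim for _ in range(n)]
    for weights in cluster_weights:
        shares = split_into_shares(weights, n, rng)
        for member, share in enumerate(shares):
            for i, value in enumerate(share):
                received[member][i] += value
    return received


def server_average(all_partial_sums, n_hospitals):
    """Central server: add up every partial sum and divide by the number of
    hospitals. It only ever sees sums of shares, not individual weights."""
    dim = len(all_partial_sums[0])
    total = [0.0] * dim
    for partial in all_partial_sums:
        for i, value in enumerate(partial):
            total[i] += value
    return [t / n_hospitals for t in total]


# Hypothetical example: five hospitals in two clusters, two weights each.
rng = random.Random(0)
clusters = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[5.0, 6.0], [7.0, 8.0], [9.0, 10.0]],
]
partials = [p for cluster in clusters for p in cluster_partial_sums(cluster, rng)]
n_hospitals = sum(len(cluster) for cluster in clusters)
average = server_average(partials, n_hospitals)
```

In this sketch the server's result matches the plain federated average of the five weight vectors up to floating-point rounding, while each partial sum it receives mixes shares from every hospital in a cluster. The extra share exchange inside each cluster is where the additional communication overhead mentioned in the abstract comes from.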
