Ryan K. L. Ko

Positive-Unlabeled Learning using Random Forests via Recursive Greedy Risk Minimization

Oct 16, 2022

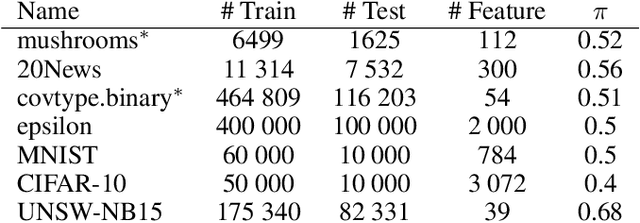

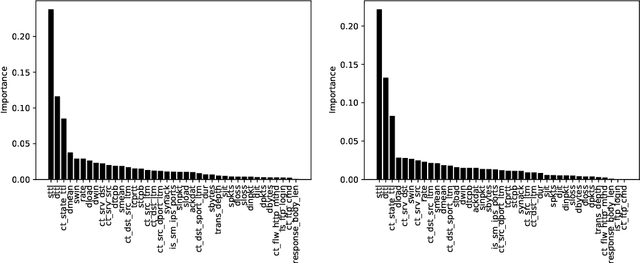

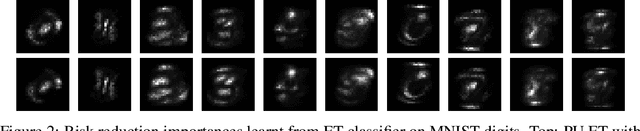

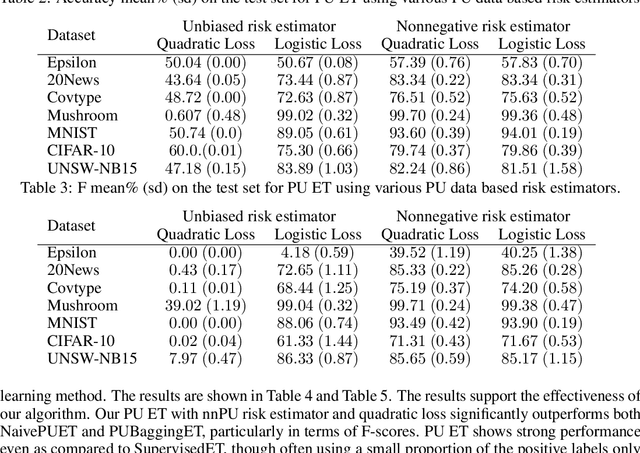

Abstract: The need to learn from positive and unlabeled data, or PU learning, arises in many applications and has attracted increasing interest. While random forests are known to perform well on many tasks with positive and negative data, recent PU algorithms are generally based on deep neural networks, and the potential of tree-based PU learning is under-explored. In this paper, we propose new random forest algorithms for PU learning. Key to our approach is a new interpretation of decision tree algorithms for positive and negative data as *recursive greedy risk minimization algorithms*. We extend this perspective to the PU setting to develop new decision tree learning algorithms that directly minimize PU-data-based estimators of the expected risk. This allows us to develop an efficient PU random forest algorithm, PU extra trees. Our approach has three desirable properties: it is robust to the choice of loss function, in the sense that various loss functions lead to the same decision trees; it requires little hyperparameter tuning compared with neural-network-based PU learning; and it supports a feature importance measure that directly quantifies a feature's contribution to risk minimization. Our algorithms demonstrate strong performance on several datasets. Our code is available at https://github.com/puetpaper/PUExtraTrees.
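To make "recursive greedy risk minimization" concrete, here is a minimal Python sketch under stated assumptions; it is not the authors' PU extra trees code (the repository above has that). It assumes the standard unbiased PU risk estimator with a known class prior `prior`, squared loss, and a constant leaf score clipped to [-1, 1]. Under these assumptions each leaf's share of the empirical risk has a closed form, so a candidate split is scored by summing its two children's leaf risks, and a node is split only if that lowers the risk. Thresholds are found by exhaustive (CART-style) search rather than the randomized thresholds used by extra trees, and all function names (`leaf_value_and_risk`, `best_split`, `build`, `fit_pu_tree`, `predict_one`) are hypothetical.

```python
import numpy as np

# Assumption: unbiased PU risk, squared loss, known class prior.
# Illustrative sketch only; not the PUExtraTrees implementation.


def leaf_value_and_risk(p_frac, u_frac, prior):
    """Best constant score v in [-1, 1] for one leaf, and that leaf's risk.

    p_frac: fraction of all labeled positives that fall in the leaf.
    u_frac: fraction of all unlabeled points that fall in the leaf.
    With l(v, y) = (v - y)**2, the leaf's share of the unbiased PU risk
        prior*E_P[l(v, +1)] + E_U[l(v, -1)] - prior*E_P[l(v, -1)]
    simplifies to u_frac*(v + 1)**2 - 4*a*v, where a = prior*p_frac.
    """
    a = prior * p_frac
    if a == 0.0:
        return -1.0, 0.0                      # no positive mass: predict negative
    if a >= u_frac:
        return 1.0, 4.0 * u_frac - 4.0 * a    # minimizer clipped at v = +1
    return 2.0 * a / u_frac - 1.0, 4.0 * a - 4.0 * a * a / u_frac


def best_split(X, is_pos, idx, prior, n_pos, n_unl):
    """Exhaustive search for the (feature, threshold) with lowest summed leaf risk."""
    best = None  # (risk, feature, threshold)
    for j in range(X.shape[1]):
        order = idx[np.argsort(X[idx, j], kind="stable")]
        xs, pos = X[order, j], is_pos[order]
        cum_p, cum_u = np.cumsum(pos), np.cumsum(~pos)  # counts up to each cut
        for k in range(len(order) - 1):
            if xs[k] == xs[k + 1]:
                continue  # equal feature values cannot be separated
            _, r_left = leaf_value_and_risk(cum_p[k] / n_pos, cum_u[k] / n_unl, prior)
            _, r_right = leaf_value_and_risk((cum_p[-1] - cum_p[k]) / n_pos,
                                             (cum_u[-1] - cum_u[k]) / n_unl, prior)
            if best is None or r_left + r_right < best[0]:
                best = (r_left + r_right, j, xs[k])  # split rule: x[j] <= xs[k]
    return best


def build(X, is_pos, idx, prior, n_pos, n_unl, depth, max_depth):
    """Recursively split a node while the best split lowers the empirical PU risk."""
    p = is_pos[idx].sum() / n_pos
    u = (~is_pos[idx]).sum() / n_unl
    value, risk = leaf_value_and_risk(p, u, prior)
    if depth < max_depth and len(idx) > 1:
        split = best_split(X, is_pos, idx, prior, n_pos, n_unl)
        if split is not None and split[0] < risk - 1e-12:
            _, j, t = split
            left, right = idx[X[idx, j] <= t], idx[X[idx, j] > t]
            return (j, t,
                    build(X, is_pos, left, prior, n_pos, n_unl, depth + 1, max_depth),
                    build(X, is_pos, right, prior, n_pos, n_unl, depth + 1, max_depth))
    return value  # leaf: constant score in [-1, 1]


def fit_pu_tree(X_pos, X_unl, prior, max_depth=3):
    """Hypothetical helper: grow one PU tree from positive and unlabeled arrays."""
    X = np.vstack([X_pos, X_unl]).astype(float)
    is_pos = np.concatenate([np.ones(len(X_pos), dtype=bool),
                             np.zeros(len(X_unl), dtype=bool)])
    return build(X, is_pos, np.arange(len(X)), prior,
                 len(X_pos), len(X_unl), 0, max_depth)


def predict_one(tree, x):
    """Walk internal nodes (feature, threshold, left, right) down to a leaf score."""
    while isinstance(tree, tuple):
        j, t, left, right = tree
        tree = left if x[j] <= t else right
    return tree


# Toy 1-D data: labeled positives near 2-3; unlabeled mixes both classes.
X_pos = np.array([[2.0], [2.5], [3.0]])
X_unl = np.array([[0.0], [0.5], [1.0], [2.2], [2.7], [2.9]])
tree = fit_pu_tree(X_pos, X_unl, prior=0.5, max_depth=1)
print(predict_one(tree, [0.2]), predict_one(tree, [2.6]))  # -1.0 1.0
```

Because this unbiased estimator can become negative, unrestricted splitting tends to isolate individual labeled positives and overfit, which is why the sketch caps depth with `max_depth`; the non-negative PU risk estimator is one known remedy for that failure mode.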

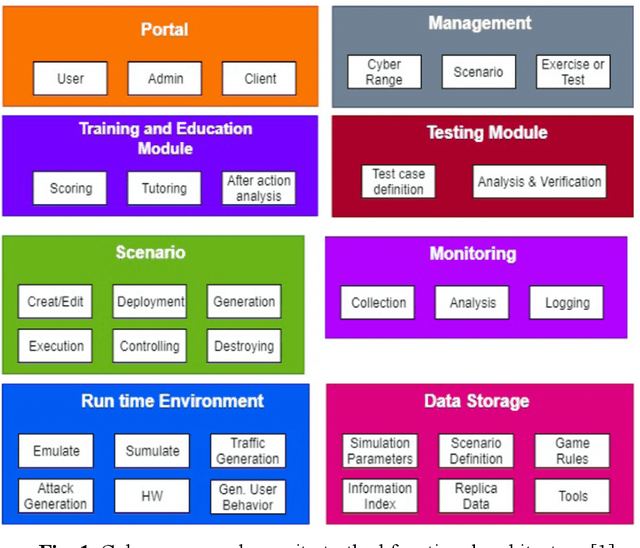

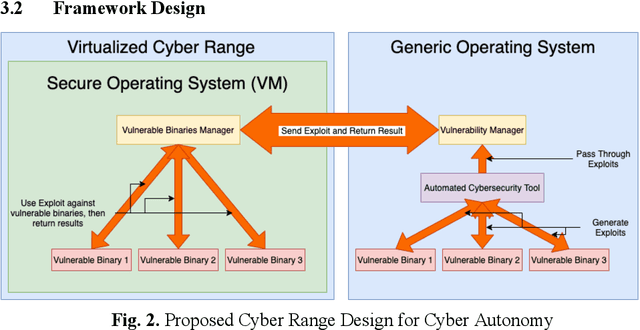

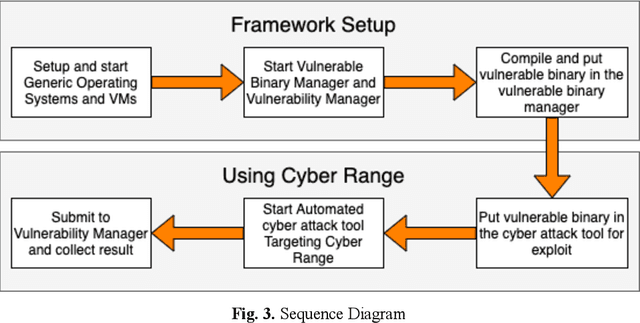

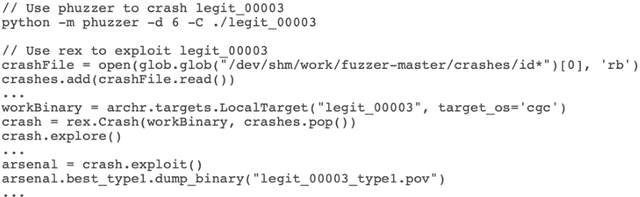

Pandora: A Cyber Range Environment for the Safe Testing and Deployment of Autonomous Cyber Attack Tools

Sep 24, 2020

Abstract: Cybersecurity tools are increasingly automated with artificial intelligence (AI) capabilities to match the exponential scale of attacks, compensate for the relatively slow rate at which new cybersecurity talent is trained, and improve the accuracy and performance of both tools and users. However, the safe and appropriate use of autonomous cyber attack tools, especially during their development stages, remains a largely unaddressed gap. Our survey of current literature and tools showed that most existing cyber range designs rely on manual tools and have not considered incorporating automated tools or the security issues such tools may cause. In other words, there is still room for a novel cyber range design that allows security researchers to safely deploy autonomous tools and perform automated tool testing if needed. In this paper, we introduce Pandora, a safe testing environment that allows security researchers and cyber range users to experiment with automated cyber attack tools that have strong potential for use and, at the same time, strong potential for risk. Unlike existing testbeds and cyber ranges, which are directly compatible with enterprise computer systems and therefore risk propagation across the enterprise network, our test system is intentionally designed to be incompatible with real-world enterprise computing systems, reducing the risk of attack propagation into actual infrastructure. Our design also provides a tool to convert in-development automated cyber attack tools into executable test binaries for validation and use in realistic enterprise system environments if required. Our experiments tested automated attack tools on the proposed system to validate its usability. Our experiments also demonstrated the safety of our environment through compatibility testing with simple malicious code.
