Ruigang Niu

Viewpoint Integration and Registration with Vision Language Foundation Model for Image Change Understanding

Sep 15, 2023

Abstract: Recently, the development of pre-trained vision language foundation models (VLFMs) has led to remarkable performance in many tasks. However, these models tend to have strong single-image understanding capability but lack the ability to understand multiple images. Therefore, they cannot be directly applied to image change understanding (ICU), which requires models to capture actual changes between multiple images and describe them in language. In this paper, we find that existing VLFMs perform poorly when applied directly to ICU because of the following problems: (1) VLFMs generally learn the global representation of a single image, while ICU requires capturing nuances between multiple images. (2) The ICU performance of VLFMs is significantly affected by viewpoint variations, because the relationships between objects are altered when the viewpoint changes. To address these problems, we propose a Viewpoint Integration and Registration method. Concretely, we introduce a fused adapter image encoder that fine-tunes pre-trained encoders by inserting designed trainable adapters and fused adapters, to effectively capture nuances between image pairs. Additionally, a viewpoint registration flow and a semantic emphasizing module are designed to reduce the performance degradation caused by viewpoint variations in the visual and semantic space, respectively. Experimental results on CLEVR-Change and Spot-the-Diff demonstrate that our method achieves state-of-the-art performance in all metrics.

HMANet: Hybrid Multiple Attention Network for Semantic Segmentation in Aerial Images

Jan 09, 2020

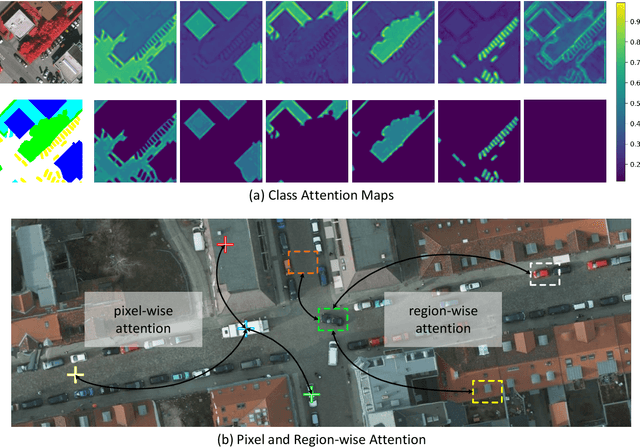

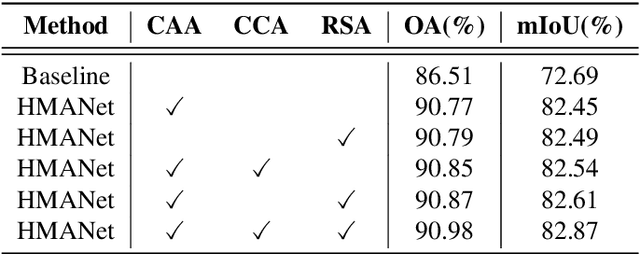

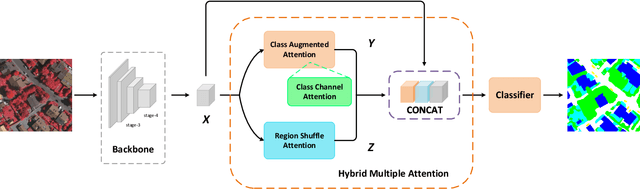

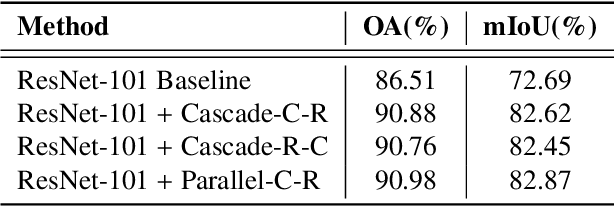

Abstract: Semantic segmentation in very high resolution (VHR) aerial images is one of the most challenging tasks in remote sensing image understanding. Most current approaches are based on deep convolutional neural networks (DCNNs) for their remarkable feature representation ability. Specifically, attention-based methods can effectively capture long-range dependencies and further reconstruct the feature maps for better representation. However, limited by considering only spatial and channel attention, and by the huge computational complexity of the self-attention mechanism, these methods are unlikely to model the effective semantic interdependencies between each pixel pair. In this work, we propose a novel attention-based framework named Hybrid Multiple Attention Network (HMANet) to adaptively capture global correlations from the perspective of space, channel and category in a more effective and efficient manner. Concretely, a class augmented attention (CAA) module embedded with a class channel attention (CCA) module can be used to compute category-based correlation and recalibrate the class-level information. Additionally, we introduce a simple yet effective region shuffle attention (RSA) module to reduce feature redundancy and improve the efficiency of the self-attention mechanism via region-wise representations. Extensive experimental results on the ISPRS Vaihingen and Potsdam benchmarks demonstrate the effectiveness and efficiency of our HMANet over other state-of-the-art methods.
