Roy Hayes

Inverse Reinforcement Learning for Strategy Identification

Jul 31, 2021

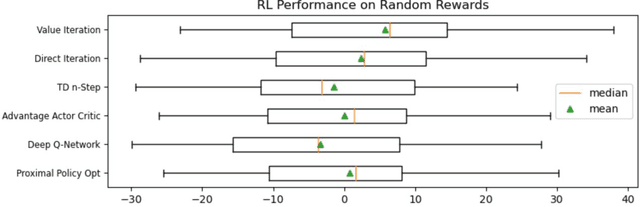

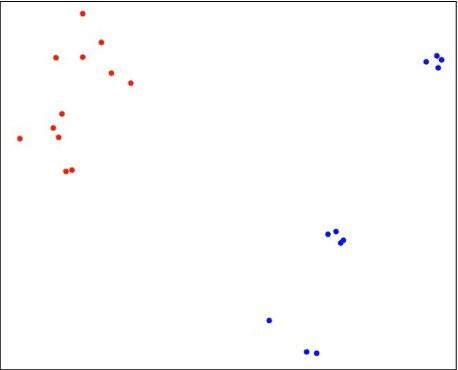

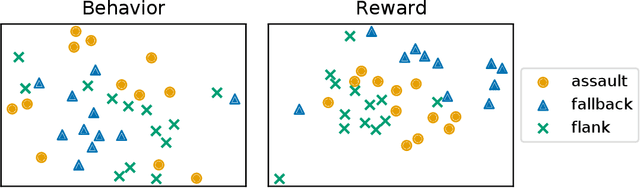

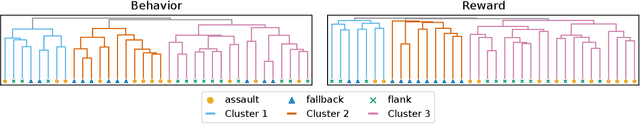

Abstract: In adversarial environments, one side can gain an advantage by identifying the opponent's strategy. For example, in combat games, if an opponent's strategy is identified as overly aggressive, one could lay a trap that exploits the opponent's aggressive nature. However, an opponent's strategy is not always apparent and may need to be estimated from observations of their actions. This paper proposes to use inverse reinforcement learning (IRL) to identify strategies in adversarial environments. Specifically, the contributions of this work are 1) the demonstration of this concept on gaming combat data generated from three pre-defined strategies and 2) the framework for using IRL to achieve strategy identification. The numerical experiments demonstrate that the recovered rewards can be identified using a variety of techniques. In this paper, the recovered rewards are visually displayed, clustered using unsupervised learning, and classified using a supervised learner.

Real Time Strategy Language

Jan 21, 2014

Abstract: Real Time Strategy (RTS) games provide a complex domain in which to test the latest artificial intelligence (AI) research. In much of the literature, AI systems have been limited to playing one game. Although this specialization has resulted in stronger AI gaming systems, it does not address the key concerns of AI researchers. AI researchers seek the development of AI agents that can autonomously interpret, learn, and apply new knowledge. To achieve human-level performance, current AI systems rely on the game-specific knowledge of an expert. This paper presents the full RTS language in hopes of shifting the current research focus to the development of general RTS agents. General RTS agents are AI gaming systems that can play any RTS game defined in the RTS language. This prevents game-specific knowledge from being hard-coded into the system, thereby facilitating research that addresses the fundamental concerns of artificial intelligence.
