Rouwan Wu

UAVD4L: A Large-Scale Dataset for UAV 6-DoF Localization

Jan 11, 2024

Abstract: Despite significant progress in global localization of Unmanned Aerial Vehicles (UAVs) in GPS-denied environments, existing methods remain constrained by the availability of datasets. Current datasets often focus on small-scale scenes and lack viewpoint variability, accurate ground truth (GT) poses, and UAV built-in sensor data. To address these limitations, we introduce a large-scale 6-DoF UAV dataset for localization (UAVD4L) and develop a two-stage 6-DoF localization pipeline (UAVLoc), which consists of offline synthetic data generation and online visual localization. Additionally, based on the 6-DoF estimator, we design a hierarchical system for tracking ground targets in 3D space. Experimental results on the new dataset demonstrate the effectiveness of the proposed approach. Code and dataset are available at https://github.com/RingoWRW/UAVD4L

Render-and-Compare: Cross-View 6 DoF Localization from Noisy Prior

Feb 13, 2023

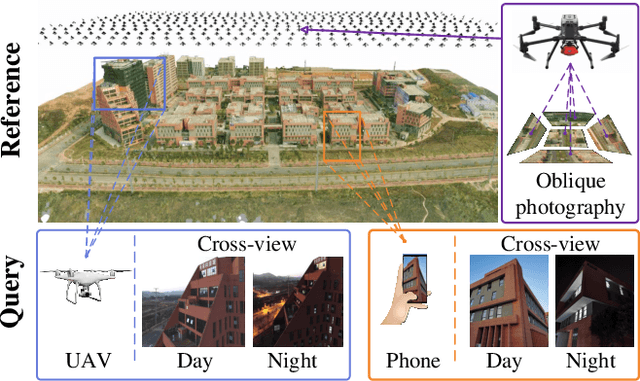

Abstract: Despite the significant progress in 6-DoF visual localization, research is mostly driven by ground-level benchmarks. Compared with aerial oblique photography, ground-level map collection lacks scalability and complete coverage. In this work, we propose to go beyond the traditional ground-level setting and exploit cross-view localization from aerial to ground. We solve this problem by formulating camera pose estimation as an iterative render-and-compare pipeline and enhancing its robustness by augmenting seeds from noisy initial priors. As no public dataset exists for the studied problem, we collect a new dataset that provides a variety of cross-view images from smartphones and drones, and we develop a semi-automatic system to acquire ground-truth poses for query images. We benchmark our method as well as several state-of-the-art baselines and demonstrate that our method outperforms other approaches by a large margin.
