Rongfeng Wei

WS-YOLO: Weakly Supervised Yolo Network for Surgical Tool Localization in Endoscopic Videos

Sep 27, 2023

Abstract: Automatically detecting and tracking surgical instruments in endoscopic video recordings would enable many useful applications that could transform different aspects of surgery. In robot-assisted surgery, potentially informative data such as the categories of surgical tools in use can be captured, but this data is sparse, noisy, and lacks spatial information. We propose a Weakly Supervised Yolo Network (WS-YOLO) for Surgical Tool Localization in Endoscopic Videos, which generates fine-grained semantic information (location and category) from the coarse-grained semantic information output by the da Vinci surgical robot. This significantly reduces the human annotation labor required while striking an optimal balance between the quantity of manually annotated data and detection performance. The source code is available at https://github.com/Breezewrf/Weakly-Supervised-Yolov8.

Visual Watermark Removal Based on Deep Learning

Feb 07, 2023

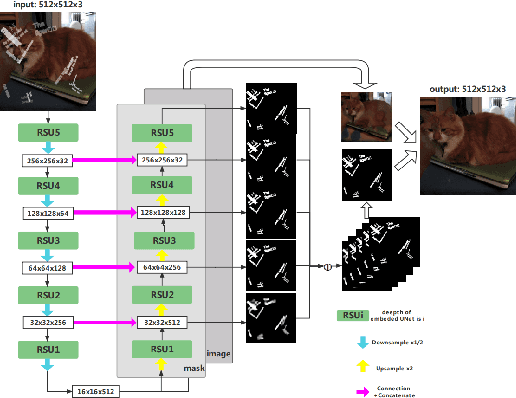

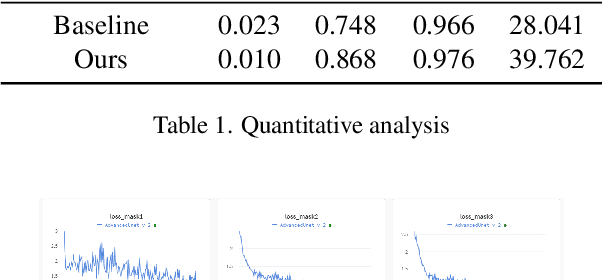

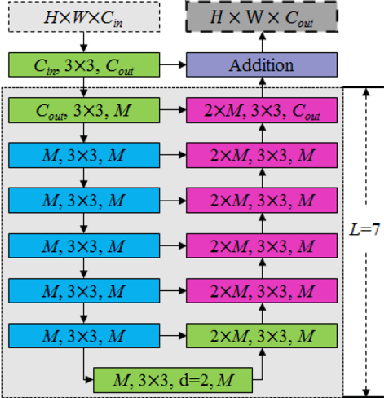

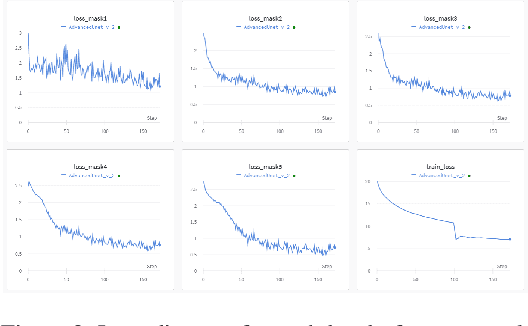

Abstract: As the internet age continues to advance, sharing images on social media has become commonplace. Watermarks are sometimes used to protect the ownership of an image; more often, however, one may wish to remove a watermark to recover the original, unobscured image. In this work, we propose a deep-learning-based technique for visual watermark removal. Inspired by the strong image translation performance of the U-structure, we propose an end-to-end deep neural network named AdvancedUnet that extracts and removes the visual watermark simultaneously. In addition, we embed RSU modules in place of the common residual blocks used in UNet, which increases the depth of the whole architecture without significantly increasing the computational cost. A deep-supervised hybrid loss guides the network to learn the transformation between the input image and the ground truth in a multi-scale, three-level hierarchy. Comparison experiments demonstrate the effectiveness of our method.
