Rom Gutman

From Observational Data to Clinical Recommendations: A Causal Framework for Estimating Patient-level Treatment Effects and Learning Policies

Jul 16, 2025

Abstract: We propose a framework for building patient-specific treatment recommendation models, drawing on the large recent literature on learning patient-level causal models and inspired by the target trial paradigm of Hernan and Robins. We focus on safety and validity, including the crucial issue of causal identification when using observational data. We do not provide a specific model, but rather a way to integrate existing methods and know-how into a practical pipeline. We further provide a real-world use case of treatment optimization for patients with heart failure who develop acute kidney injury during hospitalization. The results suggest our pipeline can improve patient outcomes over the current treatment regime.

Propensity score models are better when post-calibrated

Nov 02, 2022

Abstract: Theoretical guarantees for causal inference using propensity scores are partly based on the scores behaving like conditional probabilities. However, scores between zero and one, especially when produced by flexible statistical estimators, do not necessarily behave like probabilities. We perform a simulation study to assess the error in estimating the average treatment effect before and after applying a simple and well-established post-processing method to calibrate the propensity scores. We find that post-calibration reduces the error in effect estimation for expressive uncalibrated statistical estimators, and that this improvement is not mediated by better balancing. The larger the initial lack of calibration, the larger the improvement in effect estimation, with the effect on already-calibrated estimators being very small. Given the improvement in effect estimation and that post-calibration is computationally cheap, we recommend adopting it when modelling propensity scores with expressive models.
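To make the idea concrete, here is a minimal sketch of post-calibrating propensity scores before an inverse-probability-weighted estimate of the average treatment effect. The abstract does not name the calibration method, so this sketch assumes isotonic regression (pool-adjacent-violators) as one common choice. The function names `pava`, `calibrate_scores`, and `ipw_ate`, the clipping threshold `eps`, and the use of normalized weights are all illustrative assumptions, not the authors' code.

```python
def pava(values):
    """Pool-adjacent-violators: nondecreasing least-squares fit to `values`."""
    blocks = []  # each block stores [sum, count]
    for v in values:
        blocks.append([float(v), 1])
        # Merge backwards while the previous block's mean exceeds the last one's.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            s, n = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += n
    fitted = []
    for s, n in blocks:
        fitted.extend([s / n] * n)
    return fitted


def calibrate_scores(scores, treated):
    """Map raw scores to calibrated ones via isotonic regression of the
    treatment indicator on the score (assumed calibration method).
    Tied scores are not pooled in this sketch."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    fitted = pava([treated[i] for i in order])
    calibrated = [0.0] * len(scores)
    for rank, i in enumerate(order):
        calibrated[i] = fitted[rank]
    return calibrated


def ipw_ate(outcomes, treated, propensity, eps=0.01):
    """Normalized inverse-probability-weighted ATE estimate.
    Propensities are clipped to [eps, 1 - eps] (an assumed safeguard)."""
    w1 = w0 = y1 = y0 = 0.0
    for y, t, e in zip(outcomes, treated, propensity):
        e = min(max(e, eps), 1.0 - eps)
        if t:
            w1 += 1.0 / e
            y1 += y / e
        else:
            w0 += 1.0 / (1.0 - e)
            y0 += y / (1.0 - e)
    return y1 / w1 - y0 / w0
```

In practice one would fit the calibration map on held-out data, then compare `ipw_ate` computed with the raw scores against the same estimate computed with `calibrate_scores(raw_scores, treated)`.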

PyDTS: A Python Package for Discrete Time Survival Analysis with Competing Risks

Apr 12, 2022

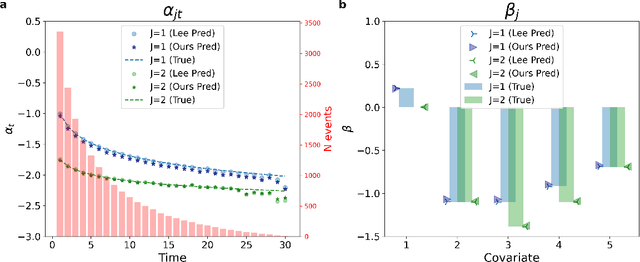

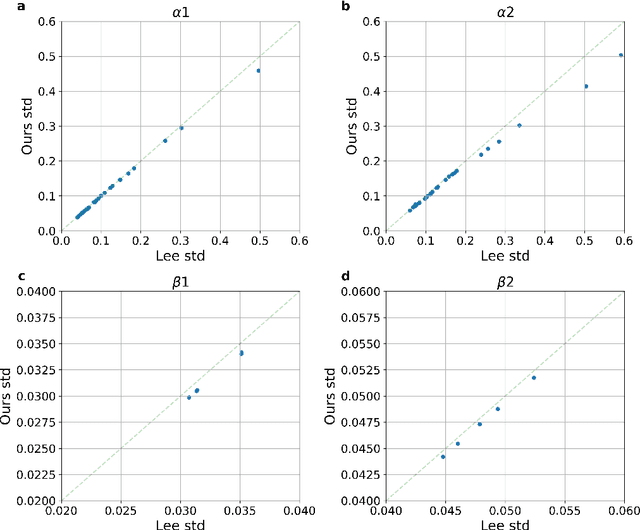

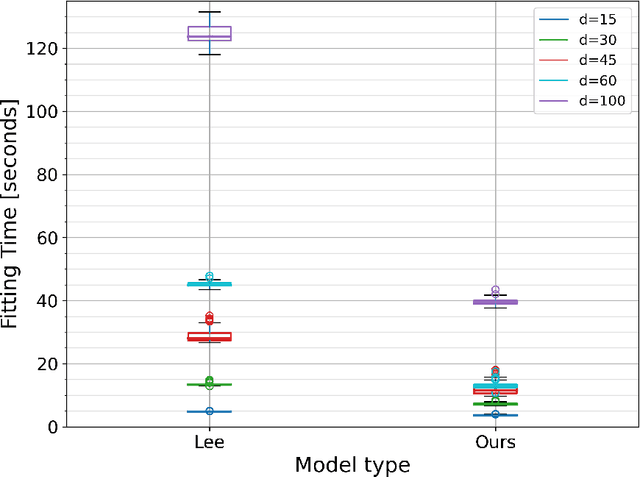

Abstract: Time-to-event analysis (survival analysis) is used when the outcome or response of interest is the time until a pre-specified event occurs. Time-to-event data are sometimes discrete, either because time itself is discrete or because failure times are grouped into intervals or measurements are rounded. In addition, the failure of an individual could be one of several distinct failure types, known as competing risks (events) data. This work focuses on discrete-time regression with competing events. We emphasize the main difference between the continuous and discrete settings with competing events, develop a new estimation procedure, and present PyDTS, an open-source Python package that implements our estimation procedure and other tools for discrete-time survival analysis with competing risks.
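As a small illustration of the discrete-time competing-risks setting, the sketch below computes overall survival and cumulative incidence functions from cause-specific hazards, using the standard textbook definitions: the hazard of cause j at time t is the probability of failing from cause j at t given survival up to t, overall survival multiplies the per-period probabilities of no event, and the cumulative incidence of cause j sums hazard times prior survival. This is not the PyDTS API and not the estimation procedure from the paper; the function name `survival_and_cif` and the input layout are assumptions for illustration.

```python
def survival_and_cif(hazards):
    """Overall survival and cumulative incidence from discrete hazards.

    hazards[j][t] is the assumed cause-j hazard at the (t+1)-th time point.
    Returns (survival, cif), where survival[t] is the probability of no
    event through that time point and cif[j][t] is the probability of
    failing from cause j by that time point.
    """
    n_times = len(hazards[0])
    survival = []
    cif = [[] for _ in hazards]
    surv_prev = 1.0                 # survival just before the current time
    cum = [0.0] * len(hazards)      # running cumulative incidence per cause
    for t in range(n_times):
        for j, h in enumerate(hazards):
            # Fail from cause j now: survived so far, then cause-j hazard.
            cum[j] += h[t] * surv_prev
            cif[j].append(cum[j])
        # Survive this period only if no cause occurs.
        surv_prev *= 1.0 - sum(h[t] for h in hazards)
        survival.append(surv_prev)
    return survival, cif


# Two causes over two time points (made-up hazards for illustration).
survival, cif = survival_and_cif([[0.25, 0.5], [0.25, 0.0]])
```

At every time point, survival plus the cumulative incidences of all causes sums to one, which is a useful sanity check on any set of estimated hazards.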
