Rohini Poolat

Securing Autonomous Service Robots through Fuzzing, Detection, and Mitigation

Mar 12, 2020

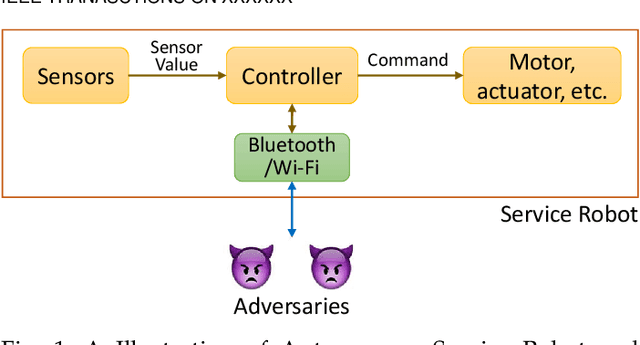

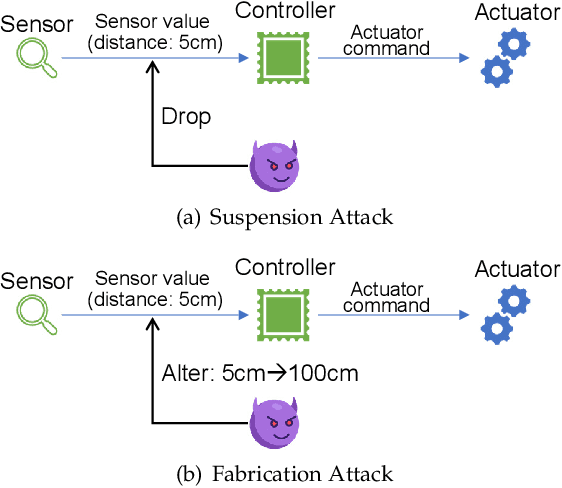

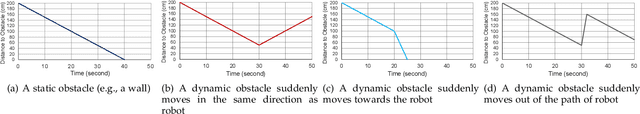

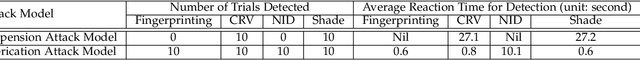

Abstract: Autonomous service robots share social spaces with humans, usually working alongside them on domestic or professional tasks. Cyber security breaches in such robots undermine the trust between humans and robots. In this paper, we investigate how to understand and induce security threats at the design and implementation stage of a mobile autonomous service robot. To this end, we leverage the idea of directed fuzzing and design RoboFuzz, which systematically tests an autonomous service robot in line with the robot's states and the surrounding environment. RoboFuzz studies the critical environmental parameters that affect the robot's state transitions and subjects the robot control program to rational but harmful sensor values so as to compromise the robot. Furthermore, we develop detection and mitigation algorithms to counteract the impact of RoboFuzz. The main difficulty lies in the trade-off among limited computation resources, timely detection, and the retention of work efficiency during mitigation. In particular, we propose detection and mitigation methods that use historical records of obstacles to detect obstacle appearances that are inconsistent with untrustworthy sensor values, and that navigate the robot to keep moving so that it can carry on its planned task. By doing so, we keep the cost of detection and mitigation low while also retaining the robot's work efficacy. We have prototyped RoboFuzz together with the detection and mitigation algorithms in a real-world mobile robot. Experimental results confirm that RoboFuzz achieves a success rate of up to 93.3% in imposing concrete threats on the robot, while the overall loss of work efficacy is merely 4.1% in mitigation mode.
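The history-based detection idea can be illustrated with a minimal sketch. It is not the paper's algorithm: the class name `ObstacleHistoryDetector`, the `Reading` type, and the parameters `max_speed`, `tolerance`, and `window` are all hypothetical. The sketch assumes static obstacles and a single distance sensor, so the reported distance cannot change faster than the robot itself can move. A reading that violates this bound is treated as untrustworthy and is kept out of the history.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Reading:
    t: float         # timestamp in seconds
    distance: float  # reported distance to the nearest obstacle, in metres


class ObstacleHistoryDetector:
    """Hypothetical consistency check against recently trusted readings."""

    def __init__(self, max_speed=0.5, tolerance=0.05, window=10):
        self.max_speed = max_speed   # assumed top speed of the robot (m/s)
        self.tolerance = tolerance   # assumed sensor noise margin (m)
        self.history = deque(maxlen=window)  # trusted readings only

    def is_consistent(self, reading):
        """Return True if the reading is physically plausible given history."""
        if not self.history:
            self.history.append(reading)
            return True
        last = self.history[-1]
        dt = reading.t - last.t
        # With static obstacles, distance can change by at most speed * time.
        allowed = self.max_speed * dt + self.tolerance
        ok = abs(reading.distance - last.distance) <= allowed
        if ok:
            self.history.append(reading)
        return ok

    def trusted_distance(self):
        """Last trusted distance, usable as a fallback during mitigation."""
        return self.history[-1].distance if self.history else None


detector = ObstacleHistoryDetector()
results = [
    detector.is_consistent(Reading(0.0, 2.00)),
    detector.is_consistent(Reading(0.1, 1.95)),
    detector.is_consistent(Reading(0.2, 0.30)),  # sudden phantom obstacle
    detector.is_consistent(Reading(0.3, 1.90)),
]
```

Because rejected readings never enter the history, a later plausible reading is still compared against the last trusted one. A mitigation step could then plan against `trusted_distance()` instead of stopping, in the spirit of keeping the robot moving on its task. The check is a constant-time comparison per reading, which suits a platform with limited computation resources.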
