Robert Barton

Spectral-Efficient LoRa with Low Complexity Detection

Jan 06, 2026

Abstract: In this paper, we propose a spectral-efficient LoRa (SE-LoRa) modulation scheme with a low-complexity successive interference cancellation (SIC)-based detector. The proposed scheme significantly improves the spectral efficiency of LoRa modulation while achieving acceptable error performance relative to conventional LoRa modulation, especially at higher spreading factor (SF) settings. We derive the joint maximum likelihood (ML) detection rule for SE-LoRa, which turns out to have high computational complexity. To overcome this issue, we exploit the frequency-domain characteristics of the dechirped SE-LoRa signal and propose a low-complexity SIC-based detector whose computational complexity is on the order of that of conventional LoRa detection. Computer simulations show that SE-LoRa with the proposed low-complexity SIC-based detector improves the spectral efficiency of LoRa modulation by up to $445.45\%$, $1011.11\%$, and $1071.88\%$ for SF values of $7$, $9$, and $11$, respectively, while keeping the error performance within $3$ dB of conventional LoRa at a symbol error rate (SER) of $10^{-3}$ in Rician channel conditions.
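The abstract does not say how SE-LoRa superimposes symbols, so the following is only a generic illustration of SIC on dechirped LoRa signals, not the authors' detector. It assumes, hypothetically, that the received block is a sum of `k` chirps with distinct cyclic shifts and distinct powers. Each stage dechirps, takes one $M$-point FFT, picks the strongest bin, estimates that chirp's complex gain, and subtracts the reconstructed chirp before the next stage. All function names are illustrative and NumPy is assumed.

```python
import numpy as np

def lora_chirp(m: int, sf: int) -> np.ndarray:
    """Discrete-time LoRa chirp with cyclic shift m, one sample per chip."""
    M = 2 ** sf
    n = np.arange(M)
    return np.exp(1j * 2 * np.pi * (n ** 2 / (2 * M) + (m / M - 0.5) * n))

def sic_detect(rx: np.ndarray, sf: int, k: int) -> list:
    """Hypothetical SIC: detect k superimposed chirps, strongest first."""
    M = 2 ** sf
    base = lora_chirp(0, sf)                       # reference (unshifted) upchirp
    residual = rx.astype(complex).copy()
    detected = []
    for _ in range(k):
        spectrum = np.fft.fft(residual * np.conj(base))  # dechirp, then one FFT
        m_hat = int(np.argmax(np.abs(spectrum)))          # strongest remaining tone
        gain_hat = spectrum[m_hat] / M                     # complex gain estimate
        residual -= gain_hat * lora_chirp(m_hat, sf)       # cancel the detected chirp
        detected.append(m_hat)
    return detected
```

Because each stage costs one $M$-point FFT, as in conventional LoRa detection, the total work grows only linearly with `k` under these assumptions.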

A Tutorial on Chirp Spread Spectrum for LoRaWAN: Basics and Key Advances

Oct 16, 2023

Abstract: Chirp spread spectrum (CSS) modulation is at the heart of long-range (LoRa) modulation, which is used in long-range wide area networks (LoRaWAN) for internet of things (IoT) scenarios. Despite being a proprietary technology owned by Semtech Corp., LoRa modulation has drawn much attention from the research and industry communities in recent years. However, to the best of our knowledge, a comprehensive tutorial investigating CSS modulation in the LoRaWAN application is missing from the literature. Therefore, in the first part of this paper, we provide a thorough analysis and tutorial of CSS modulation as modified by the LoRa specifications, discussing signal generation, detection, error performance, and spectral characteristics. In the second part, we summarize key recent advances in CSS modulation for IoT networks under four main categories: transceiver configuration and design, data rate improvement, interference modeling, and synchronization algorithms.
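As a rough companion to the signal generation and detection topics listed above, here is a minimal sketch of conventional LoRa modulation and dechirp-plus-FFT detection. It assumes the common discrete-time model with one sample per chip, $M = 2^{SF}$, and symbol $m \in \{0,\dots,M-1\}$ mapped to $x_m[n] = e^{j2\pi\left(n^2/(2M) + (m/M - 1/2)\,n\right)}$; the function names are hypothetical and NumPy is assumed.

```python
import numpy as np

def lora_chirp(m: int, sf: int) -> np.ndarray:
    """Baseband LoRa symbol m in [0, 2**sf) at one sample per chip."""
    M = 2 ** sf
    n = np.arange(M)
    # Quadratic phase sweeps the band once; the linear term sets the cyclic shift m
    return np.exp(1j * 2 * np.pi * (n ** 2 / (2 * M) + (m / M - 0.5) * n))

def lora_detect(rx: np.ndarray, sf: int) -> int:
    """Dechirp with the conjugate base upchirp, then take the strongest FFT bin."""
    tone = rx * np.conj(lora_chirp(0, sf))   # leaves exp(j*2*pi*m*n/M) plus noise
    return int(np.argmax(np.abs(np.fft.fft(tone))))

def lora_spectral_efficiency(sf: int) -> float:
    """Bits/s/Hz: SF bits per symbol of duration 2**SF / B in bandwidth B."""
    return sf / 2 ** sf
```

For instance, `lora_spectral_efficiency(7)` evaluates to $7/128 \approx 0.0547$ bit/s/Hz, which illustrates why the higher, more robust SF settings are far less spectrally efficient.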

Low-Complexity Design and Detection of Unitary Constellations in Non-Coherent SIMO Systems for URLLC

May 04, 2023

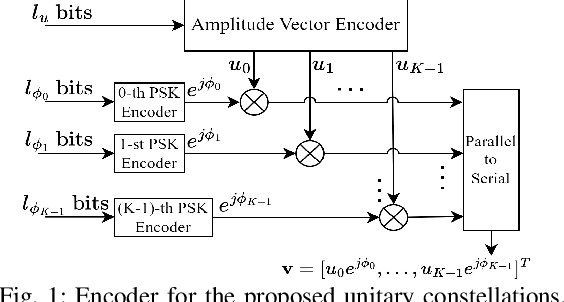

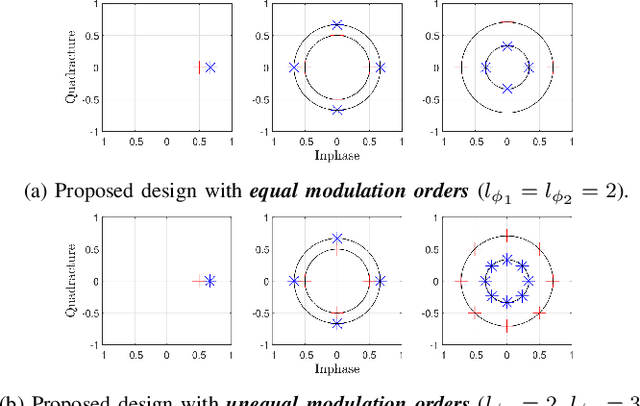

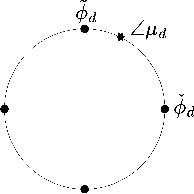

Abstract: In this paper, we propose a novel multi-symbol unitary constellation structure for non-coherent single-input multiple-output (SIMO) communications over block Rayleigh fading channels. To facilitate the design and detection of large unitary constellations at reduced complexity, the proposed constellations are constructed as the Cartesian product of independent amplitude and phase-shift keying (PSK) vectors and hence can be detected iteratively. The amplitude vector can be detected by exhaustive search, whose complexity remains sufficiently low for short-packet transmissions. For detection of the PSK vector, we adopt a maximum a posteriori (MAP) criterion to improve the reliability of sorted decision-feedback differential detection (sort-DFDD), which yields near-optimal error performance when the transmitted PSK symbols in all time slots share the same modulation order. We call this detector MAP-based-reliability-sort-DFDD (MAP-R-sort-DFDD); it has polynomial complexity. When the modulation orders differ across time slots, we observe that undetected symbols with lower modulation orders significantly affect the detection of PSK symbols with higher modulation orders. We exploit this observation and propose an improved detector, called improved-MAP-R-sort-DFDD, which approaches the optimal error performance with polynomial time complexity. Simulation results show the merits of the proposed multi-symbol unitary constellation compared with competing low-complexity unitary constellations.
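Sort-DFDD and its MAP-based refinements build on differential detection, in which the unknown block-fading channel is removed by correlating consecutive received samples. The sketch below shows only plain two-symbol differential detection of differentially encoded M-PSK over $N_r$ receive antennas, assuming the channel is constant over the block; it is not MAP-R-sort-DFDD and ignores the amplitude vector and mixed modulation orders. Function names are hypothetical and NumPy is assumed.

```python
import numpy as np

def diff_encode(data: np.ndarray, M: int) -> np.ndarray:
    """Differential M-PSK: s_0 = 1 (reference), s_t = s_{t-1} * exp(j*2*pi*d_t/M)."""
    phases = np.concatenate(([0.0], np.cumsum(2 * np.pi * np.asarray(data) / M)))
    return np.exp(1j * phases)

def diff_detect(Y: np.ndarray, M: int) -> np.ndarray:
    """Two-symbol differential detection; Y has shape (N_r, T + 1).

    With a channel h constant over the block, Y[:, t] * conj(Y[:, t-1]) equals
    |h_r|^2 * exp(j*2*pi*d_t/M) per antenna, so the unknown phase cancels.
    """
    corr = np.sum(Y[:, 1:] * np.conj(Y[:, :-1]), axis=0)  # combine across antennas
    step = 2 * np.pi / M
    # Quantize the phase of each correlation to the nearest PSK phase index
    return np.mod(np.round(np.angle(corr) / step).astype(int), M)
```

Decision feedback in DFDD generalizes this by using previously detected symbols to build a longer reference, which is where sorting by reliability becomes useful.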

D2D-aided LoRaWAN LR-FHSS in Direct-to-Satellite IoT Networks

Dec 08, 2022

Abstract: In this paper, we present a device-to-device (D2D) transmission scheme for aiding the long-range frequency hopping spread spectrum (LR-FHSS) LoRaWAN protocol, with application in direct-to-satellite IoT networks. We consider a practical ground-to-satellite fading model, i.e., the shadowed-Rice channel, and derive the outage performance of the LR-FHSS network. With the help of network coding, we propose a D2D-aided LR-FHSS transmission scheme to improve network capacity and derive a closed-form outage probability expression for it. The analytical outage probability expressions for both LR-FHSS and D2D-aided LR-FHSS are validated by computer simulations for the different parts of the analysis, capturing the effects of noise, fading, unslotted ALOHA-based time scheduling, the receiver's capture effect, IoT device distributions, and the distance from node to satellite. In terms of total outage probability, D2D-aided LR-FHSS shows a considerable increase in network capacity of 249.9% and 150.1% at a typical outage of $10^{-2}$ for DR6 and DR5, respectively, compared with LR-FHSS. This comes at the cost of a minimum of one and a maximum of two additional transmissions per IoT end device in each time slot, imposed by the D2D scheme.
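The analysis above includes unslotted ALOHA-based scheduling. As a textbook reference point only, the sketch below computes the collision-free probability of pure (unslotted) ALOHA, assuming Poisson arrivals with rate $\lambda$, a fixed packet duration $T$, no capture effect, no fading, and no frequency hopping. Under these assumptions a packet survives only if no other transmission starts within $\pm T$ of it, giving success probability $e^{-2G}$ with offered load $G = \lambda T$. It is not the paper's LR-FHSS outage expression; function names are hypothetical and NumPy is assumed.

```python
import numpy as np

def aloha_success_closed_form(G: float) -> float:
    """Probability that a packet suffers no overlap in pure (unslotted) ALOHA."""
    return float(np.exp(-2.0 * G))

def aloha_success_mc(G: float, T: float = 1.0, horizon: float = 1e5, seed: int = 0) -> float:
    """Monte Carlo estimate: a packet survives if no other starts within T of it."""
    rng = np.random.default_rng(seed)
    lam = G / T                                   # arrival rate for offered load G
    n = rng.poisson(lam * horizon)                # number of packets in [0, horizon)
    t = np.sort(rng.uniform(0.0, horizon, n))     # sorted Poisson start times
    gaps = np.diff(t)
    ok_prev = np.concatenate(([True], gaps > T))  # previous packet ended before this start
    ok_next = np.concatenate((gaps > T, [True]))  # next packet starts after this one ends
    return float(np.mean(ok_prev & ok_next))
```

With $G = 0.5$, both functions should give a value close to $e^{-1} \approx 0.368$; the simulation is the natural place to add capture, fading, or hopping effects that the closed form omits.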

Joint Cluster Head Selection and Trajectory Planning in UAV-Aided IoT Networks by Reinforcement Learning with Sequential Model

Dec 01, 2021

Abstract: Employing unmanned aerial vehicles (UAVs) has attracted growing interest and has emerged as a state-of-the-art technology for data collection in Internet-of-Things (IoT) networks. In this paper, with the objective of minimizing the total energy consumption of the UAV-IoT system, we formulate the joint design of the UAV's trajectory and the selection of cluster heads in the IoT network as a constrained combinatorial optimization problem, which is NP-hard and challenging to solve. We propose a novel deep reinforcement learning (DRL) strategy with a sequential model that effectively learns, in an unsupervised manner, a policy for the UAV's trajectory design represented by a sequence-to-sequence neural network. Extensive simulations show that the proposed DRL method finds UAV trajectories that require much less energy than those of other baseline algorithms and achieves close-to-optimal performance. In addition, simulation results show that the model trained by the proposed DRL algorithm generalizes well to larger problem sizes without the need for retraining.

UAV Trajectory Planning in Wireless Sensor Networks for Energy Consumption Minimization by Deep Reinforcement Learning

Aug 01, 2021

Abstract: Unmanned aerial vehicles (UAVs) have emerged as a promising candidate solution for data collection in large-scale wireless sensor networks (WSNs). In this paper, we investigate a UAV-aided WSN in which cluster heads (CHs) receive data from their member nodes and a UAV is dispatched to collect data from the CHs along a planned trajectory. We aim to minimize the total energy consumption of the UAV-WSN system in a complete round of data collection. To this end, we formulate the energy consumption minimization problem as a constrained combinatorial optimization problem that jointly selects CHs from the nodes within clusters and plans the UAV's visiting order to the selected CHs. The formulated problem is NP-hard and hence hard to solve optimally. To tackle this challenge, we propose a novel deep reinforcement learning (DRL) technique, pointer network-A* (Ptr-A*), which efficiently learns from experience a UAV trajectory policy that minimizes energy consumption. The UAV's start point and the WSN with a set of pre-determined clusters are fed into Ptr-A*, which outputs a group of CHs and the visiting order to these CHs, i.e., the UAV's trajectory. For faster training, the parameters of Ptr-A* are trained in an unsupervised manner with the actor-critic algorithm on problem instances with a small number of clusters. At inference, three search strategies are also proposed to improve solution quality. Simulation results show that the models trained on 20-cluster and 40-cluster instances generalize well to UAV trajectory planning in WSNs with different numbers of clusters, without the need to retrain the models. Furthermore, the results show that our proposed DRL algorithm outperforms two baseline techniques.
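To make the combinatorial structure of the joint CH selection and visiting-order problem concrete, here is an exhaustive-search baseline for toy instances. It is not Ptr-A* or any DRL method: it assumes, for illustration only, that the UAV flies a closed tour from its start point and back, and that energy is proportional to flight distance, so tour length serves as the cost. The search space has $\prod_k |C_k| \cdot K!$ candidates for $K$ clusters, which is why learned policies are attractive at scale. Function names are hypothetical.

```python
import itertools
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

def tour_length(start: Point, stops: Sequence[Point]) -> float:
    """Closed tour length: start -> stops in the given order -> back to start."""
    path = [start, *stops, start]
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))

def brute_force_ch_tour(start: Point, clusters: Sequence[Sequence[Point]]):
    """Exhaustively pick one CH per cluster and a visiting order (toy sizes only)."""
    best_len, best_order = math.inf, None
    for chs in itertools.product(*clusters):        # prod(|C_k|) CH selections
        for order in itertools.permutations(chs):   # K! visiting orders each
            length = tour_length(start, order)       # distance used as energy proxy
            if length < best_len:
                best_len, best_order = length, order
    return best_len, best_order
```

A more realistic cost would add hovering and CH transmission energy, but the exhaustive loop structure stays the same.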

Hypergraph Pre-training with Graph Neural Networks

May 23, 2021

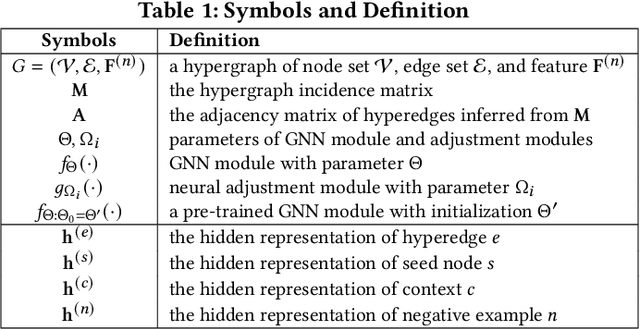

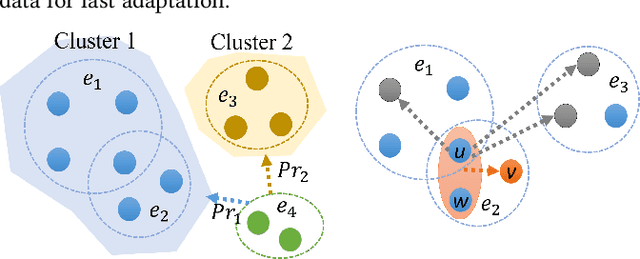

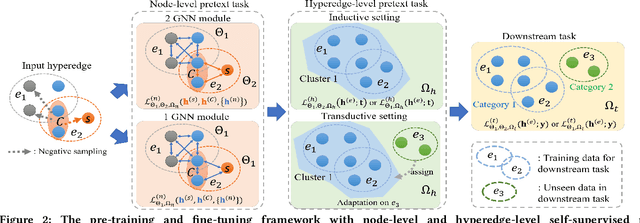

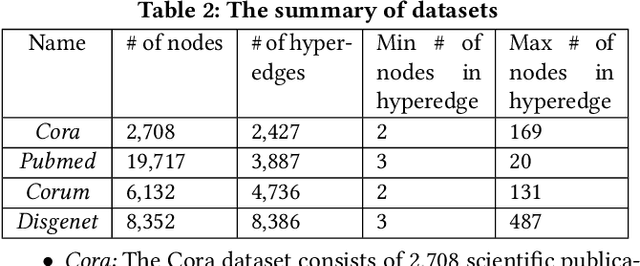

Abstract: Despite the prevalence of hypergraphs in a variety of high-impact applications, there are relatively few works on hypergraph representation learning, most of which focus primarily on hyperlink prediction and are often restricted to the transductive learning setting. Among other challenges, a major hurdle for effective hypergraph representation learning lies in the scarcity of labels for nodes and/or hyperedges. To address this issue, this paper presents an end-to-end, bi-level pre-training strategy with Graph Neural Networks for hypergraphs. The proposed framework, named HyperGene, has three distinctive advantages. First, it can ingest labeling information when available, but more importantly, it is mainly designed in a self-supervised fashion, which significantly broadens its applicability. Second, at the heart of HyperGene are two carefully designed pretexts, one at the node level and the other at the hyperedge level, which enable us to encode both local and global context in a mutually complementary way. Third, the proposed framework works in both transductive and inductive settings. When the two proposed pretexts are applied in tandem, they accelerate the adaptation of knowledge from the pre-trained model to downstream applications in the transductive setting, thanks to the bi-level nature of the proposed method. Extensive experimental results demonstrate that HyperGene (1) achieves up to 5.69% improvement in hyperedge classification and (2) improves pre-training efficiency by up to 42.80% on average.
