Risto Ritala

Planning for robotic exploration based on forward simulation

Jun 29, 2016

Abstract: We address the problem of controlling a mobile robot to explore a partially known environment. The robot's objective is to maximize the amount of information collected about the environment. We formulate the problem as a partially observable Markov decision process (POMDP) with an information-theoretic objective function, and solve it by applying forward simulation algorithms with an open-loop approximation. We present a new sample-based approximation for mutual information that is useful in mobile robotics and can be seamlessly integrated with forward simulation planning algorithms. We investigate the usefulness of POMDP-based planning for exploration and, to alleviate some of its weaknesses, propose combining it with frontier-based exploration. Experimental results in simulated and real environments show that, depending on the environment, applying POMDP-based planning for exploration can improve performance over frontier exploration.

* 19 pages, 11 figures; in Robotics and Autonomous Systems (2016)

Optimal Sensing via Multi-armed Bandit Relaxations in Mixed Observability Domains

Mar 15, 2016

Abstract: Sequential decision making under uncertainty is studied in a mixed observability domain. The goal is to maximize the amount of information obtained on a partially observable stochastic process under constraints imposed by a fully observable internal state. An upper bound for the optimal value function is derived by relaxing constraints. We identify conditions under which the relaxed problem is a multi-armed bandit whose optimal policy is easily computable. The upper bound is applied to prune the search space in the original problem, and the effect on solution quality is assessed via simulation experiments. Empirical results show effective pruning of the search space in a target monitoring domain.

* 6 pages, 2 figures

Optimizing Gaze Direction in a Visual Navigation Task

Feb 16, 2016

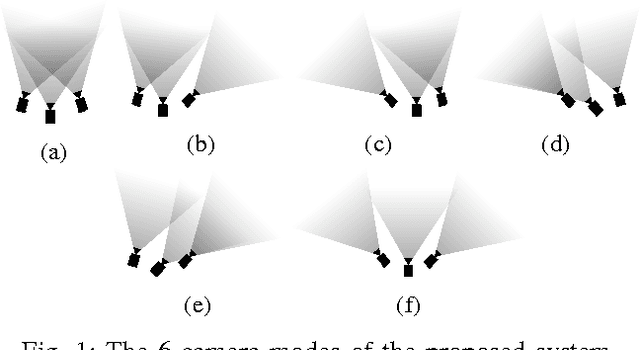

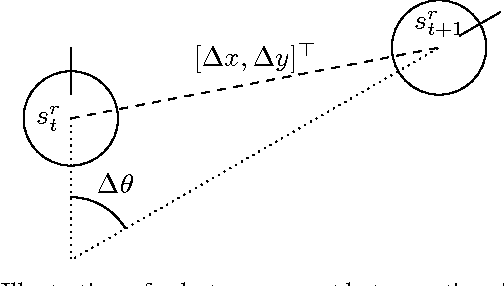

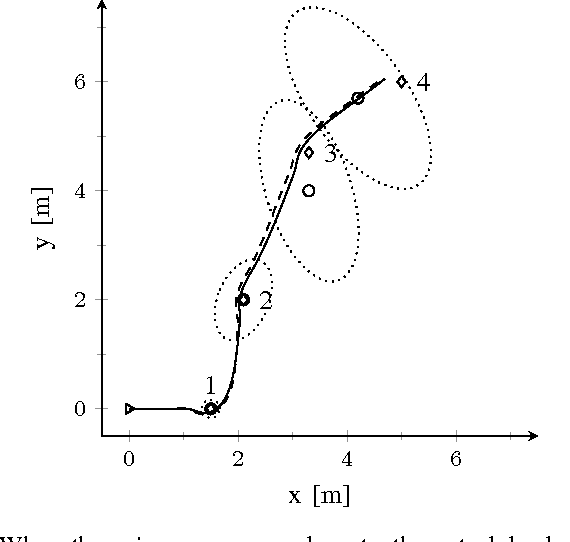

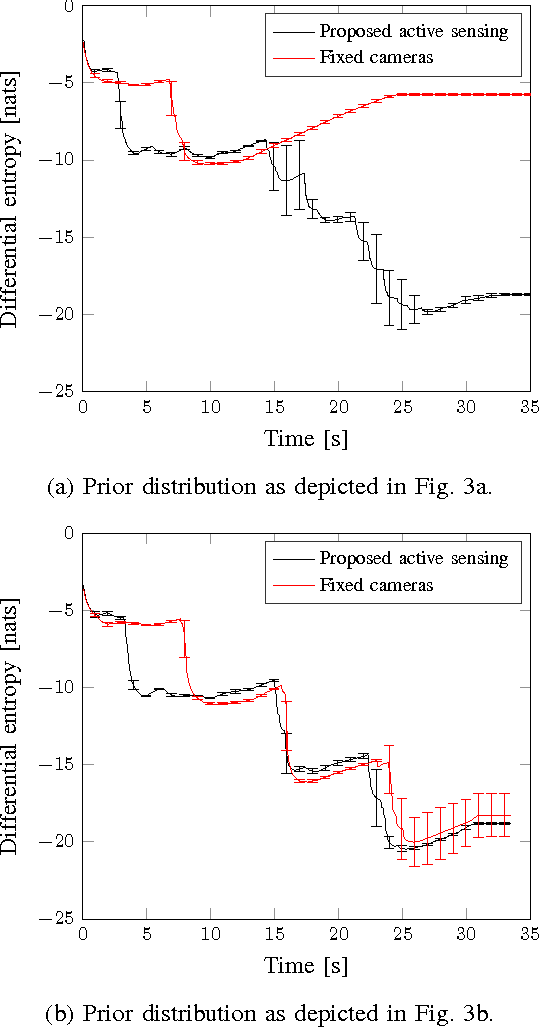

Abstract: Navigation in an unknown environment consists of multiple separable subtasks, such as collecting information about the surroundings and navigating to the current goal. In the case of pure visual navigation, all these subtasks need to utilize the same vision system, and therefore a way to optimally control the direction of focus is needed. We present a case study in which we model the active sensing problem of directing the gaze of a mobile robot with three machine vision cameras as a partially observable Markov decision process (POMDP) using a mutual information (MI) based reward function. The key aspect of the solution is that the cameras are dynamically used in either a monocular or a stereo configuration. The benefits of the proposed active sensing implementation are demonstrated with simulations and experiments on a real robot.
